New Mars Forums

#201 Re: Human missions » New NASA Director nominated » 2025-04-11 17:40:03

GW,

SpaceX may survive this, if Gwynne Shotwell can keep doing the right things without being overruled by Musk.

Can you tell us what "wrong things" Tesla has done, as a company, to deserve having their dealerships firebombed?

Can you tell us how any "wrong things" that Tesla may or may not have done, according to you, makes it okay for random people who happen to own Teslas, to have their cars vandalized on the street or at their homes or when they go to the store to buy groceries?

I think the left blames anyone and everyone but themselves for their own psychotic and anti-social behavior.

#202 Re: Not So Free Chat » When Science climate change becomes perverted by Politics. » 2025-04-11 16:43:51

Void,

Apart from the fact that more CO2 absolutely will increase warming, the extent to which further increases in atmospheric CO2 levels will increase temperature appears to be greatly exaggerated for ideological reasons. If there's no "global crisis", then there's no funding for the people studying it. The political left of the western world created an entire industry built around terrifying their religious faithful over the weather. They ran out of real issues to fight against. Our stocks of true racists and dictators have largely been depleted. The number will never be actually zero, but virtually all of the racism was a product of leftism, assuming you accept that radical leftists want dictatorship (maximal government control over individuals) and radical rightists want anarchy (maximal individual freedom). All the traditional humanity-centric issues the left historically championed have been accepted as normative by the majority of society, with the possible exception of transgenderism, simply because it forces people to play a game of pretend which is obviously false. I suppose the climate change religion is a kind of genius, but not the kind that will ever unite people in common cause. There is no such thing as a large overarching problem that leads to broad consensus and cooperation.

The people peddling thermodynamic runaway are doomsday cultists, plain and simple. Their mindset is no different at all from the people who build bunkers, collect dozens of guns, and buy buckets of freeze-dried food. Whereas the "guns and butter" doomsday preppers only spend their own money on such things, our doomsday scientologists spend everybody else's money pursuing their wildly contorted interpretation of reality. I'm okay with people spending as much of their own money on their apocalypse fantasies as they can afford, but if they want to spend my money, I draw the line at thermodynamic impossibility. We can spend money on nukes so that nuking us means whoever launches also gets nuked. Whether that's totally nuts or not, it's worked for 75+ years. Everyone who has nukes seems to understand why you have them but never willingly use them. We can maintain a conventional military to deter foreign invasion of America and our allies, although I think our military spending is already beyond what is reasonable and necessary. We've accumulated too many techno-gadgets, but training and raw numbers have been neglected in absurd ways. We've spent far too much on capabilities that we don't require. Wars between roughly equal peers are won or lost on the basis of local numerical superiority and firepower, and they always have been. We should maintain basic social safety net programs to ensure that our people don't starve in the streets when they get old or injured. We shall not use public money to meddle in the internal politics of other nations, nor to spread any kind of ideology, regardless of intent or tenets. We shall not use public money for political purposes. We shall not use public money to make rich people richer.

For starters, a thermal runaway is a thermodynamic impossibility. If such a thing were ever possible, then it would've happened long ago, and there would be no life on Earth as a result. Since that clearly never happened, even when atmospheric CO2 levels were as high as 8,000ppm, these cultists have no argument with a scientific basis. More to the point, the universe as it exists today would never have existed. Any thermodynamic forcing function that doesn't have an associated negative feedback loop is destined to result in a thermal runaway, and since no part of the observable universe operates that way, we can safely conclude that such a runaway process is impossible in the far less extreme environments found on Earth. Venus is so hot because it's roughly 28% closer to the Sun, receiving about 1.9X Earth's solar irradiance, and its sea level atmospheric pressure is 93X greater than Earth's. If the entire Venusian atmosphere were Nitrogen instead of CO2, but surface atmospheric pressure were still 93X greater than it is here on Earth, then the surface temperatures would be almost as hot as they are with nearly pure CO2.

How do I know for a fact that a thermal runaway is impossible?

Temperature-Pressure Profiles for Solar System Planets with Thick Atmospheres

The Venusian atmosphere is nearly pure CO2. At the same atmospheric pressure of 1bar (Earth sea level), the temperature rise on Venus is 50K, or 90F. That's the temperature rise on Venus at 1bar of atmospheric pressure and a nearly-pure CO2 atmosphere, given an average TSI value of 2,601.3W/m^2 for Venus at Top-of-Atmosphere (ToA) vs 1,361W/m^2 for Earth at ToA. Notice that the temperature-pressure profiles for all planets look remarkably similar, despite having very different atmospheric compositions. Proximity to the Sun is the greatest determining factor of temperature at 1bar of pressure. If Earth's atmospheric composition were the same as it is for Venus, in which case no Oxygen-breathing animals would survive, then we would expect a maximum surface temperature rise of 47F purely attributable to a CO2 atmosphere. We also have liquid water oceans that change the surface albedo. Earth utterly lacks enough Carbon to produce that much CO2, so I won't lose any sleep over this.
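The insolation ratio behind the "1.9X" figure used in this post can be cross-checked two ways: directly from the two ToA TSI values quoted above, and from the inverse-square law using Venus's mean orbital distance of about 0.723 AU (a standard value assumed here, not stated in the post). The sketch also shows why a 50K difference is a 90F difference: converting a temperature *difference* uses only the 9/5 factor, with no offset.

```python
# Cross-check of the Venus/Earth insolation ratio and the K-to-F difference conversion.
EARTH_TSI = 1361.0   # W/m^2 at top of atmosphere (from the post)
VENUS_TSI = 2601.3   # W/m^2 at top of atmosphere (from the post)
VENUS_AU = 0.723     # assumed mean Venus-Sun distance in astronomical units

ratio_quoted = VENUS_TSI / EARTH_TSI
# Inverse-square law: irradiance scales as 1 / distance^2
ratio_inverse_square = 1.0 / VENUS_AU**2

def kelvin_delta_to_fahrenheit_delta(dk: float) -> float:
    """Convert a temperature difference (not an absolute temperature) from K to F."""
    return dk * 9.0 / 5.0

print(f"quoted TSI ratio:  {ratio_quoted:.3f}")
print(f"inverse-square:    {ratio_inverse_square:.3f}")
print(f"50 K rise in F:    {kelvin_delta_to_fahrenheit_delta(50):.0f}")
```

Both routes land at roughly 1.91, so the quoted TSI values and the orbital distance agree.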

If Earth were at Venus's distance from the sun, its average surface temperature would be significantly higher, around 440°F (227°C), according to Astronomy Magazine. This is because Earth would receive nearly twice as much solar energy as it currently does.

...

The Earth's average surface temperature is roughly 14 degrees Celsius (57.2 degrees Fahrenheit). This temperature is a global average, taking into account the wide range of temperatures across different regions and seasons.

What actually "controls" Earth's climate?

Earth's distance from the Sun, quite obviously. 1.9X greater solar insolation increases average surface temperature from 57F to 440F.

The politicians and activists are opportunists of the worst variety: people who prey upon the ignorance of the average person to coerce them into buying pointless things to "solve" a problem they have no hope of solving, first because they have no way of even measuring the results of the purported solutions, and second because their solutions never actually replace what we presently use for energy. This is downright silly to anyone who is not a member of the death cult. That behavior is what led people such as myself to initially discount that the effect was even real. Josh changed my thinking on this after pointing me in the right direction. The warming effect of CO2 is quite real, but so small that the warming which has already taken place represents the lion's share of all the warming that will take place without radical changes in atmospheric composition and pressure. Any solution which does not remove nearly all of the atmospheric CO2 produced since the Industrial Revolution is rather pointless.

In actual practice, climate change's most significant effect on human life today, and over the foreseeable future, is to serve as a political identity issue to unite leftists who would otherwise have nothing in common with each other, and would otherwise start fighting each other for power, just as they already are. It's an issue intended to put a bunch of misfits on the same team, in order to "fight the right". If you take away their climate change issue, there's really no other issue most of them agree on.

Right now we see them burning down EVs and EV dealerships here in America. That astronaut fella from Arizona, Mark Whatshisname (sorry, genuinely can't remember, because he's not very important, but he or his brother spent a year in space aboard ISS), sold his Tesla and bought a giant diesel powered truck. He made a big deal about it by posting it to anti-social media. He doesn't give a crap about climate change and never did. Like the vast majority of leftists, Mark is a rapacious liar who will never tell you what he actually thinks, because he mostly doesn't. That's a sad thing to say about someone who holds a PhD, but it would seem that neither intellectual accomplishment nor experience equates to honesty, because honesty requires morality, and leftism has no values it actually adheres to, beyond "follow the other lemmings". His group-think is handed down to him from on-high and he doesn't question it, because independent thought signals the death knell of group-think. They're going to mandate that everyone buys an EV, but it's not going to come from the world's largest and only economically successful EV company. Every other traditional car company has lost many billions on EVs, to the point that all the major car makers are pushing for more affordable alternatives to pure EVs, which they cannot make at scale for anything approaching the price of a gasoline powered car.

The political left in this country used to be dominated by free thinkers who questioned everything, which is the only reason why we discovered that climate change could be a potential problem to begin with. Their boundless creativity was how our nation grew to become what it is today. Unfortunately, after political power was consolidated they quit trying to win intellectual arguments using logic and facts, which was previously the metric that demonstrated to the world which ideas were "closest to being correct". They descended into "might makes right" (the garden variety bullying the political left is so famous for) as their power base became dominant across academia, media, culture, and politics. The political right only wants to be left to their own devices, which now represents a serious threat to the orthodox groupthink of the radical elements of the left. Anyone who questions the agenda is treated the exact same way the Catholic Church treated blasphemers. The people who used to question everything now question very little. The political right is forced to think through their positions to a far greater degree than the political left, if only for self-preservation from the violence that the left is equally famous for. The left now believes things on the basis of political association. It's an inversion of historical norms. Group association is a terrible reason to think or believe anything.

If you're wondering why this issue animates me so much, it's because I don't really belong to the political right or left, but I'm just as much of a misfit as the people on the left. The only reason I'm not on the left is my abject refusal to indulge in groupthink. I grew up admiring the intellectuals on the left, but they abandoned intellectualism. I can't be a classical liberal / independent because I think their beliefs about most contentious issues are every bit as childish as what the left has become. For the most part, these independents are leftists who are irked by the lack of thought coming from the political left. Much like the atheist left, they cannot agree to adhere to a moral code. I would be fine with something as simple as, "It's never okay to screw people over who merely disagree with you, so treat others the way you wish to be treated". As an atheist myself, I don't feel the need to incessantly attack religion or religious people because I have accepted their reasoning, even when I disagree with it. That's always bothered me. I can't fathom how you can be a true atheist yet indulge in the same behavior as the religious do.

As best I can tell, that is how and why our political establishment has perverted science to serve a political cause.

#203 Re: Exploration to Settlement Creation » Habitat Design on Mars » 2025-04-11 10:17:54

Calliban,

That's a beautiful city.

Enjoy your vacation / education.

#204 Re: Human missions » Starship is Go... » 2025-04-11 10:06:20

Dr Clark,

Antonov AN-225's wing area was 905m^2 and wing tank fuel capacity was 375m^3.

Concorde's wing area was 358m^2 and internal fuel capacity was 119.5m^3.

For Starship to have 1,800m^2 of wing area, the wing's fuel capacity would be at least 600m^3, which is a third of Starship's present propellant capacity. Since 304L stainless is such a weak structural metal relative to its mass, the wing would need to carry propellant and be shaped to resist both internal pressurization loads and aero loads lower in the atmosphere. This proposal would increase the weight of Starship to impractical levels, which is why it won't be done. A lifting body Starship, which may or may not remain within tolerable mass limits, would require a complete redesign to provide 1,800m^2 of lifting surface area.
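The 600m^3 floor appears to come from scaling the fuel-volume-per-wing-area ratios of the two aircraft quoted above. A minimal sketch, assuming fuel volume grows linearly with wing area (a simplification: real wing volume also depends on thickness-to-chord ratio and planform), and noting that Concorde's figure is total internal fuel rather than wing-only fuel:

```python
# Proportional scaling of fuel volume with wing area (linear-scaling assumption).
AIRCRAFT = {
    "An-225": (905.0, 375.0),    # (wing area m^2, fuel volume m^3), from the post
    "Concorde": (358.0, 119.5),
}
STARSHIP_WING_AREA = 1800.0      # m^2, the proposal under discussion

def scaled_fuel_volume(wing_area_m2: float, fuel_m3: float, target_area_m2: float) -> float:
    """Fuel volume at target_area_m2 if volume per unit wing area stays constant."""
    return fuel_m3 / wing_area_m2 * target_area_m2

for name, (area, fuel) in AIRCRAFT.items():
    vol = scaled_fuel_volume(area, fuel, STARSHIP_WING_AREA)
    print(f"{name}: {fuel / area:.3f} m^3 per m^2 -> {vol:.0f} m^3 at 1,800 m^2")
```

Concorde's ratio reproduces roughly 601m^3, while An-225's gives roughly 746m^3, which is why 600m^3 reads as a lower bound.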

#205 Re: Science, Technology, and Astronomy » Utilizing Superpower (Per Rethink X, Tony Seba) » 2025-04-09 15:41:55

Void,

Cost is what matters, but efficiency tends to drive cost, and they said they're betting on solar electricity "only getting cheaper in the future", without explaining where their cheap photovoltaic panels will be sourced from. I like the fact that they used cheap and abundant materials, but they're also using a lot of high energy specialty metals without explaining where they're getting those from.

This group of people would make more of an immediate impact by working for an oil and gas company that decided they were tired of hoping for profits from drilling activities, and instead decided they wanted guaranteed money by building out a pilot scale plant that produces gasoline or diesel instead of natural gas, because gasoline and diesel are easier to store. Heck, Propane is easier to store than natural gas. If we had low cost synthetic Propane, the conversion cost for piston engines is nominal.

Guaranteed money on the basis of energy that does not need to be mined or first provided as electricity is what the focus of President Trump's energy policies should be. We already have all the bits and pieces required, but nobody has built out a synthesis plant that operates at sufficient scale to compete with mined (drilled) hydrocarbon fuels.

The only true invention I saw there was using limestone on a conveyor belt to capture atmospheric CO2. That could be enormously scaled up to collect CO2 feedstock, but we need an enormous plant to do that.

We also need to build a plant that thermally dissociates Hydrogen from water. Not using electricity implies concentrated sunlight alone delivers the input energy, without a bunch of expensive and complex conversions into electricity or other forms of energy. No specialty metals like Copper or Nickel are required to do that. We cannot easily or quickly scale-up production of electricity without access to vast quantities of specialty metals. Pure thermal plants are easier to build, easier to maintain, and the materials they require are abundant.

Until our creative people move past their fixation on electricity and electronics, none of their good ideas will work at the scale required.

High temperature CSP plants convert 80% of the input energy from the Sun into heat without using anything too exotic. Increase the operating temperature of the receiver to 3,000C and over half of the Hydrogen atoms in water get thermally dissociated without any electricity or catalysts consumed or other forms of energy input. Such plants have to be large and that makes them expensive, but they're not very sophisticated machines at the end of the day.

You need about 100m^2 of solar concentrator area per kilogram of Hydrogen produced per hour, and you have about 6 to 8 productive hours per day. A gallon of gasoline contains about 1.1kg of Hydrogen, so each gallon of synthetic gasoline requires that much Hydrogen feedstock, which implies 110m^2 of collector area per gallon per hour.

We use 376 million gallons per day, so the array must be 5,170,000,000m^2 (5,170km^2, or roughly 71.9km by 71.9km) if we assert we will have 8 productive hours per day. Making the Hydrogen is only one step of the process, but it's one of the most energy intensive steps. Collecting CO2 is the other most energy intensive step. After those two steps are accounted for, and without drawing another Watt of electrical power from our grid, the Sabatier reaction and the Socony-Mobil process that converts natural gas or syn gas into storable gasoline / diesel / kerosene is rather trivial.
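The collector sizing chain in the two paragraphs above can be reproduced from the post's own inputs: 100m^2 per kg/h of Hydrogen, 1.1kg of Hydrogen feedstock per gallon, 376 million gallons per day, and 8 productive hours. This is a sketch of the Hydrogen-step arithmetic only; it does not size the CO2 capture or the downstream synthesis steps.

```python
import math

M2_PER_KG_H2_PER_HOUR = 100.0  # concentrator area per kg/h of Hydrogen (from the post)
KG_H2_PER_GALLON = 1.1         # Hydrogen feedstock per gallon of synthetic gasoline (from the post)
GALLONS_PER_DAY = 376e6        # US gasoline consumption (from the post)
PRODUCTIVE_HOURS = 8.0         # upper end of the post's 6 to 8 productive hours

def collector_area_m2(gallons_per_day: float, hours: float) -> float:
    """Area needed to produce a full day's Hydrogen feedstock within `hours` of sunlight."""
    gallons_per_hour = gallons_per_day / hours
    m2_per_gallon_per_hour = M2_PER_KG_H2_PER_HOUR * KG_H2_PER_GALLON  # 110 m^2
    return gallons_per_hour * m2_per_gallon_per_hour

area = collector_area_m2(GALLONS_PER_DAY, PRODUCTIVE_HOURS)
side_km = math.sqrt(area) / 1000.0  # side of an equivalent square array
print(f"{area:.3e} m^2 = {area / 1e6:,.0f} km^2, about {side_km:.1f} km on a side")
```

At the 6-hour end of the post's range, the same function gives roughly 6,890km^2, or about 83km on a side.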

Gasoline alone, at a mere $2/gallon, represents $275B of economic activity per year. CSP solar power plants can operate for 75 years, and some have already operated for over 50 years. Over a mere 50 years, that is $13.75T in revenue. These plants may be expensive to construct, but they won't cost anywhere near $13.75T. We've already spent $5T to $10T on photovoltaics and wind turbines, and at best they account for 2% of the global total primary energy supply, which means they were a terrible waste of money and time.
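The revenue figures follow from the price and daily volume already given. A minimal check, assuming a flat $2/gallon and constant 376 million gallons per day for every day of every year:

```python
PRICE_PER_GALLON = 2.0   # USD, from the post
GALLONS_PER_DAY = 376e6  # from the post
YEARS = 50

annual_revenue = PRICE_PER_GALLON * GALLONS_PER_DAY * 365
lifetime_revenue = annual_revenue * YEARS
print(f"annual: ${annual_revenue / 1e9:.1f}B, over {YEARS} years: ${lifetime_revenue / 1e12:.2f}T")
```

The unrounded result is about $274.5B per year and $13.72T over 50 years; the post's $13.75T is 50 times the rounded $275B.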

Supplying all of the liquid fuels American society requires to operate represents 25% of global consumption. I estimated that it would cost around $300B to $600B to supply the US with 100% of its liquid fuels demand. If we actually build this type of plant, it's guaranteed cash money for the oil company, who can run the plant for the government for a small but guaranteed profit, in the same way that a government arsenal is run by munitions contractors like Winchester / Remington / Federal. The government fronts the money to build the plant, the oil company provides the labor force, and limitless consumer demand ensures there is always a customer waiting to purchase the product. Since President Biden's administration accumulated $12T in debt but produced very little, $392B of which was their "green energy" initiatives that produced nothing, this time we can use the money to build the infrastructure required to ensure that gasoline remains at $2/gallon over the next human lifetime.

We have the land area, the money, the materials, the know-how, and the labor force to build this. You don't need a college degree to operate the plant. Basic mechanical knowledge is sufficient, as it already is for the rest of the oil and gas industry. We're inventing nothing here, and we've had the basic tech required to do this since the 1970s. It's a direct application of existing technology with very little in the way of patented IP. This is the only realistic way we're ever going to arrest atmospheric CO2 increases, because there's a profit to be made and no input material impossibilities are required for the plant to be constructed, such as a 1,000%+ increase in mining of specialty metals. We need a lot of steel, Aluminum, concrete, a little bit of Graphite, and some dirt-common catalysts for the Sabatier reaction. A 5 to 10 year build-out is very realistic.

#206 Re: Meta New Mars » kbd512 Postings » 2025-04-09 13:08:28

tahanson43206,

If you find a refractory metal alloy that matches the coefficient of thermal expansion of Graphite by the time it reaches 3,000K, please let me know, because that would be very interesting to me. Until then, I'm going to presume that we're not using metal since the only metals I'm aware of with melting points at or above the target temperature are Niobium, Molybdenum, Tantalum, Rhenium, and Tungsten. None of those metals have CTEs close to Graphite and none of them transfer heat as well as Graphite.

Edit:

Since you're fixated on using metal, how about a 90% Tungsten 10% Copper alloy, which will still melt near 3,400C / 3,673K?

That alloy is at least weldable and formable without too much difficulty, and is noted for good thermal fatigue properties. The materials cost alone will be around half a million dollars for 1,200kg of material or so ($400/kg).

Take a look at how far the yield strength figures drop by the time you reach the temperature range we're operating at:

Yield strength of Tungsten and some of its high strength alloys

25ksi is all you're going to get at 3,000K, as the chart above shows. It's better than the 6.5ksi of fine grain synthetic Graphite, but I have no idea what the creep characteristics of that alloy will be, nor how it will perform with hot flowing Hydrogen. We can use the same aerospace coatings to try to slow the rate of embrittlement, but that's about it.

Edit #2:

In case you're wondering, I chose W-Cu alloy based upon its low CTE and higher than average resistance to Hydrogen embrittlement, but I don't have data for 3,000K. As with all other metals, it's almost silly putty at 3,000K, relative to room temperature, but the only other alloys capable of surviving at the temperatures in question are even more expensive than W-Cu alloy and there's not much data about their Hydrogen embrittlement resistance in the temperature range we're interested in. I think Niobium alloys are the only other type that might be suitable, but I don't know much about them because they're very exotic stuff. Maybe NASA has some data on them. Niobium alloys have been used in uncooled rocket engine nozzles during the Apollo Program. The LEM's main engine, for example, was made from a Niobium alloy of some kind.

#207 Re: Interplanetary transportation » Focused Solar Power Propulsion » 2025-04-09 12:53:12

As far as I'm aware, Total Solar Irradiance (TSI) at top-of-atmosphere (ToA) is 1,361 Watts per square meter.

57,768m^2 * 1,361W/m^2 = 78,622,248W

40,000,000W / 78,622,248W = 50.88% efficiency?

That seems rather low. I can understand a 10% to perhaps 25% efficiency / power loss, but not 50%. High temperature solar thermal power plants operated here on the surface of the Earth capture 60% to 80% of the incoming power as heat, so I'm rejecting your AI program's 50% power loss figure as unrealistic.
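The same efficiency check, extended to the 10% to 25% loss range the post considers reasonable. The 57,768m^2 area and 40MW output are the figures under discussion; the sketch only compares delivered power against incident power and models no optics.

```python
TSI_W_PER_M2 = 1361.0         # average ToA irradiance near Earth (from the post)
COLLECTOR_AREA_M2 = 57_768.0  # collector area under discussion
DELIVERED_POWER_W = 40e6      # output figure being questioned

incident = COLLECTOR_AREA_M2 * TSI_W_PER_M2
efficiency = DELIVERED_POWER_W / incident
print(f"incident power: {incident:,.0f} W, implied efficiency: {efficiency:.2%}")

# Delivered power if losses were only 25% or 10%, per the post's expectation
for capture in (0.75, 0.90):
    print(f"{capture:.0%} capture: {incident * capture / 1e6:.1f} MW")
```

At 75% to 90% capture, the same array would deliver roughly 59MW to 71MW rather than 40MW.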

Edit:

1,361W/m^2 is an average figure used by NASA. Earth's orbit about the Sun is not perfectly circular, but it's close enough that the average figure will do, and is the figure that NASA uses.

#208 Re: Interplanetary transportation » Focused Solar Power Propulsion » 2025-04-08 22:08:58

tahanson43206,

I would note that in a fission reactor design such as NERVA the heat exchanger tubes are enclosed by the mass of the reactor, which provides support against any pressure that may occur inside the tube.

Resisting pressure from the hot expanding Hydrogen gas was borne solely by the mechanical strength of the Graphite fuel rod assemblies. Please think about why that must be the case. If the Hydrogen gas is not being forced through those tubes, and whatever pressure increase inside said Graphite tubes is not being resisted by the mechanical strength of those tubes, then where the heck is the Hydrogen supposed to go to absorb and remove the heat produced by the reactor?
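One way to put numbers on the internal-pressure question is the Lamé solution for a thick-walled cylinder, where the peak hoop stress sits at the bore. A minimal sketch: the channel dimensions and 5MPa pressure below are hypothetical placeholders, not NERVA or design values, and the 6.5ksi fine-grain synthetic Graphite figure quoted elsewhere in these posts is assumed to be the relevant tensile limit. Thermal stresses and erosion are ignored.

```python
KSI_TO_MPA = 6.894757
GRAPHITE_STRENGTH_MPA = 6.5 * KSI_TO_MPA  # ~44.8 MPa, figure quoted in the thread

def lame_bore_hoop_stress(p_internal: float, r_inner: float, r_outer: float) -> float:
    """Peak hoop stress at the bore of a thick-walled cylinder under internal pressure only:
    sigma = p * (ro^2 + ri^2) / (ro^2 - ri^2). Units of sigma match units of p."""
    if not 0 < r_inner < r_outer:
        raise ValueError("need 0 < r_inner < r_outer")
    return p_internal * (r_outer**2 + r_inner**2) / (r_outer**2 - r_inner**2)

# Hypothetical channel: 5 mm bore, 10 mm outer diameter, 5 MPa (~50 bar) Hydrogen
sigma = lame_bore_hoop_stress(5.0, 2.5e-3, 5.0e-3)
print(f"peak hoop stress: {sigma:.2f} MPa, margin: {GRAPHITE_STRENGTH_MPA / sigma:.1f}x")
```

With these placeholder dimensions the bore stress is about 8.3MPa, roughly a fifth of the quoted strength; real margins depend on the actual geometry, pressure, and temperature.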

The reactor pressure vessel was made from Inconel for reasons related to manufacturability of a complete reactor core. There was no such thing as 3D printing in the 1960s. They used a welded Inconel shell and regenerative cooling of the metallic components to prevent them from melting during engine operation. We don't need to do that, because we're not building a nuclear reactor core with multiple discrete components that must be made from different materials due to their intrinsic nuclear properties.

It appears that graphite can be selected for the heat exchanger duty, pending testing that shows it is not suitable.

The test results are already in. NERVA's Graphite fuel rod assemblies did not rupture inside the core, despite being internally pressurized with hot flowing hydrogen well above the pressures we want to use. To assert that the Inconel pressure vessel "supported" those fuel bundles is to misunderstand what "support" actually meant. The shell supported the weight of the entire reactor assembly on the ground and during vehicle acceleration into orbit, and it kept all of its many components "fastened together", specifically because it had to be built with discrete components.

A reactor core cannot easily be fabricated as a monolithic device. Even if it could, a 3D printer to sinter a functional reactor core together did not exist back then. The major engineering problem was, and is, surface erosion of the Graphite Hydrogen coolant channels by the hot flowing Hydrogen. I prefer to spend my time learning about solutions to the actual engineering problems the design and test program encountered, and that was the major problem encountered while operating the reactor. The test program did not encounter major mechanical strength deficiencies with the Graphite fuel rod assemblies, despite those assemblies being non-homogeneous: they had to contain Uranium fuel particles, retain the resultant fission products, survive extreme neutron bombardment, and carry hot Hydrogen flowing through them.

To appease your concerns over the ultimate mechanical strength of sintered pure Graphite, how about wrapping the interior and exterior surfaces with a Carbon fiber tape / tow sintered into the surface using Graphite powder in a vacuum furnace?

The material described above is better known as Reinforced Carbon-Carbon (RCC). You may recall that NASA recently built a non-regeneratively cooled LOX/LH2 engine with a main combustion chamber and nozzle from this material. I posted several documents about it, and the chamber pressure was drastically higher than those we're contemplating using. It's very light, very strong, did not rupture, and repeatedly fired for far longer than RS-25 without any refurbishment. The primary problem they had with it, once again, was erosion of the internal walls from the extreme heat and corrosive combustion products. The solution to that problem, once again, was Zirconium Carbide or Hafnium Carbide surface coatings.

Pure Carbon fiber has a sublimation point every bit as high as Graphite (Carbon)... because Carbon fiber is... wait for it... Carbon. If we need a fiber with "more stretchiness" and more strength to really really "overkill" this heretofore undiagnosed tensile strength problem, then we can use CNT fiber. Heck, we could even mix Graphene flakes into the sintered Graphite powder and then we don't need to deal with the complications of fiber over-wraps. Any of those materials are available for nominal additional cost, because the percentage weight required to impart substantial additional strength is very low.

Therefore, my answer to any theoretical Graphite strength deficiencies, which were not encountered during actual test firing of a nuclear thermal rocket engine, is to address them by using different forms of Carbon with higher tensile strengths.

#209 Re: Interplanetary transportation » Focused Solar Power Propulsion » 2025-04-08 19:54:27

tahanson43206,

You're stuck on the idea that the Graphite cannot withstand the pressures being exerted internally, but adequate resistance to internal pressurization is what the engineers who worked on NERVA relied upon this material to do for their nuclear thermal rocket engine.

Nuclear grade synthetic Graphite costs $10,000 to $20,000 per metric ton:

https://www.eastcarb.com/graphite-nuclear-reactor/

We're not using "natural Graphite":

https://www.eastcarb.com/synthetic-grap … -graphite/

Properties of Graphite:

https://www.eastcarb.com/properties-of-graphite/

Please do me a favor and do a quick Google search on the preferred construction material used to fabricate heat exchangers that are required to process hot Hydrochloric, Sulfuric, and Phosphoric acids. Use the search term "graphite tube heat exchanger". See how many different companies make them, because there are quite a few. Graphite tube heat exchangers are the most common kind of heat exchanger in use in corrosive chemicals processing. If Graphite truly wasn't strong enough to resist the pressure, we'd have constant failures. I think the concern on your part is your limited interaction with Graphite as "pencil lead", which is typically not even pure Graphite, but that is NOT what I'm talking about using here.

Edit:

High-purity Hafnium Carbide costs about $3,300/kg.

We would expect a solar thermal rocket engine's material costs to be around $10,000 or so for a 1,000kgf to 2,000kgf engine. The fabrication cost will be more than that, but gives an idea of how much material we're talking about. The engine itself won't weigh more than 500kg or so.
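A rough raw-material tally using the two prices quoted in this post. The split is hypothetical: it assumes a 500kg engine that is almost entirely nuclear-grade synthetic Graphite plus 1kg of Hafnium Carbide coating, and it excludes fabrication, sintering, and coating labor.

```python
GRAPHITE_USD_PER_TONNE = (10_000, 20_000)  # nuclear-grade synthetic Graphite (from the post)
HFC_USD_PER_KG = 3_300                     # high-purity Hafnium Carbide (from the post)

def material_cost(graphite_kg: float, coating_kg: float, graphite_usd_per_tonne: float) -> float:
    """Raw material cost only; fabrication and labor are excluded."""
    return graphite_kg / 1000.0 * graphite_usd_per_tonne + coating_kg * HFC_USD_PER_KG

# Hypothetical split: 499 kg Graphite + 1 kg HfC coating = 500 kg engine
for price in GRAPHITE_USD_PER_TONNE:
    print(f"Graphite at ${price:,}/t: ${material_cost(499.0, 1.0, price):,.0f}")
```

Under that split the tally lands between about $8,300 and $13,300, consistent with the "$10,000 or so" estimate; every extra kilogram of coating adds $3,300.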

#210 Re: Meta New Mars » kbd512 Postings » 2025-04-08 15:53:32

More succinctly:

1. We can afford to have a larger expansion nozzle that pulls double duty as our heat input surface area for the sunlight to shine on. We don't have any reactor core mass, length, or radioactivity complications (control rods, fuel and moderator rods, coolant channels to cool the Inconel pressure vessel and Beryllium reflector) to account for. This allows us to achieve higher temperatures within our pure Graphite "engine". The overall weight is less than that of a reactor. The length of the nozzle could be as long as a complete nuclear thermal engine happens to be for equal power output, and it would make no difference to how difficult it was to transport or deploy the device.

Since we opted for pure Graphite construction, we don't need regenerative cooling. A solar thermal engine doesn't have a reactor pressure vessel, throat, and chamber made from Inconel, nor a giant reflector made from Beryllium, which must be kept cool since those metals all melt at much lower temperatures than Graphite. Inside a nuclear thermal rocket engine the Hydrogen propellant is most definitely confined within the propellant flow passages drilled through the Graphite fuel rods, so that base material absolutely is strong enough to withstand the pressures, because it has to be, else said Hydrogen gas expansion through the propellant flow passages ruptures those Graphite fuel rods and destroys the nuclear rocket engine. We will be applying less pressure, less stress, and to a homogeneous Graphite material, at virtually the same temperatures reached by the hottest parts of the nuclear thermal rocket engine core. The real difference is that our heat application won't be nearly as non-uniform as it is inside a nuclear thermal engine.

2. We benefit from using a very circuitous internal Hydrogen flow path for the propellant to make contact with, so that it uniformly absorbs the externally applied heating from the Sun. Again, this means we don't have temperature gradients quite as high as a nuclear thermal engine. Some of the peripheral fuel pins in the nuclear thermal engine could be 500K+ cooler than the fuel pins in the center of the core, with the end result that much hotter and cooler gas mixes together ahead of the engine's throat after it exits the fueled portion of the reactor core. That mixing is where the "significant heat loss" I spoke of comes from.

3. We can achieve a higher expansion ratio, which further improves Isp, because we have this monolithic "hollow" Graphite channel wall nozzle / engine structure. The length of said nozzle is somewhat less critical when there is no nuclear reactor core positioned ahead of the nozzle. NERVA XE Prime was 6.9m in length and was to use a mechanically extendable uncooled nozzle extension made from Carbon. Since our engine uses external surface heating only, we can transform our engine into a "giant nozzle" 6.9m long, and we've given up nothing to the original NERVA design in terms of overall engine length.

4. Our solution will be heavier because it uses fiber optics and/or mirrors to collect photons from the Sun, but in terms of cost and the difficulty of working with the materials, nothing we're using is a government-controlled / furnished material or classified. Maybe the Hafnium Carbide is a government-restricted aerospace material, but if so we can fall back on Zirconium Carbide. Mylar, Graphite, fiber optics, and such do not even qualify as restricted items. There will be some trade secrets and Uncle Sam will declare that the engine is ITAR-restricted if it receives any government funding, but that's about it. The composite Hydrogen propellant tanks qualify as ITAR-restricted rocketry tech.

5. In terms of cost, I've little doubt that a solar thermal engine will be far less costly than a nuclear thermal engine, thus more attainable if we pursue this technology. We assert that the primary reason for continued development of nuclear thermal engines is the Isp benefit that nuclear thermal can achieve. We can achieve the same or slightly superior Isp using direct solar heating, and there will be fewer objections to the launch. There's nothing truly remarkable about the materials or fuels being used here. It's all very standard stuff that is mass-produced here in America and elsewhere. Foreign allied customers can benefit from the ability to place very heavy satellites in high orbits and send less mass-constrained space probes wherever they so choose, because the mass and cost associated with doing so are half those of the best LOX/LH2 engines. Heavier propellants such as Methane, Ammonia, or CO2, which are easier to store and generate more thrust, can be used to achieve Isp figures similar to more complex LOX/LCH4 or LOX/LH2 bi-propellant chemical rocket engines. The solar collector size and thrust generated can be tailored to desired specifications. We're starting with either a 1,000kgf or 2,000kgf engine because that is sufficient to send a truly massive interplanetary transport vehicle on its way, in about a month.

#211 Re: Meta New Mars » kbd512 Postings » 2025-04-08 14:39:03

tahanson43206,

Flow velocity, from core entrance to core exit, and thus residence / "dwell" time, can be computed by using the acceleration across the 80cm fuel pin length containing the hollow "tubes" for the Hydrogen propellant to flow through.

a = (Vf^2 - Vi^2) / (2 * d)

a = ((800m/s)^2 - (100m/s)^2) / (2 * 0.8m)

a = (640,000 - 10,000) / 1.6

a = 630,000 / 1.6

a = 393,750m/s^2

Under uniform acceleration, the "dwell time" is t = (Vf - Vi) / a = 700m/s / 393,750m/s^2, so about 0.00178 seconds (1.78 milliseconds). Equivalently, t = 2d / (Vi + Vf) = 1.6m / 900m/s. That's how long the Hydrogen gas takes to move from core entrance to core exit, based upon the acceleration rate through the 80cm / 0.8m long heat transfer "tubes" drilled through the Graphite fuel pins in the core of that nuclear thermal rocket engine.

If we have a 10m long channel wall nozzle for external solar heating to be applied to, then we have:

a = ((800m/s)^2 - (100m/s)^2) / (2 * 10m)

a = 630,000 / 20

a = 31,500m/s^2

That means "dwell time" is 700m/s / 31,500m/s^2, so about 0.0222 seconds. That's dramatically more dwell time: 12.5X, in direct proportion to the 12.5X longer flow path.

The actual spiral flow path I envisioned would be at least 100m in length, possibly 300m or more, so roughly 0.222 to 0.667 seconds of dwell time for the same inlet and outlet velocities.

That means our solar thermal rocket's propellant stream would easily have sufficient time and distance to get the propellant up to temperature, plus a lot more residence / dwell time than a prototypical nuclear thermal rocket engine's fuel pin length.
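The dwell-time arithmetic can be sketched in a few lines of Python, assuming uniform acceleration between the 100m/s inlet and 800m/s outlet velocities taken from the INL report. Under that assumption t = (Vf - Vi) / a, which simplifies to t = 2d / (Vi + Vf), so dwell time grows in direct proportion to flow path length. The function names are illustrative only, and real flow through a heated channel will not accelerate perfectly uniformly.

```python
# Dwell-time sketch for a heated propellant channel, assuming uniform
# acceleration between a fixed inlet velocity and a fixed outlet velocity.

def acceleration(v_in: float, v_out: float, length_m: float) -> float:
    """Uniform acceleration (m/s^2) from v_out^2 = v_in^2 + 2*a*d."""
    return (v_out**2 - v_in**2) / (2.0 * length_m)


def dwell_time(v_in: float, v_out: float, length_m: float) -> float:
    """Transit time (s) under uniform acceleration: t = (v_out - v_in) / a."""
    return (v_out - v_in) / acceleration(v_in, v_out, length_m)


V_IN, V_OUT = 100.0, 800.0  # m/s, ~400K inlet and ~2,800K outlet per the INL report

for length in (0.8, 10.0, 100.0, 300.0):
    a = acceleration(V_IN, V_OUT, length)
    t = dwell_time(V_IN, V_OUT, length)
    print(f"{length:6.1f} m channel: a = {a:10.1f} m/s^2, dwell time = {t:.4f} s")
```

Because velocities are held fixed, stretching the channel lowers the acceleration by the same factor it raises the length, which is why the time ratio simply tracks the length ratio.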

If the propellant channel is only a few millimeters in diameter and that flow channel winds around the circumference of our large Graphite nozzle, the actual flow path could be very long, which means we have very uniform heating, improved Isp, greatly reduced thermal-hydraulic stress, and an all-around easier design proposition because the Graphite is homogeneous, meaning not loaded with any highly reactive Uranium particles, not subject to rapid fission product release.

Graphite's room temperature tensile strength ranges from 8MPa (1,160.3psi) to 30MPa (4,351.13psi), and is highly dependent upon how fine the grain size of the Graphite happens to be. Unlike metals, which get weaker at high temperatures, Graphite gets stronger up to a temperature of about 3,200K or so, so at 2,800K I think its tensile strength (ability to resist being pulled apart) and flexural strength (ability to resist bending) are about 1.5X greater than at room temperature. NERVA operated at pressures up to 550psi, although about half that at stabilized full power output. Startup and shutdown are what induce a lot of thermal-hydraulic stress. Graphite at room temperature can adequately resist NERVA operating pressures. A very fine grain sintered / molded structure, with strength closer to 30MPa, will easily resist the pressurization of the Hydrogen.
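To put the pressure and strength figures side by side, here is a rough sketch: convert 550psi to MPa, then estimate the peak hoop stress at the inner wall of a pressurized channel with the Lamé thick-walled cylinder relation, sigma = P * (ro^2 + ri^2) / (ro^2 - ri^2), for internal pressure only. The channel radii are hypothetical, and the calculation ignores thermal stresses, which the text singles out as the bigger problem during startup and shutdown.

```python
PA_PER_PSI = 6_894.757  # pascals per pound per square inch


def psi_to_mpa(psi: float) -> float:
    """Convert psi to MPa."""
    return psi * PA_PER_PSI / 1e6


def bore_hoop_stress_mpa(pressure_mpa: float, r_inner: float, r_outer: float) -> float:
    """Lame hoop stress at the bore of a thick-walled cylinder, internal pressure only."""
    return pressure_mpa * (r_outer**2 + r_inner**2) / (r_outer**2 - r_inner**2)


pressure = psi_to_mpa(550.0)  # peak NERVA operating pressure from the text
# Hypothetical channel: 1.5 mm bore radius, 5 mm outer radius of surrounding Graphite.
stress = bore_hoop_stress_mpa(pressure, 1.5e-3, 5.0e-3)
print(f"Pressure {pressure:.2f} MPa -> bore hoop stress {stress:.2f} MPa")

strengths = {
    "8 MPa, coarse grain, room temp": 8.0,
    "30 MPa, fine grain, room temp": 30.0,
    "30 MPa x 1.5, fine grain, ~2,800K (the text's estimate)": 45.0,
}
for label, strength in strengths.items():
    print(f"{label}: margin {strength / stress:.1f}x")
```

For a small bore in a thick block the Lamé factor approaches 1, so the bore hoop stress is close to the gas pressure itself; that is why comparing pressure directly against tensile strength is a reasonable first-order check.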

Do you recall how I said operating at very low pressures and very high temperatures confers a substantial Isp advantage (reduced propellant mass flow for a given thrust)?

That is what we're going to do with this engine design. We want reduced pressures and higher temperatures. Higher operating pressure leads to smaller nuclear reactor cores, thus reduced reactor weight required to provide that internal heating, at the expense of Isp. At this thrust level, we apparently do not need a "core" at all. We can do all of our heating inside of a large nozzle, which provides a large surface area for even distribution of that 40MWth (1,000kgf) or 77MWth (2,000kgf).
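As a rough check on how thrust, Isp, and thermal power relate, the kinetic power carried by the exhaust jet is P = 0.5 * F * ve, with ve = g0 * Isp. The heat delivered to the propellant has to be at least that large, so a given thermal power also implies an upper bound on Isp at a given thrust. This sketch assumes standard gravity for the kgf conversion and ignores all losses, so its outputs are ideal limits rather than design figures.

```python
G0 = 9.80665  # standard gravity, m/s^2; also the kgf-to-newton factor


def jet_power_w(thrust_kgf: float, isp_s: float) -> float:
    """Ideal exhaust kinetic power (W): P = 0.5 * F * ve, with ve = g0 * Isp."""
    thrust_n = thrust_kgf * G0
    exhaust_velocity = isp_s * G0
    return 0.5 * thrust_n * exhaust_velocity


def ideal_max_isp_s(thermal_power_w: float, thrust_kgf: float) -> float:
    """Isp (s) reached only if every watt of heat became jet kinetic energy."""
    return 2.0 * thermal_power_w / (thrust_kgf * G0 * G0)


for thrust_kgf, power_w in [(1_000.0, 40e6), (2_000.0, 77e6)]:
    print(f"{thrust_kgf:,.0f} kgf at {power_w / 1e6:.0f} MWth: "
          f"ideal max Isp = {ideal_max_isp_s(power_w, thrust_kgf):.0f} s")
print(f"Jet power for 1,000 kgf at 900 s Isp: {jet_power_w(1_000.0, 900.0) / 1e6:.1f} MW")
```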

If we further concentrate that Sunlight beyond what was actually used in the 1970s Rockwell experiments using mylar parabolic dish mirrors, then we can increase temperature beyond all known material limits, if we so choose. Rockwell had no trouble at all concentrating the sunlight to a "spot size" that would cause Graphite to sublime. It was more a case of "don't concentrate the sunlight so much that you vaporize your rocket engine". Therefore, the tolerable sunlight concentration limit sets the upper bound on Isp and the lower bound on the surface area of the rocket engine nozzle to spread the heat over. We can easily go hot enough to vaporize Tungsten, but we cease to have a rocket engine at that point.

The solar thermal rocket engine design imperatives are as follows:

1. Build the channel wall nozzle with enough surface area so as not to sublime the Graphite into space when struck by the giant solar death ray, which implies increasing the nozzle's expansion ratio for the express purpose of providing more surface area for the heat to be applied to, until weight starts to become a factor. Graphite is pretty light stuff, relative to virtually all structural metals, and as strong as it needs to be. The Isp benefit from a greater expansion ratio is the "gravy" you get for doing this. Nozzle kinetic efficiency with Hydrogen propellant will bound this as well. Only certain geometries and lengths, for given chamber pressures, are permissible.

2. Operate at as low a pressure and as high a temperature as material thermal limits and reasonable nozzle weight will allow for, because this can dramatically increase Isp, in the range of 40s (5bar at 3,000K) to 200s (5bar at 4,000K). This is due to the percentage of atomic vs molecular Hydrogen generated by the extreme heat causing thermal dissociation of the propellant. If you operate at 60bar, then you only do that to decrease the core volume / length of a nuclear reactor, knowing full well that you're "losing heat", but greatly reducing core mass and size at the same time, because enriched Uranium and Beryllium are very expensive and shielding is very heavy.

They actually performed a study on this and concluded that low pressure / high temperature was "most optimal". The paper is on OSTI's website (scroll down to Page #8, which is actually 9 of 13 in the PDF file, and the next few pages, to see what I'm talking about):

ULTRA-HIGH TEMPERATURE DIRECT PROPULSION

3. We're going to optimize the internal channel geometry and shaping to induce turbulent flow for the express purpose of picking up heat more efficiently. That resistojet rocket's internal propellant flow channel geometry, with its external resistive heating elements, did the same thing to improve heat transfer efficiency, increasing the propellant's "dwell time", which resulted in higher Isp. Our reason for adopting their internal geometry is more about uniform heating and reduction of thermal-hydraulic stress than it is about "getting more juice per squeeze". We already have more than enough flow path, as evidenced by the very short length of the fuel pins in nuclear thermal rockets. What all NTR designs had in common, which that OSU paper pointed out and sought to correct, was a relatively poor job of uniform heating, which both reduced effective Isp (sometimes by quite a bit) and made their Graphite fuel assemblies weaker and more subject to cracking failure from thermal-hydraulic stresses caused by huge temperature gradients.

#212 Re: Meta New Mars » kbd512 Postings » 2025-04-08 03:24:37

tahanson43206,

1. Flow rate is dependent upon temperature, pressure, and thermal power input into the propellant (a 1,000MWth engine is going to flow more propellant than a 100MWth engine, so if temp / pressure / throat area / expansion ratio are all proportional, we would expect 10X greater propellant flow rate).

2. Engine data for a real engine (XE Prime) and proposed engine for flight testing (Alpha) appears below:

NERVA XE Prime

Core power output: 1,137MWth

Weight (complete engine): 18,144kg

Thrust (vac): 246,663N

Isp (vac): 841s

Hydrogen flow rate: 32.7kg/s (full output)

Core temp: 2,270K (full output)

Chamber pressure: 593.1psi

NERVA Alpha (first flight-ready engine design, never built)

Core power output: 367MWth

Weight (complete engine): 2,550kg

Thrust (vac): 72,975N

Isp (vac): 875s

Hydrogen flow rate: 15kg/s (full output); 95.5kg/s (startup); 15kg/s (shutdown)

Core temp: 2,880K (full output); 60K temp reduction to provide 2 full hours of operation without excessive fuel loss from erosion

Chamber pressure: not provided

Thermal stress limit: 83K/s (max heating rate ramp-up to prevent thermal over-stress of the Graphite fuel rods)

This document contains Hydrogen mass flow rate, heat transfer surface area, inlet velocity, outlet velocity, and fuel assembly length data:

Idaho National Laboratory - Scoping Analysis of Nuclear Rocket Reactor Cores Using Ceramic Fuel Plates - January 2020

H2 inlet velocity is 100m/s (at 400K), outlet velocity is 800m/s (at 2,800K), the fuel assembly length is 80cm, as found on Page 49.

Check out how radically Hydrogen's thermal conductivity increases between 400K and 3,000K on Page 50 of the above document.

I bet that AI program isn't accounting for the increase in Hydrogen's thermal conductivity with temperature, nor for pressure or the surface area of the propellant channel. In any event, this detailed data only reinforces the idea that what I proposed doing with the engine geometry will work, and whatever that AI program is telling us about needing a 1.4km long flow channel to provide the necessary residence time is an error based upon some very simplistic assumptions about geometry and flow that don't come close to approximating a real engine design.
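Point 1 can be illustrated with the XE Prime figures: dividing core power by Hydrogen flow rate gives the energy absorbed per kilogram of propellant, about 34.8MJ/kg. Assuming that figure carries over unchanged (same inlet and outlet temperatures, which is only roughly true, since the solar engine targets hotter gas than XE Prime's 2,270K core), flow scales linearly with thermal power. Applied to the 40MWth and 77MWth figures quoted for the solar engine, this sketch gives roughly 1.15kg/s and 2.21kg/s; hotter exhaust absorbs more energy per kilogram, which would pull those numbers down somewhat.

```python
# Scaling sketch: hydrogen flow rate proportional to thermal power, assuming the
# energy absorbed per kilogram of propellant stays the same as in NERVA XE Prime.

XE_PRIME_POWER_W = 1_137e6  # core power output, W
XE_PRIME_FLOW_KG_S = 32.7   # hydrogen flow rate at full output, kg/s

# Energy absorbed per kg of hydrogen implied by the XE Prime figures (J/kg).
ENERGY_PER_KG_J = XE_PRIME_POWER_W / XE_PRIME_FLOW_KG_S


def scaled_flow_rate(thermal_power_w: float) -> float:
    """Estimated hydrogen mass flow (kg/s) for a given thermal power input."""
    return thermal_power_w / ENERGY_PER_KG_J


print(f"Energy absorbed per kg: {ENERGY_PER_KG_J / 1e6:.1f} MJ/kg")
for label, power_w in [("1,000kgf solar engine", 40e6), ("2,000kgf solar engine", 77e6)]:
    print(f"{label} ({power_w / 1e6:.0f} MWth): {scaled_flow_rate(power_w):.2f} kg/s")
```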

#213 Re: Interplanetary transportation » Focused Solar Power Propulsion » 2025-04-07 13:34:30

To express more precisely and concisely what the engineers did in the Rover / NERVA Programs to adequately heat the Hydrogen before it expands through the throat and expansion bell / nozzle at hypersonic velocities:

Nuclear thermal rocket engines use numerous long (1m to 2m), small diameter (19mm in the final reactor designs, IIRC) "tubes". These are actually carefully designed frustums, shaped according to thermal-hydraulic analyses of Hydrogen gas density and volume during heating and expansion. This apparently creates a "choked flow" condition that allows Hydrogen to enter the core at about Mach 0.1 and be heated to temperatures of 3,000K or greater, with its velocity subsequently increasing to Mach 11 as the low pressure / high temperature gas exits the bottom of the nuclear reactor core. It then flows into a comparatively very narrow throat, and rapidly expands and cools as it exits through the nozzle.

Operating at very low pressures and very high temperatures produces a substantial improvement in Isp. Higher propellant pressures, however, lead to smaller core sizes, at the expense of Isp and erosion rates. Higher temperatures also lead to faster erosion rates. You have a series of trade-offs to make when deciding what temperatures and pressures to operate at, bounded by known material limitations. Graphite and Hafnium Carbide are two of the best known materials for the engine, because they won't melt or sublime at 3,000K. I think 4,000K is near the achievable limit using known solid materials suitable for these engines with Hydrogen propellant heated to that temperature. Hafnium Carbonitride has a melting point of 4,273K, for example, but producing an engine purely from that material, so that it could survive the heating without melting, and without active cooling, would be cost-prohibitive. Therefore, we're only considering the use of molded / 3D printed (laser-sintered) Graphite with Zirconium Carbide or Hafnium Carbide surface coatings on internal surfaces for hot Hydrogen resistance, because Graphite is relatively cheap and easy to mold, and said surface coatings can be applied with great precision to complex geometry parts. Using 3D printing and Carbide aerospace coatings, a Graphite engine requires no active cooling to resist the target temperatures, and its mechanical properties (tensile and flexural strength) modestly improve over room temperature mechanical properties.

The aforementioned tube and engine geometry creates a "choked flow" condition that provides the propellant residence time in the core required to pick up heat. This has two deleterious effects on performance and service life, however. It reduces Isp due to the propellant cooling that occurs between the core exit and the throat entrance, and it causes more rapid erosion of the protective Carbide coatings that resist chemical attack from very hot flowing Hydrogen. Niobium Carbide, Zirconium Carbide, or Hafnium Carbide surface coatings are required to protect the Graphite from that severe chemical attack, and they reduce the rate of surface erosion by more than an order of magnitude. Unprotected Graphite is essentially "trashed" after a single full duration engine firing, on the order of 45 minutes to 1 hour. That means these advanced ceramic surface coatings are not optional for the solar thermal rocket engine.

For those who are unfamiliar with a "frustum" shape, it's a cone with the "pointy end" sliced off parallel to its base. Whereas a cone has a "point" at one end and a "flat" base, a frustum has two flat parallel surfaces, one at each end. More succinctly, a frustum is a modified "tube" / cylinder with a narrower diameter end and a larger diameter end, so it's not perfectly cylindrical.
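Since the channel wall is the surface the Hydrogen picks up heat from, the standard frustum formulas for volume and lateral (wall) area are a handy way to compare channel shapes. A minimal sketch; the 1.0mm and 1.5mm radii over a 1m length in the example are hypothetical, not NERVA dimensions.

```python
import math


def frustum_volume(r_small: float, r_large: float, height: float) -> float:
    """Volume of a conical frustum: (pi * h / 3) * (R^2 + R*r + r^2)."""
    return math.pi * height / 3.0 * (r_large**2 + r_large * r_small + r_small**2)


def frustum_wall_area(r_small: float, r_large: float, height: float) -> float:
    """Lateral (wall) area of a conical frustum: pi * (R + r) * slant height."""
    slant = math.hypot(r_large - r_small, height)
    return math.pi * (r_large + r_small) * slant


# Hypothetical 1 m channel: 1.0 mm radius at one end and 1.5 mm at the other,
# compared with a straight 1.0 mm radius cylinder of the same length.
for label, r_small, r_large in [("cylinder", 1.0e-3, 1.0e-3), ("frustum", 1.0e-3, 1.5e-3)]:
    area_cm2 = frustum_wall_area(r_small, r_large, 1.0) * 1e4
    volume_cm3 = frustum_volume(r_small, r_large, 1.0) * 1e6
    print(f"{label}: wall area {area_cm2:.1f} cm^2, volume {volume_cm3:.2f} cm^3")
```

With equal radii the formulas reduce to the cylinder's 2*pi*r*h and pi*r^2*h, and with a zero small radius they reduce to a cone, which makes them easy to sanity-check.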

The use of common materials with novel (increasingly "less novel" as they're applied to more and more gas turbine engine hot section parts and reentry heat shields) aerospace coatings, suitable to the design operating conditions, is a nod to development and fabrication costs. We must have the ability to manufacture the parts and test them on the ground, as was done during the initial work on the solar thermal rocket. We have better coatings and application methods than they did back in the 1960s and 1970s, but the real "ace" we hold over our equally creative ancestors is computer modeling and simulation, which allows us to try thousands or millions of minor variations to arrive at something approaching "optimal" for our purposes. To the list of "aces" up our sleeves, we can add computer-aided manufacturing, including 3D printing of structures in various materials, and AI-enabled optimization and physics analysis software, which simply did not exist back then. Boilerplate-level design work that previously took months or even years can be done in the span of mere minutes. We tend to analyze things to death these days, but if we take an iterative approach to the design, we can arrive at a minimum viable product much faster.

The compelling "first use case" for a solar thermal rocket is a fully reusable orbital space tug, refueled on-orbit by Starship propellant tankers, to push payloads between LEO and GEO. After you arrive in GEO, it only takes a small additional push to go anywhere else. It's most economical if the space tug stays near Earth and the payload uses a smaller onboard propulsion system to achieve escape velocity from GEO.

As a "for example", if you want to push a 20,000kg payload to GEO, you need a giant rocket to do that without a high-Isp space tug, or your payload spends months spiraling out from LEO using much lower-thrust ion engines. A small and simple Falcon 9 can get your 20t satellite to LEO, and then a space tug with more than double the propellant mass efficiency of LOX/LH2 can send that heavy payload on its way for a fraction of the launch cost. You can afford to make your satellite from cheap / economical / durable steel, without too much concern over launch costs (which are far more related to "fuel mass cost" than "payload mass cost"), thereby saving yourself a very healthy amount of fabrication cost over light alloys and composites, because there's not a compelling benefit to using expensive materials if the propellant mass cost of that "push" to higher orbits or other planets is less than half that of LOX/LH2. Alternatively, if you want to spend the money on lighter materials, then you can deliver multiple satellites to GEO for the cost of one satellite.

There's no real flexibility on this with pure chemical propulsion because the Isp, even with LOX/LH2, is simply too low. Nearly all of your vehicle mass ends up being propellant instead of payload. Once you move just a little bit beyond 1,000s of Isp, you arrive at 50/50 payload and propellant mass fractions. Long range airliners can be up to 35% fuel by weight, so our fuel vs payload split starts to look a bit more like that of a large commercial transport aircraft than that of a conventional chemical rocket, which is always 90%+ propellant by total vehicle weight.
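The payload vs propellant argument follows from the ideal (Tsiolkovsky) rocket equation: the propellant fraction of initial mass is 1 - exp(-dv / (g0 * Isp)). The 50/50 point is reached at dv = g0 * Isp * ln 2, about 6.8km/s for 1,000s of Isp. This sketch assumes a single idealized stage with no gravity or steering losses; the 4,300m/s LEO-to-GEO figure is a rough, hypothetical mission delta-v chosen only for illustration.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def propellant_fraction(delta_v_m_s: float, isp_s: float) -> float:
    """Fraction of initial vehicle mass that must be propellant (ideal rocket equation)."""
    return 1.0 - math.exp(-delta_v_m_s / (G0 * isp_s))


def break_even_delta_v(isp_s: float) -> float:
    """Delta-v (m/s) at which propellant is exactly half of the initial mass."""
    return G0 * isp_s * math.log(2.0)


# Hypothetical mission delta-v, roughly LEO to GEO; an assumption for illustration only.
LEO_TO_GEO_DV = 4_300.0

for isp in (450.0, 900.0, 1_000.0):
    print(f"Isp {isp:5.0f} s: propellant fraction {propellant_fraction(LEO_TO_GEO_DV, isp):.2f}, "
          f"50/50 break-even delta-v {break_even_delta_v(isp):,.0f} m/s")
```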

#214 Re: Science, Technology, and Astronomy » Google Meet Collaboration - Meetings Plus Followup Discussion » 2025-04-06 20:12:24

tahanson43206,

Edits:

Non-Uniform Heating Impact on Specific Impulse in Nuclear Thermal Propulsion Engines

Nuclear Reactors - Spacecraft Propulsion, Research Reactors, and Reactor Analysis Topics

Mechanical properties of molded graphite-modulus and strength

Scientists successful in 3D printing complex graphite parts with 97% purity

In our meeting, I asked about the idea that a very large / high expansion ratio "channel wall nozzle", 3D printed in Carbon / Graphite, with an enormous amount of internal surface area and volume for heating of the Hydrogen propellant, could provide sufficient residence time to allow the Hydrogen propellant to achieve 3,000K:

An incredible variety of internal heat transfer tube configurations are possible, if they prove beneficial:

NERVA Fuel Element Diagram:

Proposed NVTR Fuel Element Diagram:

#215 Re: Not So Free Chat » When Science climate change becomes perverted by Politics. » 2025-04-06 02:37:39

Void,

Rather than take anyone's word for it, let's do our own research here.

This is Jim Hansen's 1984 research paper (from University of Chicago because NASA's copy is nearly unreadable in some sections):

Climate Sensitivity: Analysis of Feedback Mechanisms, J. Hansen, A. Lacis, D. Rind, G. Russell, 1984

This is Pat Frank's 2019 research paper showing how any single error in climate sensitivity magnifies over time:

Propagation of Error and the Reliability of Global Air Temperature Projections, Patrick Frank, 2019

I kinda sorta understood why this was included, for purposes of explaining how input error magnifies over time, but I don't think it's directly relevant. The form of error expressed in Frank's paper would be quite serious if that's what the climate models were actually doing, but they don't appear to function in a way that would make Frank's work relevant to their outputs.

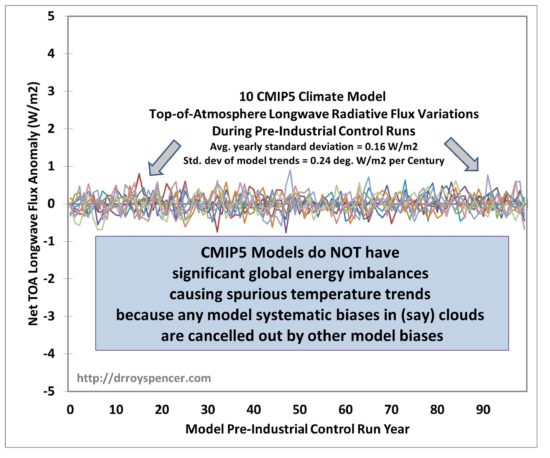

The reliability of general circulation climate model (GCM) global air temperature projections is evaluated for the first time, by way of propagation of model calibration error. An extensive series of demonstrations show that GCM air temperature projections are just linear extrapolations of fractional greenhouse gas (GHG) forcing. Linear projections are subject to linear propagation of error. A directly relevant GCM calibration metric is the annual average ±12.1% error in global annual average cloud fraction produced within CMIP5 climate models. This error is strongly pair-wise correlated across models, implying a source in deficient theory. The resulting long-wave cloud forcing (LWCF) error introduces an annual average ±4 Wm−2 uncertainty into the simulated tropospheric thermal energy flux. This annual ±4 Wm−2 simulation uncertainty is ±114 × larger than the annual average ∼0.035 Wm−2 change in tropospheric thermal energy flux produced by increasing GHG forcing since 1979. Tropospheric thermal energy flux is the determinant of global air temperature. Uncertainty in simulated tropospheric thermal energy flux imposes uncertainty on projected air temperature. Propagation of LWCF thermal energy flux error through the historically relevant 1988 projections of GISS Model II scenarios A, B, and C, the IPCC SRES scenarios CCC, B1, A1B, and A2, and the RCP scenarios of the 2013 IPCC Fifth Assessment Report, uncovers a ±15 C uncertainty in air temperature at the end of a centennial-scale projection. Analogously large but previously unrecognized uncertainties must therefore exist in all the past and present air temperature projections and hindcasts of even advanced climate models. The unavoidable conclusion is that an anthropogenic air temperature signal cannot have been, nor presently can be, evidenced in climate observables.

The math that Pat Frank produced accurately portrays the magnification of errors over time. He chose to look at the long-wave cloud forcing error associated with cloud cover, and propagated the cumulative error over the number of time steps the climate models simulate, with the end result that the temperature error from cloud cover alone results in a temperature uncertainty range of -8C to +16C.
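The error-growth idea can be sketched generically: if each projection step carries an independent uncertainty, the uncertainties combine in quadrature (root-sum-square), so n equal steps of +/- u accumulate to +/- u * sqrt(n). That is broadly the kind of propagation Frank's paper applies; this sketch uses a placeholder per-step value and does not reproduce his conversion from cloud forcing error (W/m^2) to temperature.

```python
import math


def root_sum_square(step_uncertainties):
    """Combine independent per-step uncertainties in quadrature."""
    return math.sqrt(sum(u * u for u in step_uncertainties))


def accumulated_uncertainty(per_step: float, n_steps: int) -> float:
    """Quadrature sum of n equal per-step uncertainties, i.e. per_step * sqrt(n)."""
    return root_sum_square([per_step] * n_steps)


# Placeholder per-step uncertainty of 1.0 (arbitrary units), not Frank's value.
for n in (1, 10, 50, 100):
    print(f"{n:3d} steps: +/- {accumulated_uncertainty(1.0, n):.2f}")
```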

That sort of "baked-in" energy imbalance would become very noticeable, and thankfully that is not what the climate models are doing, because they're forced to maintain energy balance at the beginning and end of the simulation runs. They are not forced into balance at every time step, which would produce a wild uncertainty range over enough time steps.

Please read this rebuttal to Frank's work from Roy Spencer:

Additional Comments on the Frank (2019) “Propagation of Error” Paper

Yesterday I posted an extended and critical analysis of Dr. Pat Frank’s recent publication entitled Propagation of Error and the Reliability of Global Air Temperature Projections. Dr. Frank graciously provided rebuttals to my points, none of which have changed my mind on the matter. I have made it clear that I don’t trust climate models’ long-term forecasts, but that is for different reasons than Pat provides in his paper.

What follows is the crux of my main problem with the paper, which I have distilled to its essence, below. I have avoided my previous mistake of paraphrasing Pat, and instead I will quote his conclusions verbatim.

In his Conclusions section, Pat states “As noted above, a GCM simulation can be in perfect external energy balance at the TOA while still expressing an incorrect internal climate energy-state.”

This I agree with, and I believe climate modelers have admitted to this as well.

But, he then further states, “LWCF [longwave cloud forcing] calibration error is +/- 144 x larger than the annual average increase in GHG forcing. This fact alone makes any possible global effect of anthropogenic CO2 emissions invisible to present climate models.”

While I agree with the first sentence, I thoroughly disagree with the second. Together, they represent a non sequitur. All of the models show the effect of anthropogenic CO2 emissions, despite known errors in components of their energy fluxes (such as clouds)!

Why?

If a model has been forced to be in global energy balance, then energy flux component biases have been cancelled out, as evidenced by the control runs of the various climate models in their LW (longwave infrared) behavior:

Figure 1. Yearly- and global-average longwave infrared energy flux variations at top-of-atmosphere from 10 CMIP5 climate models in the first 100 years of their pre-industrial “control runs”. Data available from https://climexp.knmi.nl/

Importantly, this forced-balancing of the global energy budget is not done at every model time step, or every year, or every 10 years. If that was the case, I would agree with Dr. Frank that the models are useless, and for the reason he gives. Instead, it is done once, for the average behavior of the model over multi-century pre-industrial control runs, like those in Fig. 1.

The ~20 different models from around the world cover a WIDE variety of errors in the component energy fluxes, as Dr. Frank shows in his paper, yet they all basically behave the same in their temperature projections for the same (1) climate sensitivity and (2) rate of ocean heat uptake in response to anthropogenic greenhouse gas emissions.

Thus, the models themselves demonstrate that their global warming forecasts do not depend upon those bias errors in the components of the energy fluxes (such as global cloud cover) as claimed by Dr. Frank (above).

That’s partly why different modeling groups around the world build their own climate models: so they can test the impact of different assumptions on the models’ temperature forecasts.

Statistical modelling assumptions and error analysis do not change this fact. A climate model (like a weather forecast model) has time-dependent differential equations covering dynamics, thermodynamics, radiation, and energy conversion processes. There are physical constraints in these models that lead to internally compensating behaviors. There is no way to represent this behavior with a simple statistical analysis.

Again, I am not defending current climate models’ projections of future temperatures. I’m saying that errors in those projections are not due to what Dr. Frank has presented. They are primarily due to the processes controlling climate sensitivity (and the rate of ocean heat uptake). And climate sensitivity, in turn, is a function of (for example) how clouds change with warming, and apparently not a function of errors in a particular model’s average cloud amount, as Dr. Frank claims.

The similar behavior of the wide variety of different models with differing errors is proof of that. They all respond to increasing greenhouse gases, contrary to the claims of the paper.

The above represents the crux of my main objection to Dr. Frank’s paper. I have quoted his conclusions, and explained why I disagree. If he wishes to dispute my reasoning, I would request that he, in turn, quote what I have said above and why he disagrees with me.

The rest of the "good to know" info detracts from Monckton's main point about correct application of the operations and units associated with a closed-loop dynamic control system algorithm. Jim Hansen's 1984 paper directly references Bode's 1945 publication, so it's pretty clear how the feedbacks are being treated, and Hansen explicitly states how he's using that work to represent the temperature feedback mechanism. His treatment does appear to be missing something. The diagram from Schlesinger's supporting paper in 1988 is definitely wrong.
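For readers unfamiliar with the feedback formalism being argued over, the textbook single-loop relation (the form usually traced to Bode's amplifier work) gives a closed-loop response of dT0 / (1 - f), where dT0 is the response with no feedback and f is the feedback factor. This sketch only shows why small changes in f matter so much as f approaches 1; whether Hansen's or Monckton's treatment maps onto it correctly is exactly the point in dispute, and the 1.2K no-feedback input is a hypothetical placeholder.

```python
def closed_loop_response(open_loop_response: float, feedback_factor: float) -> float:
    """Single-loop feedback: response = open_loop / (1 - f), valid only for f < 1."""
    if feedback_factor >= 1.0:
        raise ValueError("f >= 1 has no finite steady state in this simple model")
    return open_loop_response / (1.0 - feedback_factor)


NO_FEEDBACK_WARMING_K = 1.2  # hypothetical placeholder, for illustration only

for f in (0.0, 0.25, 0.5, 0.75, 0.9):
    gain = closed_loop_response(1.0, f)
    response = closed_loop_response(NO_FEEDBACK_WARMING_K, f)
    print(f"f = {f:.2f}: gain = {gain:5.2f}, response = {response:5.2f} K")
```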

The control system loop diagrams and equations from the various papers mentioned, from the 1980s to the present day, do appear to muck up either the units or do something weird with the control algorithm itself. That's easy to do, though. I had trouble understanding op-amp feedback circuits when I first learned about them as a child while reading about and then building radios. My father bought a series of books for me about basic electronics and radio theory. I then spent the next several years struggling to learn the collegiate level math presented in them. My mother was able to help, because she was a computer scientist who completed course work with a very heavy emphasis on math, which she actually had to use professionally.

My own conclusion about this is that both sides are trying way too hard to "debunk" the other, and both are failing to address real methodological issues. I would hope that climate scientists with billions of dollars on tap would have access to electrical engineering and mathematics experts who could easily spot such errors and correct them.

Monckton showed his math, and it doesn't appear to mangle the units or operations, which is great, but he didn't show the experimental results from the electronic control system analog he had built, representing the feedback math our climate models are supposedly based upon. What I would like to see, to settle this matter, is the climate models played forward for a thousand years to see whether all of them veer off towards a thermodynamic impossibility. If they do, then regardless of Spencer's point about energy balance being enforced at the start and end of the simulations, the models are still wrong, and the algorithms representing the feedback mechanisms need to be corrected.

I've asked my father to contact his brother about this to ask some pointed questions about what Monckton is pointing out. My uncle teaches stats at the University of Texas, and has done so for longer than I've been alive. This is his last year, and then he's retiring. He has expertise in developing mathematical models for dynamic control systems.

I want to get my hands on a copy of the paper Monckton's group published on their electronic temperature feedback apparatus.

It's going to take some time to go through the rest of the papers he referenced.

I have a hard time believing that nobody else found such errors, but stranger things have happened when ideology is involved.

#216 Re: Science, Technology, and Astronomy » Google Meet Collaboration - Meetings Plus Followup Discussion » 2025-04-05 18:06:06

GW,

My opinion is that we will use induced turbulent flow to do a better job of transferring the heat into the Hydrogen. Maybe we don't even need a porous graphite core as such, for a flow rate of only 1 to 2kg/s, and a hollow channel wall cylinder design will work better. If we need more channel wall surface area for super heating or extended residence time in the engine, then we can use our large 100:1 expansion ratio nozzle as part of our heat exchange surface area. This will keep the engine as light as possible.

I won't pretend to know what the optimal approach is, so I'm not ruling out any particular approach unless it results in impractical component sizes, performance requirements, or costs. The major parts of the propulsion system have to be transportable to orbit in a Starship, or they're not very practical. As far as materials are concerned, we have the materials of the NERVA Program (Graphite structure with Zirconium Carbide cladding) which are known to work, as well as newer materials (Hafnium Carbide cladding) known to work even better.

#217 Re: Meta New Mars » kbd512 Postings » 2025-04-05 14:44:10

tahanson43206,

Could the disconnect be the surface area that the 2kg of Hydrogen is in contact with?

That seems like the most probable explanation.

#218 Re: Science, Technology, and Astronomy » Long Service Life Energy Storage Infrastructure » 2025-04-05 14:41:54

Electrostatic energy storage devices, which we know as "super capacitors", are an example of a long-lived electrical / electronic form of energy storage. They can achieve 1M+ cycles while losing far less storage capacity than electro-chemical batteries. If we can figure out how to bring their energy density on par with Lead-acid batteries while reducing manufacturing cost, perhaps through mass production, then I see a future in which they serve as durable solid-state devices for the mass electrical energy storage our data centers and computers will need. Super capacitors are typically based upon Carbon and plastic materials, which are reasonably plentiful. Graphene-based lab-scale super capacitors have achieved 50Wh/kg to 80Wh/kg.
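The energy a capacitor stores follows E = ½·C·V². This also explains why a voltage-regulating converter is needed: terminal voltage falls as energy is drawn, and discharging to half the rated voltage releases 75% of the stored energy. The sketch below uses a hypothetical cell. Its capacitance, voltage, and mass are illustrative assumptions, not a specific product.

```python
def capacitor_energy_j(capacitance_f, voltage_v):
    """Energy stored in a capacitor: E = 1/2 * C * V^2, in joules."""
    return 0.5 * capacitance_f * voltage_v ** 2


def usable_fraction(v_max, v_min):
    """Share of the stored energy released when discharging from v_max to v_min."""
    return 1 - (v_min / v_max) ** 2


# Hypothetical cell, for illustration only
CELL_CAPACITANCE_F = 3000.0
CELL_VOLTAGE_V = 2.7
CELL_MASS_KG = 0.5

energy_wh = capacitor_energy_j(CELL_CAPACITANCE_F, CELL_VOLTAGE_V) / 3600
print(f"{energy_wh:.2f} Wh per cell, {energy_wh / CELL_MASS_KG:.1f} Wh/kg")
print(f"{usable_fraction(CELL_VOLTAGE_V, CELL_VOLTAGE_V / 2):.0%} usable down to half voltage")
```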

As modern computers become increasingly photonic rather than electronic, and photonic chips consume many times less electrical energy for a given compute capability, these advanced super capacitor devices can store the energy required to keep sophisticated AI-enabled supercomputing infrastructure operating. That holds even if the grid is less than totally stable, or worse, has to shrink because we cannot devote the personnel and logistical support to these "mega project" compute devices.

Computers are not going anywhere. They're here to stay. We do need to arrest the growth of power consumption associated with large data centers and AI, but we also need to power them through a combined power buffer / fast energy store that precisely regulates voltage and lets them keep operating without interruption.

#219 Re: Interplanetary transportation » Focused Solar Power Propulsion » 2025-04-05 13:51:17

tahanson43206,

That 1.4km figure is almost certainly a miscalculation of some kind. The flow path of Hydrogen gas through the core of the NERVA reactor was not 1.4km in length.
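One possible way to arrive at a very long flow path is to treat all of the required wetted area as a single channel. The sketch below uses Newton's law of cooling, Q = h·A·ΔT, to show how splitting the same area across many parallel channels shortens each one. The heat-transfer coefficient, temperature difference, and channel sizes are hypothetical, not NERVA values.

```python
import math


def required_area_m2(heat_w, h_w_per_m2_k, delta_t_k):
    """Newton's law of cooling rearranged: A = Q / (h * dT)."""
    return heat_w / (h_w_per_m2_k * delta_t_k)


def channel_length_m(area_m2, channel_diameter_m, n_channels):
    """Length of n parallel round channels whose combined wall area equals area_m2."""
    return area_m2 / (n_channels * math.pi * channel_diameter_m)


# Hypothetical inputs, for illustration only
Q_W = 40e6      # heat into the Hydrogen (40MWth case)
H = 20_000.0    # assumed convective coefficient, W/(m^2*K)
DT = 500.0      # assumed wall-to-gas temperature difference, K

area = required_area_m2(Q_W, H, DT)
for n, d in ((1, 0.025), (1_000, 0.0025)):
    print(f"{n} channel(s) of {d * 1000:.1f} mm: {channel_length_m(area, d, n):.2f} m each")
```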

Consider this nuclear thermal rocket engine design, with a 3,000K core temperature:

A COMPARISON OF PROPULSION SYSTEMS FOR POTENTIAL SPACE MISSION APPLICATIONS

A ground-based test article achieved 3,000K during the Rover / NERVA Program. I forget whether it was XECF, Phoebus-2A, or Pewee, but it was one of the reactors they ran in 1968. They experimented with Niobium Carbide and Zirconium Carbide to protect the Graphite (Carbon) fuel elements from excessive erosion in hot flowing Hydrogen, and achieved a dramatic drop in erosion rates over those two programs. Zircaloy is now a very common fuel rod cladding material used to protect UO2 pellets from hot, highly pressurized water in PWRs. The only downside is the Hydrogen gas that Zirconium produces when it reacts with very hot water or steam.

An Historical Perspective of the NERVA Nuclear Rocket Engine Technology Program

In our application we don't need to worry about radiation, but Graphite erosion from high-temperature flowing Hydrogen is a problem. BTW, the pressures used are fairly low relative to pump-fed chemical engines, and I learned that Graphite's tensile strength is about 2,000psi at the temperatures we're looking at. Max propellant pressure within the XE Prime reactor was about 450psi, with less than half that during steady-state operation after startup, so 10bar of pressure should not cause a problem for a solar thermal reactor. Our design's chamber pressure should probably emulate what was historically used during the NERVA Program, because we know that works, even with the materials available during the 1960s.
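The post mixes psi and bar, so a small conversion sketch puts the figures on one scale (1psi ≈ 6,894.757Pa, 1bar = 100,000Pa):

```python
PA_PER_PSI = 6894.757293168  # 1 psi in pascals (approximate)
PA_PER_BAR = 100_000.0       # 1 bar in pascals (exact)


def psi_to_bar(psi):
    return psi * PA_PER_PSI / PA_PER_BAR


def bar_to_psi(bar):
    return bar * PA_PER_BAR / PA_PER_PSI


print(f"XE Prime peak, 450 psi = {psi_to_bar(450):.1f} bar")
print(f"Proposed pressure, 10 bar = {bar_to_psi(10):.0f} psi")
print(f"Graphite tensile strength figure, 2,000 psi = {psi_to_bar(2000):.0f} bar")
```

A tensile strength and a gas pressure are not directly comparable: the wall stress they produce depends on channel geometry.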

The rocket stage diagram on Page 3 shows that the entire propulsion module is only 14.79m in length, most of which is the H2 propellant tank. The 320MWth reactor's core length is less than 1m, and core power is much closer to the 40MWth system we're envisioning here.

If we consider the 320MWth reactor design from the linked paper as an empirical example of operating at 3,000K:

320,000,000Wth / 67,000N ≈ 4,776.12Wth per Newton of thrust generated

40,000,000Wth / (4,776.12Wth/N) ≈ 8,375N

77,000,000Wth / (4,776.12Wth/N) ≈ 16,122N
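The scaling above can be written as a short function. Like the post, it assumes the smaller reactors keep the same watts per newton as the 320MWth reference. Since jet power is ½·F·v_e, that amounts to assuming the same exhaust velocity and the same thermal-to-jet conversion efficiency.

```python
# Reference point from the 320MWth design discussed above
REF_POWER_WTH = 320e6
REF_THRUST_N = 67_000.0


def scaled_thrust_n(power_wth):
    """Thrust at a given thermal power, assuming the reference watts-per-newton
    (i.e. the same exhaust velocity and conversion efficiency)."""
    watts_per_newton = REF_POWER_WTH / REF_THRUST_N
    return power_wth / watts_per_newton


for p in (40e6, 77e6):
    print(f"{p / 1e6:.0f} MWth -> {scaled_thrust_n(p):,.0f} N")
```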

#220 Re: Science, Technology, and Astronomy » Plastic Car Automobile Truck Diamond Carbon No Metals » 2025-04-04 18:57:46

Calliban,

We have commercialized 700bar tanks that withstand 25,000 pressure cycles. The use of stronger fibers and matrices could enable us to develop 1,000bar tanks. A 1,000 bar storage tank's energy density, even when combined with hot water, should match that of actual Lithium-ion battery packs, which are much heavier than the cells themselves. It's close enough to Lithium-ion that there ought not be much of an argument against compressed air.

A 700bar 350L CFRP H2 storage tank weighs 211kg. This is commercialized tech.

Source:

NPROXX 350L 700 bar Hydrogen Tank

From Wikipedia:

Thus if 1.0 m3 of air from the atmosphere is very slowly compressed into a 5 L bottle at 20 MPa (200 bar), then the potential energy stored is 530 kJ. A highly efficient air motor can transfer this into kinetic energy if it runs very slowly and manages to expand the air from its initial 20 MPa pressure down to 100 kPa (bottle completely "empty" at atmospheric pressure). Achieving high efficiency is a technical challenge both due to heat loss to the ambient and to unrecoverable internal gas heat. If the bottle above is emptied to 1 MPa, then the extractable energy is about 300 kJ at the motor shaft.

I presume 0.3MJ per 1m^3 of air compressed to 200bar means 1.05MJ per 1m^3 at 700bar and 1.5MJ per 1m^3 at 1,000bar.

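A 350L / 700bar tank holds roughly 245m^3 of atmospheric air (350L × 700), so it should then store 257.25MJ of energy, or 71,458Wh.

A 350L / 1,000bar tank holds roughly 350m^3 of atmospheric air, so it should then store 525MJ of energy, or 145,833Wh.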

If we further assert that only 70% of that potential energy can provide useful power input to an air turbine and flywheel, then we have 50kWh and 102kWh compressed air "batteries" that never lose capacity.
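The quoted Wikipedia figures match ideal-gas isothermal expansion, W = p·V·ln(p_start/p_end). For the bottle, 100kPa × 1m^3 × ln(200) ≈ 530kJ, and ln(20) gives ≈ 300kJ when it is emptied to 1MPa. The sketch below assumes ideal-gas, isothermal behaviour, although real air departs from ideal-gas behaviour at 700 to 1,000bar. It reproduces those figures and compares that model with the linear pressure scaling used above for a 350L tank. Under the isothermal model, the energy per cubic metre of intake air grows with the logarithm of pressure rather than linearly. It therefore gives roughly 104MJ at 700bar and 161MJ at 1,000bar, well below the linear estimates.

```python
import math

P_ATM_PA = 100e3  # the quoted example treats 100 kPa as atmospheric pressure


def isothermal_work_j(p_start_pa, volume_m3, p_end_pa):
    """Work released when an ideal gas at p_start filling volume_m3 expands
    isothermally down to p_end: W = p_start * V * ln(p_start / p_end)."""
    return p_start_pa * volume_m3 * math.log(p_start_pa / p_end_pa)


def linear_scaling_j(p_bar, tank_litres):
    """The post's presumption: 0.3 MJ per m^3 of intake air at 200 bar,
    scaled linearly with pressure (intake volume assumes ideal-gas behaviour)."""
    intake_m3 = tank_litres / 1000 * p_bar
    return intake_m3 * 0.3e6 * p_bar / 200


# Reproduce the quoted example: 1 m^3 of intake air in a 5 L bottle at 20 MPa
stored = isothermal_work_j(20e6, 0.005, P_ATM_PA)  # about 530 kJ
to_1mpa = isothermal_work_j(20e6, 0.005, 1e6)      # about 300 kJ

# Apply both models to a 350 L tank emptied down to 1 MPa
results = {}
for p_bar in (700, 1000):
    iso = isothermal_work_j(p_bar * 1e5, 0.350, 1e6)
    lin = linear_scaling_j(p_bar, 350)
    results[p_bar] = (iso, lin)
    print(f"{p_bar} bar: isothermal {iso / 1e6:.0f} MJ, linear scaling {lin / 1e6:.2f} MJ")
```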

Making composite gas storage tanks requires a lot less materials and energy than Lithium-ion batteries, and those tanks can store compressed air, Hydrogen, Methane, or Propane in a vessel that meets US DoT standards for compressed gas storage. There is no electro-chemical battery I'm aware of that will retain 100% of its original storage capacity over 25,000 cycles, and the inability of electro-chemistry to completely reverse the chemical processes that take place during charge and discharge virtually ensures that there never will be.

We have plenty of places along our coastlines where we can sink trompes into the ocean floor to compress air to between 400 and 600psi without having to dump a lot of waste heat from that initial compression. Further compressing that supply air to between 700 and 1,000bar then generates a more manageable quantity of waste heat, which can be converted into hot water just below its boiling point. Compressed air and hot water pipelines can then deliver those products to gas stations. Tanker cars could also deliver the energy, and use the same energy to power themselves.
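At best, the air pressure a trompe delivers is set by the hydrostatic head of the water column above its separation chamber. The rough sketch below estimates the head needed for the 400 to 600psi range. It assumes a seawater density of 1,025kg/m^3 and ignores losses.

```python
PA_PER_PSI = 6894.757293168  # 1 psi in pascals (approximate)
RHO_SEAWATER = 1025.0        # kg/m^3, assumed typical seawater density
G = 9.80665                  # m/s^2, standard gravity


def water_column_for_pressure_m(gauge_pa, density=RHO_SEAWATER):
    """Water column height whose hydrostatic head equals gauge_pa: h = p / (rho * g)."""
    return gauge_pa / (density * G)


for psi in (400, 600):
    head = water_column_for_pressure_m(psi * PA_PER_PSI)
    print(f"{psi} psi gauge -> about {head:.0f} m of seawater head")
```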

It's an elegantly simple solution to a complex energy storage and distribution problem, one that gives the average person who drives 50 to 100 miles per day or less a very inexpensive way to get to work. Since such cars don't need complex control systems, a guy with a few hand tools can work on them.

#221 Re: Not So Free Chat » Politics » 2025-04-04 12:20:38

RobertDyck,

World Trade Organization - Canada Part A.1 Tariffs and imports - 2022 to 2023

The World Trade Organization's records are a more trustworthy source for information on Canadian tariffs / import duties applied than the CBC or you. If you don't like those facts, then tell your leadership to start changing them.

#222 Re: Not So Free Chat » Politics » 2025-04-04 09:51:05

Let me put this in even simpler terms that maybe everyone can understand.

What happens to the rest of the global economy if the US collapses under the weight of all the debt we're accumulating through this toxic combination of massive trade deficits, insane money printing that's not backed by organic economic growth which would warrant increasing the M1 money supply, spending policies that are counter-productive at best, and unsustainable entitlements?

The problem isn't the taxes. We're already taxed to death. The problem is that our government keeps spending money it doesn't have by writing an ever-growing stack of IOUs, which we cannot possibly pay. Their spending policies make the rich ever-richer and the poor ever-poorer. Rather than investing in the local economy, most of the rich find every possible way to send our money and jobs overseas. We're not stupid; we know quite well what they're doing to us. But since they can and do buy off politicians from both parties to formulate policies intended to relegate the rest of us to serfdom, we could not find anyone at all to represent our interests until President Trump was elected.

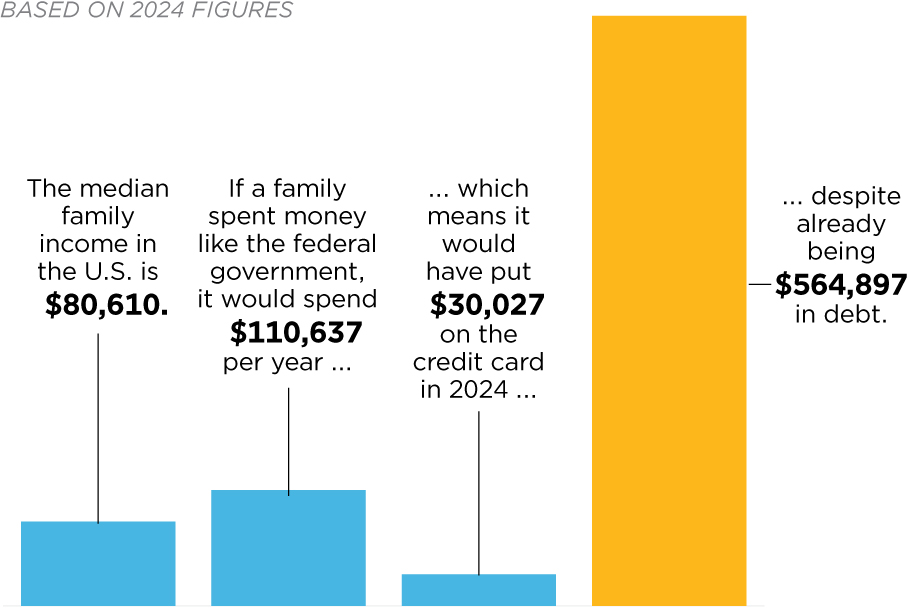

If American citizens spent their personal money like the US federal government spends everyone's money:

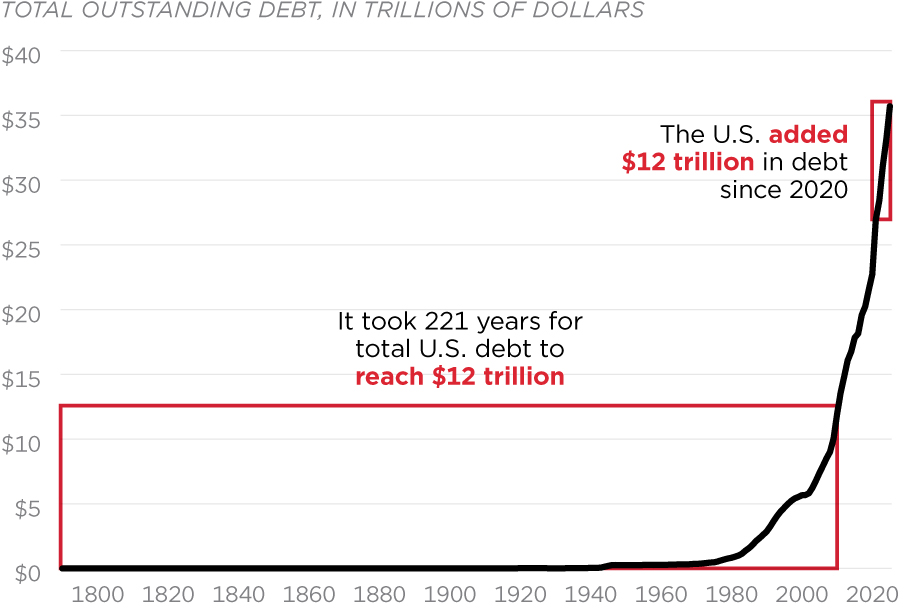

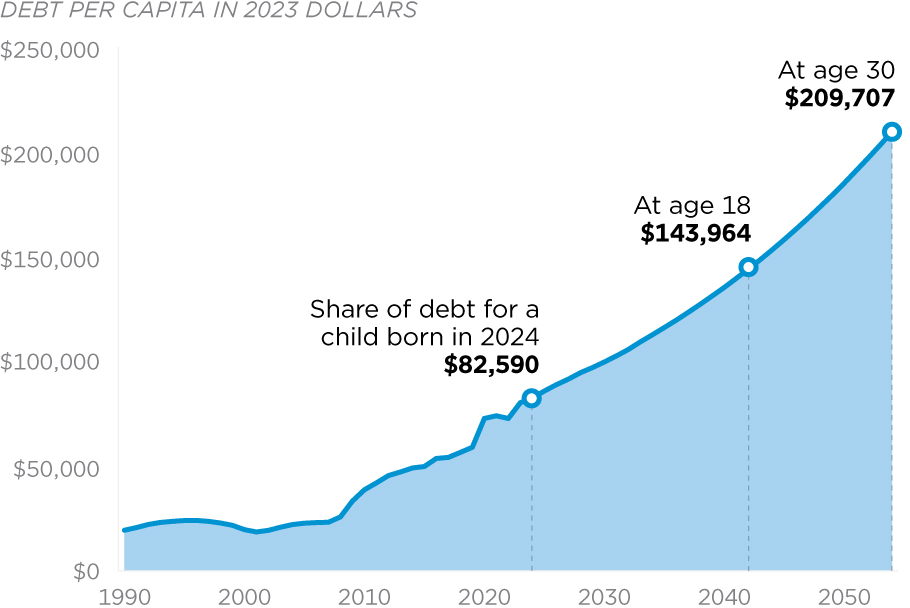

The Biden administration's addition to the US debt (the equivalent of 2 centuries worth of debt in only 4 years):

These people never met a dollar they didn't want to spend, and their pens are writing cheques we can't cash:

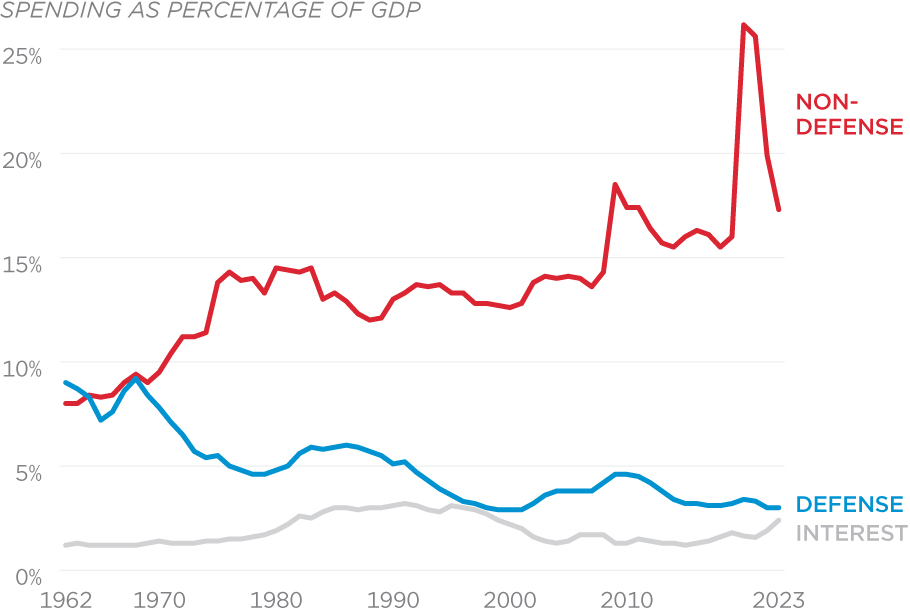

Defense spending vs every other form of government spending:

The interest on debt payments is 11% of the entire federal budget.

Spending on defense and every other discretionary program is 13% of the entire federal budget.

Spending on entitlements is now 68% of the entire federal budget.

We could cut defense spending to zero and it would have no effect whatsoever on our government's spending problem. They'd make absolutely certain that every dollar cut from defense was retained, and even increased, by the very next day; it would merely go to some other idiotic idea.

How much do you figure other countries will be able to sell to Americans who can no longer afford to pay for necessities and a government that's either defaulting on its debt or making its currency even more worthless than it already is?

Who else are you going to start trading with, and why not do that right now?

If the Russians or Chinese decide they want a piece of your country for themselves, who is going to stop them?

Everyone else is talking a good game about defense spending while not actually making the required investments in their own defense.

In many western nations, the local population is aging out. It's becoming an old folks home. Old folks are not going to drive consumption-led organic economic growth.

If America collapses, then what?

What's your end game?

#223 Re: Not So Free Chat » Politics » 2025-04-04 09:29:01

Calliban,

I'm after proximal solutions to our problems. I don't need the obvious problems pointed out to me, because they are so obvious that I already know what those underlying problems are and I know that they need to be addressed. Rather than continuing to dither while whining about the problems, I want to aggressively pursue workable solutions. I don't want any country to fail, least of all the country I live in, but that's exactly where we're headed if we keep doing what we've been doing.

I do not delight in the problems and sorrows of others, either. However, I do not fixate on the problems of others when I have so many problems of my own to work through. Furthermore, I do not see any point to complaining. I only care about solutions, and whether or not what is proposed approximates a workable solution. We've spent the past 20 years and 5 to 10 trillion dollars pursuing photovoltaics and wind turbines, which have thus far failed to do more than merely add a couple of percentage points to our overall energy mix. Since that has failed to live up to its promises, it's on to the next potential solution.

We've extensively evaluated the major technical points, merits, and demerits of various "new energy" alternatives that we require to lead us back to prosperity. I think we should push hard for those solutions while everyone is trying to figure out what's next, because they will continue to serve us and our children.

#224 Re: Not So Free Chat » Politics » 2025-04-03 23:22:40

RobertDyck,

The Fulcrum - Business Democracy - Just the Facts: Canadian Tariffs

Before President Trump took office, what tariffs did Canada impose on U.S. goods, and what percentages were there on various products exported to the US?

Before January 1st, Canada had tariffs on certain U.S. products, primarily in sectors protected under its supply management system. These included:

Dairy Products: Tariffs ranged from 200% to 300% on items like milk, cheese, and butter to protect Canadian dairy farmers.

Poultry and Eggs: Similar high tariffs were applied to chicken, turkey, and eggs.

Grain Products: Some grains faced tariffs, though these were generally lower than those on dairy and poultry.

These tariffs were part of Canada's long-standing trade policies to support domestic industries.

...

In 2023 and 2024, was the amount the U.S. imported from Canada greater or less than what the U.S. exported to Canada…i.e., What is the level of trade imbalance?

In both 2023 and 2024, the United States imported more from Canada than it exported to Canada, resulting in a trade deficit:

2023: U.S. exports to Canada totaled approximately $354.4 billion, while imports from Canada were about $418.6 billion, leading to a trade deficit of $64.3 billion.

2024: U.S. exports to Canada were around $349.4 billion, and imports from Canada reached $412.7 billion, resulting in a trade deficit of $63.3 billion.

Carry this trade imbalance forward for a decade and it comes to more than $600B, which is an enormous amount of money. Persistent trade deficits drain the wealth and jobs out of an economy, which is precisely what has been done to working class Americans, and we're tired of it.

The Fulcrum - Governance and Legislation - Trump's Trade Deficit

QUESTION: Has the overall United States trade deficit increased or decreased in the last 4 years?

The U.S. trade deficit has increased over the last four years.

Here's a brief overview:

2020: The trade deficit was $-626.39 billion.

2021: It increased to $-858.24 billion.