New Mars Forums

#101 Re: Not So Free Chat » Cancel SLS Question in early 2025 » 2025-06-13 16:05:59

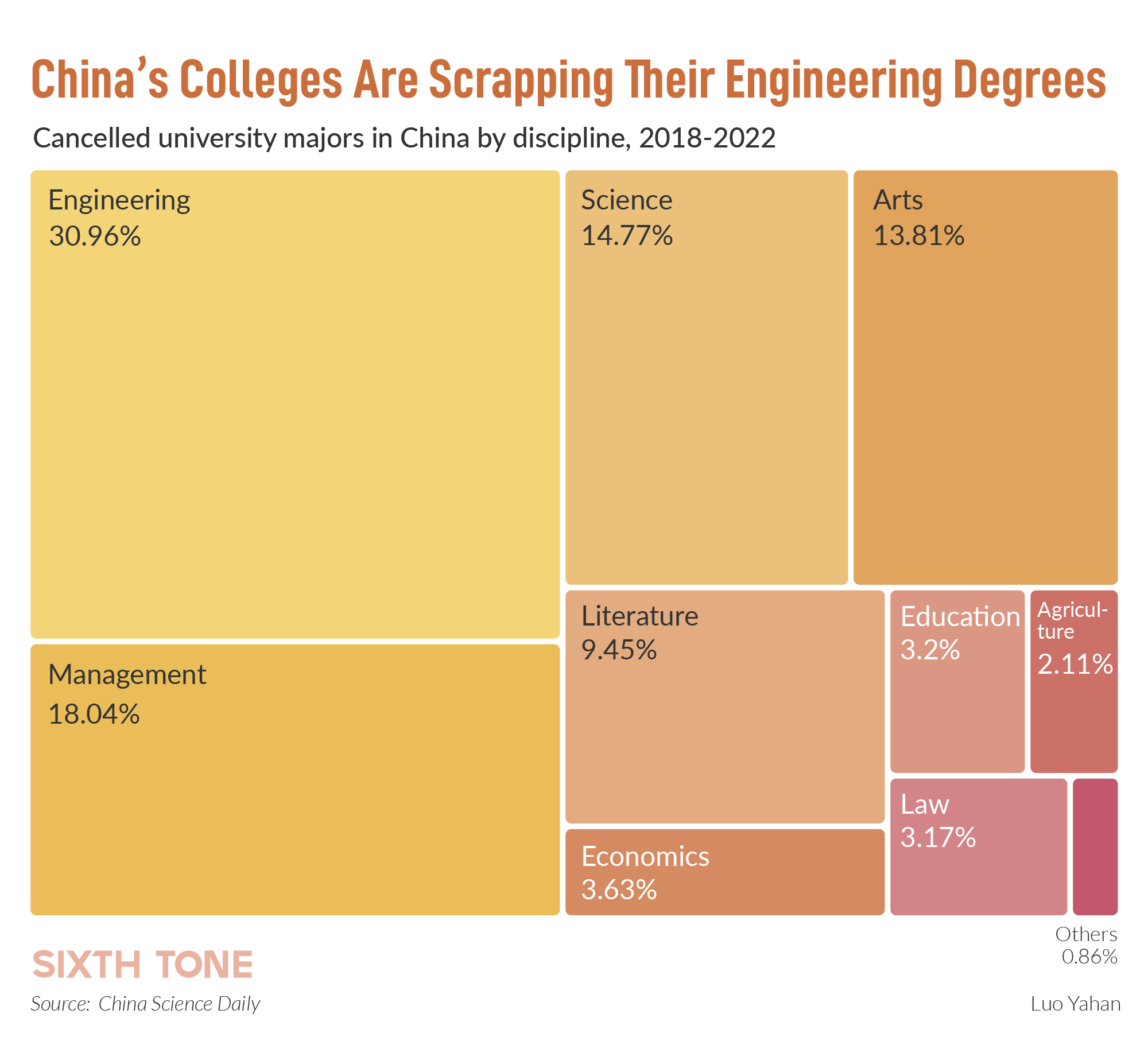

It sure looks like there's still plenty of money available for human exploration missions:

Here's where all of our "science money" went to:

Multiple estimates exist for the cost of illegal immigration to U.S. taxpayers during the Biden administration. Some estimates are:

$150.7 billion annually: This is a figure calculated by the Federation for American Immigration Reform (FAIR) and cited by multiple sources. FAIR estimates that this is the net cost after accounting for taxes paid by illegal immigrants.

$61.4 billion since Biden took office: This estimate comes from the House Budget Committee and refers to the cost of releasing migrants into the U.S. It is based on the number of migrants stopped and subsequently released, with a per-person cost of $8,776 per year.

$451 billion: The House Homeland Security Committee has estimated that taxpayers could pay up to $451 billion to care for those who have entered the U.S. unlawfully since January 2021.

After you've already made your choices about what to vote for, be prepared to live with the result. Everyone who voted for Joe Biden chose funding illegal aliens over science. The American taxpayers are not a piggy bank to raid for every nutty self-destructive idea. If you want more science funding, then stop forcing everyone to fund the people breaking the law. Stop funding people to riot, loot, and burn your cities to the ground while you're at it. There's nothing truly "creative" about self-destruction.

#102 Re: Science, Technology, and Astronomy » Rotating Detonation Engine » 2025-06-13 12:03:50

Void,

Absent exceptionally powerful new propulsion technologies, likely involving fusion, TSTOs like Starship are always going to have their place in the expanding menu of orbital launch vehicle options.

The trends are clear:

1. full reusability with minimal repair and refurbishment

2. streamlining of operational costs by reducing repair costs and time

3. substantial improvements to engine performance as we push towards the theoretically achievable Isp values and combustion efficiency limits for conventional chemical engines

4. exploration of non-rocket launch methods for lower value cargo such as propellants and metals

5. new materials for thermal protection

That said, no amount of materials science is going to overcome basic orbital flight physics. A TSTO will have a higher payload mass fraction than a SSTO, period. Thus far, no miraculous new strong but light material nor more efficient engine technology can compete with the ability to discard 2/3rds of your vehicle's inert / dry mass at booster burnout, because pretty much any tech you can apply to a SSTO can also be applied to a TSTO, to even greater effect, especially if full reusability is important. The only area where TSTO cannot compete with SSTO is operational simplicity: a SSTO nominally stays in one pretty piece, from launch to landing, and doesn't require multiple separate recovery, transport, and stacking operations. Starship Super Heavy partially reduces that problem by returning the booster to the pad it launched from. That's a great first step, but the upper stage still has to land somewhere and get stacked on top of a booster again. Whatever savings there are in terms of propellant cost reduction, they probably cannot offset all that added operational complexity.

SpaceX wants to launch Starship several times per day. With a HTHL SSTO, after the vehicle lands, it's towed back to the gate, inspected, gassed up, towed back to the runway, and then it's ready for its next flight. That might feasibly be done in the span of a few hours. Even a VTHL SSTO can be pretty straightforward to relaunch. A VTVL TSTO requires multiple pads with supporting infrastructure, if only because the upper stage cannot readily land on top of its own booster, multiple cranes are needed for stacking, inspecting two vehicles vs one must be done (only a major time imposition because it probably needs to be done in two or more different places for a large fleet operation), etc. All that adds up to more time, more touch labor, and more cost.

I want SpaceX to succeed, or anybody else to succeed for that matter, but launching this way 1,000+ times per year (at least 3,500 per year to send 1,000 Starships to Mars every 26 months, or about 10 per day) is going to be challenging without a standing army of support personnel and sprawling infrastructure investment. Doable? Yes. Very cheap and practical with limited head count? Almost certainly not, at the scale required: too many simultaneously moving pieces. Maybe AI and those Tesla automatons can coordinate the touch labor operations to the degree required, plus they can stay in a shelter on the pad, immediately ready to inspect the booster or upper stage the moment it's captured on the pad. We will never run recovery and vehicle inspection operations that way with humans for obvious reasons. Ground crew are not allowed anywhere near the vehicle during the launch and recovery.

All I know for sure about vertical launch and vertical landing is that it's very tricky to do correctly and routinely. It's not impossible, obviously, but far from simple and easy. Automated landing helps mightily, but what about 24/7/365 airline transport style operations? We don't do that with rocket launches. If a hair looks out of place, we stop the clock, reassess, and everyone involved weighs in before we proceed. We run launch operations that way to stave off disaster. The primary precious cargo we're transporting to Mars is lots and lots of people. Losing even one vehicle per year is unacceptable. Starship is clearly not an optimal solution for an airline transport style operation, even though it's acceptable for exploration missions. Neither a SSTO nor the upper stage of a TSTO alone is the correct solution for colonization. Refueling up to a half dozen times in LEO is impractical at the scale they want to implement. We need some kind of fusion-based in-space propulsion system for practical large scale colonization operations. A solid core NTR is woefully inadequate for that task.
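As a rough cross-check of the launch cadence quoted in this post, here is a minimal Python sketch. It assumes each Mars-bound Starship needs one launch of its own plus a fixed number of tanker flights; the value of six tanker flights is an assumption taken from the "half dozen" refuelings mentioned above, and the function name is hypothetical. Under that assumption the result is roughly 3,200 launches per year, or about 9 per day. Each additional tanker flight per ship adds roughly 460 launches per year, so the "at least 3,500" figure corresponds to a slightly higher tanker count.

```python
SHIPS_PER_WINDOW = 1_000      # from the post: 1,000 Starships per Mars window
WINDOW_MONTHS = 26            # from the post: one window every 26 months
TANKER_FLIGHTS_PER_SHIP = 6   # assumption: "up to a half dozen" refuelings in LEO


def launches_per_year(ships, tanker_flights, window_months):
    """Average yearly launch count if every ship needs its own launch plus tankers."""
    launches_per_window = ships * (1 + tanker_flights)
    return launches_per_window * 12 / window_months


per_year = launches_per_year(SHIPS_PER_WINDOW, TANKER_FLIGHTS_PER_SHIP, WINDOW_MONTHS)
per_day = per_year / 365

print(f"{per_year:,.0f} launches per year, {per_day:.1f} per day")
```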

#103 Re: Science, Technology, and Astronomy » n-body 3-body 3 body problem Celestial Mechanics » 2025-06-13 10:25:54

tahanson43206,

The general 3-body problem has no practical closed-form solution, so the equations of motion have to be solved numerically. Despite the fact that we're dealing with a chaotic system, the accuracy of the solution generally improves as the numerical solver takes more (smaller) time steps, so this sort of solution will always be computationally intensive.

However, I would propose a hybrid approach that provides a "good enough" approximation to use for mission planning:

1. For a fixed set of bodies (Sun, Earth, Mars), create a multi-dimensional table of coefficients describing the gravitational acceleration vector values for the bodies involved, based upon distance from the surface of the planet. This should be feasible because we're only going to launch from certain places, along specific trajectories, and the orbits of planetary bodies about the Sun are periodic in nature. The planets are so far apart from each other that their gravitational interactions are quite small, which is at least part of why the orbits are stable to begin with, although the overwhelming mass of the Sun obviously has the greatest influence.

We're not trying to store all the coefficients for every possible trajectory or point in space between all bodies involved, merely the ones describing the net acceleration effect along practical intercept trajectories (a "corridor of space" similar to a shipping lane) from orbits accessible from actual launch sites (KSC, Boca Chica, Vandenberg).

There's no fuel burn optimizer to tell a ship's Captain how to optimally power itself and what course to plot to go from Port of New York to Port of London, via the Pacific Ocean, for example, because no real world fuel burn shipping optimization problem involves doing such an asinine thing. In reality, you're going to sail through a part of the Atlantic Ocean that helps reduce your ship's fuel burn rate by using the current to its advantage, or minimizing losses when fighting against the current. The same constraining of stored data applies here to reaching Mars from Earth. A 3BP optimization involving a direct ascent trajectory, launching from the North Pole towards Mars, is of academic interest only.

Applying that restriction to the stored data reduces the quantity of data contained in the lookup table from an infinite number of values to a highly constrained set of values. We would use the coordinates for these "assigned blocks of 3-dimensional space" and applicable gravitational acceleration vector coefficients to plot our minimum energy trajectory from Earth to intercept Mars.

2. For a given initial starting point in time, use those "lookup values" to compute their effect on the velocity of the spacecraft to approximate their effect on vehicle acceleration magnitude and direction. Anything that veers outside of the stored table of coordinates either requires a spacecraft Delta-V input (trajectory adjustment using onboard propulsion systems), or it doesn't, in which case that solution is "more optimal" because it requires no Delta-V increment applied by the propulsion system. Set limits on how much total Delta-V you're willing to devote to mid-course correction burns, so you can reject any solutions that exceed your onboard propulsion capabilities.

3. With the range of potential solutions appropriately restricted, you're now left with something that ceases to attempt to derive a solution the moment established onboard propulsion limits are exceeded. Better still, you no longer need to compute the results for a series of differential equations because you've already derived that output. That should drastically reduce the computational power required. If relevant parts of the table data are loaded into memory only as required, that also tends to improve execution time.

What do I actually mean we should do here to arrive at potential solutions with personal computing hardware?

For points in space inside the Lagrangian Plane associated with the planetary body in question, compute and store the results from the differential equations required to describe the effect on the trajectory of the vehicle as a result of the 3BP. For points in space outside of that Lagrangian Plane, where gravitation from the Sun has the greatest effect on the motion of the vehicle, revert back to solving a 2BP since that is easily determined using analytic methods. If/when the vehicle trajectory arrives at the Lagrangian Plane associated with the destination body, then revert back to using the table lookup values to reduce the compute-intensity of a 3BP.

Assuming you intend to minimize future Delta-V input using your onboard propulsion system (propellant consumption from mid-course correction burns), your initial trajectory also has the greatest overall effect on where you end up at the time step coinciding with breaking the Lagrangian Plane upon arrival near the target body since there are an ever decreasing number of time steps remaining to intercept (or miss) as you proceed along your outbound flight trajectory. If you're going to bother with continuing to compute the results for a 3BP past the Lagrangian Plane, then that should probably only be done on the outbound portion of the flight trajectory while you're still relatively near Earth, assuming the target body is a planet with the mass of Mercury / Mars / Venus. That probably doesn't hold true for a much more massive body such as Jupiter / Saturn / Neptune / Uranus.
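The table-plus-fallback idea described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions, not a mission-planning tool: the Sun and Earth are frozen in place (no orbital motion), the table is a plain cube of grid cells around Earth rather than a trajectory "corridor", the lookup is nearest-cell rather than interpolated, and every name, grid size, and constant below is hypothetical.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30     # kg
M_EARTH = 5.972e24   # kg
AU = 1.496e11        # m

# Assumption: a frozen snapshot with the Sun at the origin and Earth on the x-axis.
SUN_POS = (0.0, 0.0, 0.0)
EARTH_POS = (AU, 0.0, 0.0)

CELL = 2.5e8   # hypothetical grid spacing, m
HALF = 6       # cells on each side of Earth, so the table spans +/- 1.5e9 m


def point_mass_accel(pos, body_pos, mass):
    """Newtonian acceleration at pos due to a single point mass."""
    d = [b - p for b, p in zip(body_pos, pos)]
    r = math.sqrt(sum(c * c for c in d))
    return tuple(G * mass * c / r**3 for c in d)


def full_accel(pos):
    """Sun + Earth acceleration: the value we precompute instead of re-deriving."""
    a_sun = point_mass_accel(pos, SUN_POS, M_SUN)
    a_earth = point_mass_accel(pos, EARTH_POS, M_EARTH)
    return tuple(s + e for s, e in zip(a_sun, a_earth))


def build_table():
    """Precompute full_accel at the centre of every grid cell around Earth."""
    table = {}
    for i in range(-HALF, HALF):
        for j in range(-HALF, HALF):
            for k in range(-HALF, HALF):
                centre = tuple(
                    e + (n + 0.5) * CELL for e, n in zip(EARTH_POS, (i, j, k))
                )
                table[(i, j, k)] = full_accel(centre)
    return table


def accel_lookup(pos, table):
    """Nearest-cell table value near Earth, Sun-only two-body value elsewhere."""
    key = tuple(math.floor((p - e) / CELL) for p, e in zip(pos, EARTH_POS))
    if key in table:
        return table[key]
    return point_mass_accel(pos, SUN_POS, M_SUN)


table = build_table()
```

A propagator would call `accel_lookup` at each time step instead of summing the contribution of every body. A real version would need time-dependent planet positions and interpolation between cells, since a nearest-cell value is coarse close to the planet where gravity changes quickly with distance.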

GW,

If that seems like a reasonable approach, please let me know. I know very little about orbital mechanics. This is just an idea for reducing the computing power and time required. If there's some reason this won't work, please let me know.

#104 Re: Science, Technology, and Astronomy » Rotating Detonation Engine » 2025-06-13 06:56:20

Void,

33X 200:1 TWR Raptor-3 engines weigh 49,500kg.

33X 800:1 TWR RDEs would weigh about 12,375kg.

The propellant tanks weigh 80,000kg when made from 304L stainless, and the interstage weighs about 20,000kg. If the propellant tanks were made from CFRP, then mass is around 20,000kg. Those two changes can save around 97,125kg, cutting the dry mass of the Super Heavy Booster in half. That is a massively consequential upgrade to both engine performance and propellant tank dry mass.
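The mass savings above can be reproduced with a short Python sketch. It uses only the figures quoted in this post and assumes that engine mass scales inversely with TWR at constant thrust; the variable names are hypothetical.

```python
N_ENGINES = 33
RAPTOR_TOTAL_KG = 49_500      # from the post: 33 Raptor-3 engines at 200:1 TWR
RAPTOR_TWR = 200
RDE_TWR = 800                 # from the post: hoped-for RDE thrust-to-weight ratio

STEEL_TANKS_KG = 80_000       # from the post: 304L stainless propellant tanks
CFRP_TANKS_KG = 20_000        # from the post: CFRP propellant tanks

# Assumption: same thrust per engine, so mass scales with the inverse TWR ratio.
raptor_each_kg = RAPTOR_TOTAL_KG / N_ENGINES
rde_each_kg = raptor_each_kg * RAPTOR_TWR / RDE_TWR
rde_total_kg = rde_each_kg * N_ENGINES

engine_savings_kg = RAPTOR_TOTAL_KG - rde_total_kg
tank_savings_kg = STEEL_TANKS_KG - CFRP_TANKS_KG
total_savings_kg = engine_savings_kg + tank_savings_kg

print(f"RDE engine set: {rde_total_kg:,.0f} kg")
print(f"Total savings:  {total_savings_kg:,.0f} kg")
```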

Between those two night-and-day performance enhancements to our chemical rocket technology, SSTOs become not merely technically feasible but reasonably practical. Flight physics dictates that a TSTO will always soundly beat a SSTO on payload performance unless dry mass approaches zero. We really can't do much about that, and any SSTO mass reduction will likely carry over to a TSTO design with better payload performance. However, TSTO does not beat SSTO in overall simplicity of operation. By its very nature, any vehicle that deliberately breaks apart into multiple pieces during its flight, with separate recovery locations for the different pieces of the vehicle, is going to be more complex, time consuming, and expensive to operate. When a mixed propulsion SSTO can take off and land on a conventional runway like other jet aircraft, VTVL TSTOs will be relegated to specialist heavy cargo delivery roles because we won't be able to justify the operating expenses or dangers associated with operating them for routine commercial orbital passenger service.

So long as it's not too extreme, justification for the additional propellant consumption of a SSTO vs TSTO for passenger service is little different than justifying the additional fuel consumption of much faster jet aircraft capable of carrying more passengers over shorter duration flights vs slower piston engine aircraft that carry fewer passengers. Reduced propellant consumption is always desirable, but by mass most of the propellant is oxidizer rather than fuel. That is why a mixed propulsion scheme that significantly offsets the onboard oxidizer mass by consuming atmospheric Oxygen is the most likely path to a commercially successful SSTO that is at least GLOW-competitive with TSTOs.

I view RDEs, mixed propulsion consuming atmospheric Oxygen, advanced high strength composites, and advanced ceramic composites for engine components and thermal protection as the technological underpinnings required for the success of the National AeroSpace Plane (NASP) Program. NASP began in earnest in the 1980s, although conceptual studies began in the early 1970s. Here in 2025, it's starting to look like we finally have the technology to make a practical NASP vehicle, which was intended to be a SSTO with mixed propulsion.

It took about 50 years to go from Wright Flyer to Boeing 747, 50 years from Saturn V to a rapidly reusable super heavy lift launch vehicle (Starship Super Heavy), and about 50 years yet again to go from 1.5 stage-to-orbit semi-reusable space planes (Space Shuttles) to rapidly reusable SSTO space planes (NASP), so time-wise I would say we're on-track.

RAND Report on NASP:

The National Aerospace Plane (NASP): Development Issues for the Follow-on Vehicle - Executive Summary

#105 Re: Science, Technology, and Astronomy » Rotating Detonation Engine » 2025-06-11 09:02:48

Void,

Correct. After you're in orbit, overcoming the gravity losses associated with low TWR becomes far less important than other factors such as engine mass / complexity / physical size, because you would generally take advantage of the Oberth effect during thrusting periods to minimize gravity losses. Aerodynamic drag losses are almost nonexistent in LEO, although there is still some atmosphere which will ultimately reduce your vehicle's velocity through collision, over a period of weeks to months.

In a low orbit, traveling at roughly 7.8km/s, your vehicle already has quite a bit of momentum, which ought to be in your desired direction of travel, previously established by your launch trajectory. To go to Mars on a 6 month free return trajectory from an ISS-like orbit, you need to add another 3.9km/s. Whether said velocity increment is added over 15 minutes or 5 hours should be impacted relatively little by gravity losses.

#106 Re: Science, Technology, and Astronomy » Rotating Detonation Engine » 2025-06-11 02:23:05

Void,

Brief impulsive burns are the preferred way to operate all rocket engines to minimize gravity losses, but some engines don't generate enough thrust to do that. The insufficient thrust problem primarily applies to the various electric propulsion schemes, but sometimes applies to low power chemical propulsion for interplanetary spacecraft and satellites as well.

Gravity loss reduction is critical when attempting to achieve orbital velocity from a launch pad. Some user here, maybe PhotonBytes, has proposed using an air-breathing mixed propulsion scheme whereby a spaceplane repeatedly dips into the upper reaches of the atmosphere to obtain Oxygen for combustion, following a sinusoidal trajectory around the Earth until sufficient velocity is achieved that very little pure rocket propulsion (onboard oxidizer plus fuel), at greatly reduced Isp, is required to attain orbital velocity. Gravity losses will be higher in this scheme simply due to total thrusting time required to attain orbit, but the Isp is so much higher from not having to carry most of the oxidizer onboard the vehicle that so long as said spaceplane can tolerate the extreme aerodynamic heating it will be subjected to, the end result is a significant propellant consumption reduction. How well that works in real life remains to be seen. I place this scheme in the "easier said than done" category. I think it's probably attainable, but at what cost?

Gravity loss is highest (near or at 100%) when thrusting "straight up" to clear the dense lower atmosphere, as is typical of a TSTO design that uses most or all of the propellant in the booster stage to rapidly ascend through the lower atmosphere before the gravity turn and firing of the second stage, which provides the majority of the delta-V necessary to achieve orbit. If your rocket was powerful enough and you only intended to attain escape velocity, you could theoretically thrust "straight up" or at least "straight to your intended interplanetary target", and leave Earth's gravity well that way.
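For reference, the standard textbook expression for gravity loss makes the "near or at 100%" statement concrete. The symbols are not from the post and are introduced here only for illustration: $t_b$ is the burn time, $g$ the local gravitational acceleration, and $\gamma$ the flight-path angle measured up from the local horizontal.

$$
\Delta v_{\text{gravity}} = \int_{0}^{t_b} g(t)\,\sin\gamma(t)\,dt
$$

During a vertical climb $\gamma = 90^\circ$, so $\sin\gamma = 1$ and the full value of $g$ is subtracted from the vehicle's acceleration for every second of thrusting. Once the vehicle has pitched over and is thrusting nearly horizontally, $\sin\gamma \approx 0$ and the loss term becomes small.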

I believe the Nova booster concept, which was to be equipped with 8 F-1 engines in its first stage, was designed for a "direct ascent trajectory". For various reasons, mostly related to energy and monetary economics, direct ascent was ultimately rejected as the most practical means to achieve the lunar landings during the Apollo Program. Direct ascent requires a much more powerful rocket. The Saturn V, with only 5 F-1 engines in its first stage, was the "economy model" moon rocket. We had to master orbital rendezvous and docking to use a comparatively less powerful Saturn V, but those on-orbit maneuvers were subsequently proven to work quite well, and are used today every time a spacecraft visits ISS. Saturn V could deliver 140t to LEO. Saturn C8 (Nova) could deliver 210t to LEO, and the SpaceX Starship Super Heavy V3 aims for about 200t with full reusability or up to 300t when fully expended. If we were to expend the upper stage of Starship and include a Hydrogen powered third stage, then we'd have a very powerful direct ascent moon rocket.

After you're already in orbit, you can perform multiple burns, firing the engine at the points along a highly elliptical orbit that are most favorable to increasing velocity along your intended trajectory (the Oberth effect, meaning burns made near periapsis where the vehicle is moving fastest). This typically applies to escape maneuvers, but is also used by satellites going to higher orbits to reduce propellant consumption. GW will correct me if I'm wrong, but I believe his space tug concept also capitalizes on this kind of periapsis thrusting to take the payload to just shy of escape velocity so the upper stage can be refueled and reused. Any engine capable of multiple restarts makes this possible. RL-10 upper stage engines could restart 15 and later up to 25 times. Sometimes restart limitations are imposed by the ignition method, especially when using TEA/TEB or other pyrophoric compounds to achieve ignition, and sometimes by stress on various engine components or cumulative damage. This will vary from engine to engine. RS-25 engines use the equivalent of a spark plug, so if there is enough electrical power then the number of possible restarts is not limited by the count of onboard pyro cartridges.

To the best of my knowledge, RDEs can achieve thrust-to-weight ratios (TWRs) better than conventional chemical rocket engines, so engine thrust would not be a limiting factor. Gravity and atmospheric drag, however, are always going to be limiting factors. After you're in orbit, as you noted, a RL-10 thrust level engine, provided that all components can handle the stress of firing, could perform long duration burns or multiple burns. Examples of small engines performing extended duration burns include engines powered by storable chemical propellants. Russian interplanetary space probes have upper stages with small engines that fire for quite some time, for example. Some engines can fire for cumulative times measured in terms of multiple hours, although most have single burn duration limits because their nozzles are made from uncooled refractory metals that can only withstand burns of a given duration. If the engine combustion chamber and nozzle were regeneratively cooled or made from Carbon-Carbon that can readily withstand the temperatures generated by even the hottest burning propellants, so long as the turbopumps / valves / seals / injectors / combustion chamber / nozzle and any other attached machinery can withstand the forces and temperatures involved, such an engine could theoretically fire for hours on end to achieve the desired Delta-V, if need be.

At least in theory, since RDE fuel injection systems operate in a manner more similar to a piston-driven internal combustion engine with electronic fuel injectors, they don't use turbopumps to feed the propellants. RDEs have very few components and fewer moving components than typical turbopump-fed rocket engines. They do require electronic propellant feed control with exceptionally precise injection timing. When sequenced correctly, it's as if the detonation of the propellant forms a sort of "standing detonation wave" that endlessly "swirls" around the combustion chamber. After initial ignition, in steady state operation no igniter is required to maintain combustion. Just as the first propellant injection and detonation sequence completes the second detonation sequence begins, and in so doing generates continuous thrust using a very rapidly pulsing rather than continuous fuel injection. Heat from the combustion chamber and nozzle can also be used to convert cryogenic liquids into gasses. You have more heated surface area to work with, as compared to a conventional rocket engine design with a bell nozzle, so the engine can generate more thrust than a typical pump-fed expander cycle engine like the RL-10, before a conventional expander cycle engine runs into heat transfer rate limitations from the limited surface area available to provide input power.

NASA has experimented with Hydrogen, Methane, and RP1 fueled RDEs with regenerative cooling. NASA has also experimented with Reinforced Carbon-Carbon (RCC) nozzles that don't absolutely require regenerative cooling. RCC is 1/4th the mass of the commonly used Nickel-Copper alloys (2g/cm^3 vs 8g/cm^3) and won't melt if left uncooled. The first goal behind using RCC was engine mass reduction / TWR improvement for upper stage engines. The RDE scale engine models used thus far are about half as heavy as conventional rocket engines for the same thrust output and dramatically more compact. That means a RDE fabricated from RCC could be about 1/8th the mass of a typical RL-10 for the same thrust output, so putting 8 RDEs on the back of an upper stage would provide about 880kN of thrust for the mass of a single RL-10. Add a few more engines and you achieve J-2X / Merlin thrust levels from something that's not much more mass than the RL-10. At that point, even with a fairly substantial payload, your burn is less than an hour in duration.
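To put a rough number on the burn duration, here is a minimal Python sketch based on the rocket equation. The 880kN thrust comes from the paragraph above and the 3.9km/s departure increment is the figure quoted elsewhere in this thread. The 500t initial vehicle mass and 450s Isp are assumptions chosen purely for illustration, the function name is hypothetical, and gravity losses are ignored.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def burn_time_s(initial_mass_kg, delta_v_ms, thrust_n, isp_s):
    """Constant-thrust burn duration from the rocket equation, ignoring gravity losses."""
    exhaust_velocity = isp_s * G0
    propellant_kg = initial_mass_kg * (1.0 - math.exp(-delta_v_ms / exhaust_velocity))
    mass_flow_kg_s = thrust_n / exhaust_velocity
    return propellant_kg / mass_flow_kg_s


# Assumptions: 500 t vehicle in LEO and a Hydrogen-class Isp of 450 s.
t = burn_time_s(initial_mass_kg=500_000, delta_v_ms=3_900, thrust_n=880_000, isp_s=450)
print(f"Burn time: {t / 60:.1f} minutes")
```

With those assumed inputs the burn comes out at roughly 25 minutes, which is in line with the "less than an hour" estimate.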

The current / active RL-10 versions are 170kg to 300kg, so a thrust-equivalent RDE would weigh about 85kg to 150kg. If advanced materials such as RCC are used throughout the engine, then the engine might weigh as little as 21.25kg to 37.5kg. That's a trivial amount of weight for a RL-10 thrust-class Hydrogen burning engine, mostly because it doesn't require an enormous Hydrogen turbopump. At some point traditional turbopumps are likely required to feed enough propellant, but the 10kN to 44kN RDEs that NASA has experimented with thus far don't require them. It's easy to see what the real advantages are. There's a modest Isp bump from using detonation, but engine size, mass, complexity, and fabrication time / cost matters at least as much. As we've already noted, as long as the engine can withstand the stress of firing, multiple hours of firing time are feasible and increased total burn time can be favorably traded-off against other engineering considerations such as the force that an upper stage engine will subject the entire vehicle to as the propellant mass is expended. Maybe you don't want an excessive amount of acceleration applied to a large interplanetary vehicle equipped with fully deployed solar and radiator arrays. A single large pump-fed engine can only throttle to a certain point. Multiple smaller RDEs would give you more throttling range to work with.
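The engine mass scaling above is simple enough to check in a few lines of Python. The factors are the ones quoted in these posts (an RDE at about half the mass of a conventional engine, and RCC at about one quarter the density of the metal alloys); applying the density ratio to the whole engine is the post's simplification, not an established result, and the names below are hypothetical.

```python
RL10_MASS_RANGE_KG = (170.0, 300.0)   # from the post: current RL-10 versions
RDE_MASS_FACTOR = 0.5                 # from the post: RDE is about half as heavy
RCC_DENSITY_RATIO = 2.0 / 8.0         # from the post: 2 g/cm^3 vs 8 g/cm^3

# Metal RDE of equal thrust, then the same engine built entirely from RCC.
rde_metal_kg = tuple(m * RDE_MASS_FACTOR for m in RL10_MASS_RANGE_KG)
rde_rcc_kg = tuple(m * RCC_DENSITY_RATIO for m in rde_metal_kg)

print("Metal RDE:", rde_metal_kg)
print("RCC RDE:  ", rde_rcc_kg)
```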

Air-breathing RDE variants have been simulated to function up to ~5.8km/s, which is well beyond burnout velocity for all booster stages I'm aware of. After that, you only need to add a couple more km/s using onboard oxidizer to attain orbital velocity. You also get a badly-needed TWR improvement with Hydrogen burning RDEs for a SSTO vehicle, although there's still not much you can do to reduce the additional Hydrogen tank mass, nor TPS mass if you intend to make the vehicle fully reusable. It's an interesting pathway towards a practical SSTO, but one that requires a lot more work. Mixed propulsion with air-breathing Methane burning RDEs is probably the way forward for SSTOs. You get to offload a lot of the oxidizer mass you'd need to carry in the vehicle, an Isp increase over RP1 without resorting to absurdly large LH2 tanks, and sky-high TWRs. Raptor 3's TWR is 200:1. Even if the best we can do is 400:1, the mass of the engine hardware becomes trivial, but 800:1 is at least possible. We could implement almost arbitrarily large SSTOs (300t to 500t to LEO) with payload mass fractions comparable to today's TSTOs.
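A quick rocket-equation comparison shows why handing off at ~5.8km/s matters so much. This Python sketch compares the propellant mass fraction needed for the last ~2km/s against the fraction needed for the full 7.8km/s on rocket power alone. The 360s Isp is an assumed Methane-class vacuum value, the function name is hypothetical, and gravity losses, drag losses, and the fuel burned during the air-breathing phase are all ignored.

```python
import math

G0 = 9.80665                   # standard gravity, m/s^2
ORBITAL_VELOCITY_MS = 7_800    # from this thread: roughly 7.8 km/s in low orbit
AIRBREATHING_CUTOFF_MS = 5_800 # from the post: simulated air-breathing RDE limit
ROCKET_ISP_S = 360             # assumption: Methane-class vacuum Isp


def propellant_fraction(delta_v_ms, isp_s):
    """Fraction of initial mass that must be propellant for a given delta-V."""
    return 1.0 - math.exp(-delta_v_ms / (isp_s * G0))


remaining = propellant_fraction(ORBITAL_VELOCITY_MS - AIRBREATHING_CUTOFF_MS, ROCKET_ISP_S)
all_rocket = propellant_fraction(ORBITAL_VELOCITY_MS, ROCKET_ISP_S)

print(f"Rocket-only final 2 km/s: {remaining:.0%} of mass at handoff")
print(f"Rocket-only full ascent:  {all_rocket:.0%} of liftoff mass")
```

Under these assumptions the pure-rocket phase needs a bit over 40% of the handoff mass as propellant, versus nearly 90% of liftoff mass for an all-rocket ascent before any losses are counted.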

#107 Re: Science, Technology, and Astronomy » SMR Small Module Reactor » 2025-06-07 12:13:47

SMRs would be a great capability to have, and absolutely necessary for powering colonies on Mars. Unfortunately, every one of these designs has ultimately gone nowhere in about 5 to 10 years' time. A bunch of money is spent to construct a scale prototype, and then for reasons unknown to me we never drag the finalized design across the finish line where the first commercial units are certified to start producing electric power. That's a shame because a number of innovative designs, arguably safer / easier to use / easier to maintain, have been on offer. The closest thing to a SMR we have are the nuclear submarine reactors, and all of those seem to work quite well, decade after decade.

I don't know if the hurdle is a regulatory issue or because the companies involved in SMR design lack the depth of engineering talent or financing talent or something else like institutional bias against smaller reactors. I'd be quite pleased if this time is different and we end up with real tangible hardware, ready to deploy to remote locations around the world and on other planets. I know that many of the engineering challenges are the same regardless of reactor power level, but SMRs must also have some unique challenges of their own.

Maybe someone can speak to the engineering issues unique to SMRs. I listened to a YouTube podcast on Decouple Media where a US DoE expert was talking about working with various reactor designers to evaluate the technical merits / demerits of SMR designs. He talked a lot about cooling the reactor and operations, especially maintaining the balance-of-plant for SMRs, which is apparently a bit of an issue due to close proximity to the operating reactor. My gut-feel on this is that maintaining the power turbine and electrical equipment is something of an afterthought in these highly integrated reactor designs, and that the inability for a human worker to go physically tweak components makes them unreliable from the standpoint of having to shut down the reactor to work on everything else that is not the reactor itself.

The gist of the conversation on Decouple Media is that when you design a SMR, even if the reactor core itself is readily truck-transportable, we're going to stuff that reactor into a subsurface concrete containment structure with a bunch of infrastructure built on top of it, just like a conventional power plant. The net-net is that the reactor core and electric generating equipment are transportable by truck or rail, but the facility that will operate the reactor is not transportable in any sense of the word. It's a fixed site that involves excavation and must be fabricated over multiple months. The upside is that following fuel depletion the reactor itself can then be returned to the "reactor factory" for refueling and refurbishment, so there's no pile of fuel rods that accumulates onsite the way it would with a traditional GW-class PWR or BWR. This approach is "cleaner" in the sense that the fuel stays inside the reactor core and will never leave the core while the reactor is onsite.

I think the key to making these reactors profitable is operating them at elevated temperatures and using sCO2 turbines so that the balance-of-plant is tiny and doesn't require a fresh water supply. I don't think most people recognize how great a technological windfall it is to have "steam turbine equivalents" that are 10 times smaller than steam turbine equipment and don't need a water supply. So long as you can deliver the materials to fabricate the power plant facility, to include the power transformers / switching yard / power lines, all the rest of the equipment can be trucked into and out of the facility. That is simply not feasible using steam turbines and traditional GW-class reactors, which must be fabricated onsite over 5 to 10 years. It should be possible to get a SMR facility up and running over 2 years.

#108 Re: Meta New Mars » kbd512 Postings » 2025-06-06 13:20:38

tahanson43206,

A much cheaper option would be using full ceramic bearings with minimal Silicone-based lubricant. At 6rpm, the duty cycle is 3,153,600 cycles per year.
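The duty cycle figure is straightforward to verify. In this small Python sketch the function name is hypothetical and a 365-day year is assumed.

```python
RPM = 6  # from the post: habitat spin rate


def bearing_cycles(rpm, years=1):
    """Total rotations seen by the bearing, assuming continuous spin and 365-day years."""
    return rpm * 60 * 24 * 365 * years


cycles_per_year = bearing_cycles(RPM)
print(f"{cycles_per_year:,} cycles per year")
print(f"{bearing_cycles(RPM, years=10):,} cycles over 10 years")
```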

A drastically cheaper option would be using standard Babbitt metal bearings with lubricants. Much hay is made over this, but the battery operated tools used by the astronauts to repair the Hubble Space Telescope, such as the bit driver they used to unscrew and re-torque bolts, did in fact use bearing lubricants. The photovoltaic arrays on the ISS do in fact use sealed metal bearings with a vacuum-compatible grease.

That means there are 3 ways to solve this centering and bearing problem:

1. Contactless permanent magnets or electromagnets.

2. Dry or minimally lubricated ceramic bearings.

3. Lubricated metal bearings.

Option 1 is scientifically interesting because it will theoretically never fail from direct contact friction.

Option 2 can work, but is also expensive at the scale required and will still require periodic replacement.

Option 3 will work for many years before replacement and is exhaustively well proven on Earth and in orbit aboard ISS.

#109 Re: Meta New Mars » kbd512 Postings » 2025-06-06 12:30:07

tahanson43206,

Using rings of permanent magnets in a Halbach array could lock all rotating and "stationary" parts of the vehicle in place without physical contact. Permanent magnets are typically heavier than electromagnets for the field strength they provide, but they do not require any input electrical power. For the strength of the field required for this application, stronger fields are likely irrelevant. Beyond that, we really don't want the magnets to be "turned off" in this application. The vehicle will function best if the magnets are "on" at all times, and the various parts "float" perhaps a centimeter away from each other.

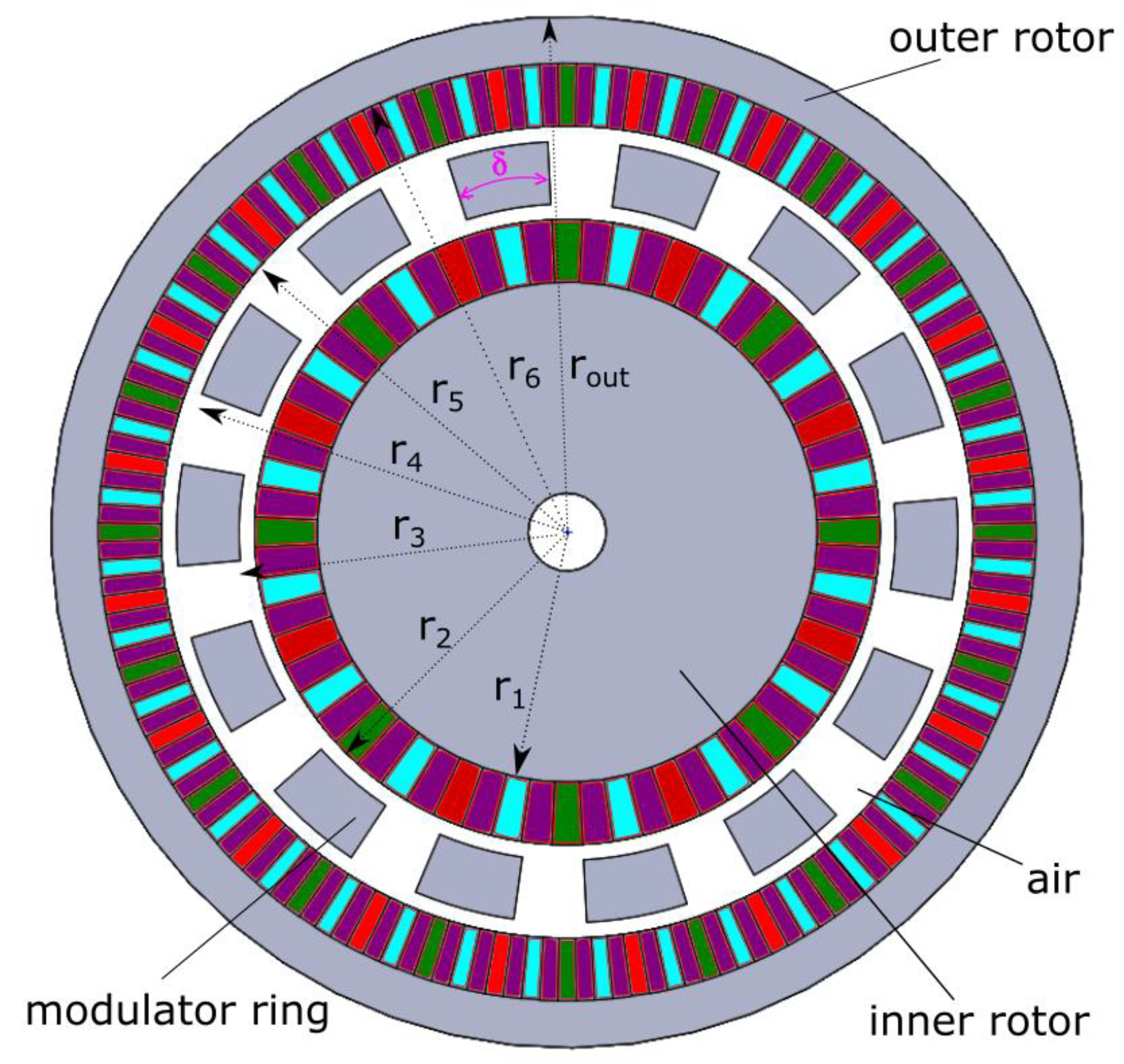

The label "air" would be replaced with "vacuum" in space:

A planar permanent magnet array:

Axial flux electric motor:

Halbach arrays can also be incorporated into a "curved" inner and outer lip type of geometry, so that the magnetically suspended habitation modules cannot readily "fly apart", even if the magnets themselves fail.

#110 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-06 01:47:24

I think composite structures are going to have less difficulty resisting the forces applied to them than light alloys. For starters, composites have sky-high stiffness (resistance to bending / deformation) and tensile strength, as compared to 2xxx series Aluminum alloys and 3xx series stainless steels. Coefficients of Thermal Expansion are much lower for Carbon-based composites than they are for virtually any suitable metal alloys, so the thermal strains caused by temperature deltas between areas of the structure in full Sun and in shadow are far smaller. Composites don't corrode from atomic Oxygen attack in LEO, so that's not much of a consideration, either.

We can make fairly thick composite structures from very thin layers of Carbon Fiber tow / roving / tape material, such as IM7 fiber, because the composite's density falls somewhere between 1.5g/cm^3 and 2g/cm^3, with 1.7g/cm^3 to 1.8g/cm^3 being the most probable value for an IM7 fiber composite consisting of 60% fiber fill and 40% resin matrix. Magnesium is 1.737g/cm^3. The strongest Magnesium alloy I could find would yield around 50.75ksi. IM7/5320-1 fiber/resin yields between 245ksi (compression) and 270ksi (flexure). Maraging steel yields between 250ksi and 350ksi, dependent upon the steel's heat treatment, and its density is around 8g/cm^3, or 4.7X less mass for the bulk composite for similar strength to C300 maraging steel. The big and small of this matter is that we have an exhaustively proven aerospace composite combination available at affordable prices. When used to fabricate cryogenic propellant tanks, the cost of the finished product was 25% to 30% below the cost of a comparable Al2195 tank. Most of the cost of composites is the energy consumed to create the materials. The tape winding fabrication process takes place over a matter of weeks (typically 1-3 weeks, maybe 5 weeks for complex parts), and is no more time consuming than the months of milling and welding Al2195 "potato chips" into finished structures. The curing process then takes place over the next month or two, but involves very little touch labor and no movement of the part or structure. Additional painting or coating processes may be required, perhaps a fluoropolymer sealant to inhibit absorption of moisture, for example.

Hexcel HexTow IM7 Carbon Fiber Material Properties

Fiber Density: 1.78g/cm^3

Cytec CYCOM 5320-1 Epoxy Resin System Material Properties

CYCOM 5320-1 is a toughened epoxy resin prepreg system designed for vacuum-bag-only (VBO) or out of autoclave (OOA) manufacturing of primary structural parts. Because of its lower temperature curing capability, it is also suitable for prototyping where low cost tooling or VBO curing is required.

CYCOM 5320-1 handles like standard prepreg, yet can be vacuum bag cured to produce autoclave quality parts having very low porosity. It offers mechanical properties equivalent to other 350°F (177°C) autoclave-cured toughened epoxy prepreg systems after a 350°F (177°C) post-cure. The laminate can support a freestanding post-cure after an initial low temperature cure. The material can also be cured under positive pressure in an autoclave or press.

CYCOM 5320-1 Cured Resin Density: 1.31g/cm^3

NCAMP 5320-1 Resin System Processing Documentation:

Cytec, a Syensqo Company 5320-1

NCAMP NMS 818 Carbon Fiber Specifications Documentation Repository for Qualified Materials:

NMS818 Carbon Fiber Specifications

NCAMP NMS 818/8 Fiber Specification for Hexcel HexTow IM7 Unidirectional Fiber Tape:

Document No.: NMS 818/8 Revision N/C, February 8, 2013 - for NMS 128/2 IM7 8552 Unitape

60% Fiber Fill: (1.068g/cm^3) + 40% Cycom 5320-1 Resin (0.524g/cm^3) = 1.592g/cm^3

I prefer to use 1.7g/cm^3 as my bulk density "fudge factor figure" in lieu of the manufacturer's exact stated material properties. If I use 1.7g/cm^3, then I know I'm not going to end up with a primary structure component with greater mass than what I intended. If the component ends up modestly lighter, that's fantastic. If not, then I'm still covered. Since the mechanical properties of this IM7 fiber used in conjunction with the 5320-1 resin system are so well defined, relative to many other composite materials, we can have high confidence in the mechanical properties of the components fabricated from this combination. One of the major complaints engineers have about working with composite materials is not knowing exactly what they're getting. I think that problem is mostly solved by using this combination. We're not getting as much strength or as much stiffness as we possibly can, but we are getting a good mix of properties suitable for this application.
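For anyone who wants to rerun the density arithmetic with a different fiber fill, here is a minimal sketch of the rule-of-mixtures calculation. It treats the 60% / 40% split as volume fractions, which is my assumption, and the function and variable names are purely illustrative:

```python
# Rule-of-mixtures bulk density for a fiber/resin laminate.
# ASSUMPTION: the 60/40 split is by volume, with no void content.
FIBER_DENSITY = 1.78  # g/cm^3, Hexcel HexTow IM7 (from the figures above)
RESIN_DENSITY = 1.31  # g/cm^3, cured CYCOM 5320-1 (from the figures above)
STEEL_DENSITY = 8.0   # g/cm^3, approximate maraging steel figure used earlier

def laminate_density(fiber_fraction: float) -> float:
    """Weighted average of fiber and resin densities."""
    return fiber_fraction * FIBER_DENSITY + (1.0 - fiber_fraction) * RESIN_DENSITY

calculated = laminate_density(0.60)
design = 1.70  # g/cm^3, the conservative "fudge factor" figure
margin = (design - calculated) / calculated

print(f"calculated bulk density: {calculated:.3f} g/cm^3")
print(f"margin carried by using {design:.2f} g/cm^3: {margin:.1%}")
print(f"steel-to-composite density ratio: {STEEL_DENSITY / design:.1f}x")
```

The margin line shows how much mass allowance the 1.7g/cm^3 figure carries over the calculated value, which is the point of using the rounder number.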

One underappreciated aspect of selecting these fabrication materials is just how thick our parts can be at reasonable mass. If our parts range in thickness between 10mm and 25mm, we're going to have exceptional resistance to deformation relative to much thinner cross-section Aluminum alloys, with tensile strength very comparable to maraging steel. The only mass-comparable structural metal alloys similar in density to composites are Magnesium alloys, none of which begin to approach the tensile strength of maraging steel. The relationship between moment of inertia and resistance to bending / deflection under load means doubling the thickness of a part doesn't increase stiffness by only 2X, it's 8X as stiff. If we were limited by the yield strengths and masses of structural metal alloys, this would quickly increase mass to impractical levels. Thankfully, we're not.
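The 8X figure comes from the cubic dependence of a rectangular section's second moment of area on thickness, I = b*h^3/12. A small sketch, assuming a solid rectangular cross-section and an arbitrary 100mm width chosen only for illustration:

```python
# Second moment of area of a solid rectangular section: I = b * h^3 / 12.
# For the same material (same modulus) and the same width, bending stiffness
# scales directly with I, so thickness enters as a cube.
def second_moment_rect(width_mm: float, thickness_mm: float) -> float:
    """Return I in mm^4 for a solid rectangle bent about its neutral axis."""
    return width_mm * thickness_mm ** 3 / 12.0

WIDTH_MM = 100.0  # hypothetical panel strip width, not from any design

base = second_moment_rect(WIDTH_MM, 10.0)
for thickness in (10.0, 20.0, 25.0):
    ratio = second_moment_rect(WIDTH_MM, thickness) / base
    print(f"{thickness:.0f}mm thick: {ratio:.2f}x the stiffness of a 10mm part")
```

The width cancels out of the ratio, so the comparison holds for any strip width as long as the section stays solid and rectangular.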

#111 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-04 18:19:10

tahanson43206,

YES! There we go! That's the basic idea.

To prevent the gas leaks GW is concerned about, my "idea", if you will, was that both rotating modules would be completely sealed and if you wanted to transfer people between modules then you would "spin down" both units and then attach a "docking ring" between the two rotating units to allow people to pass between them.

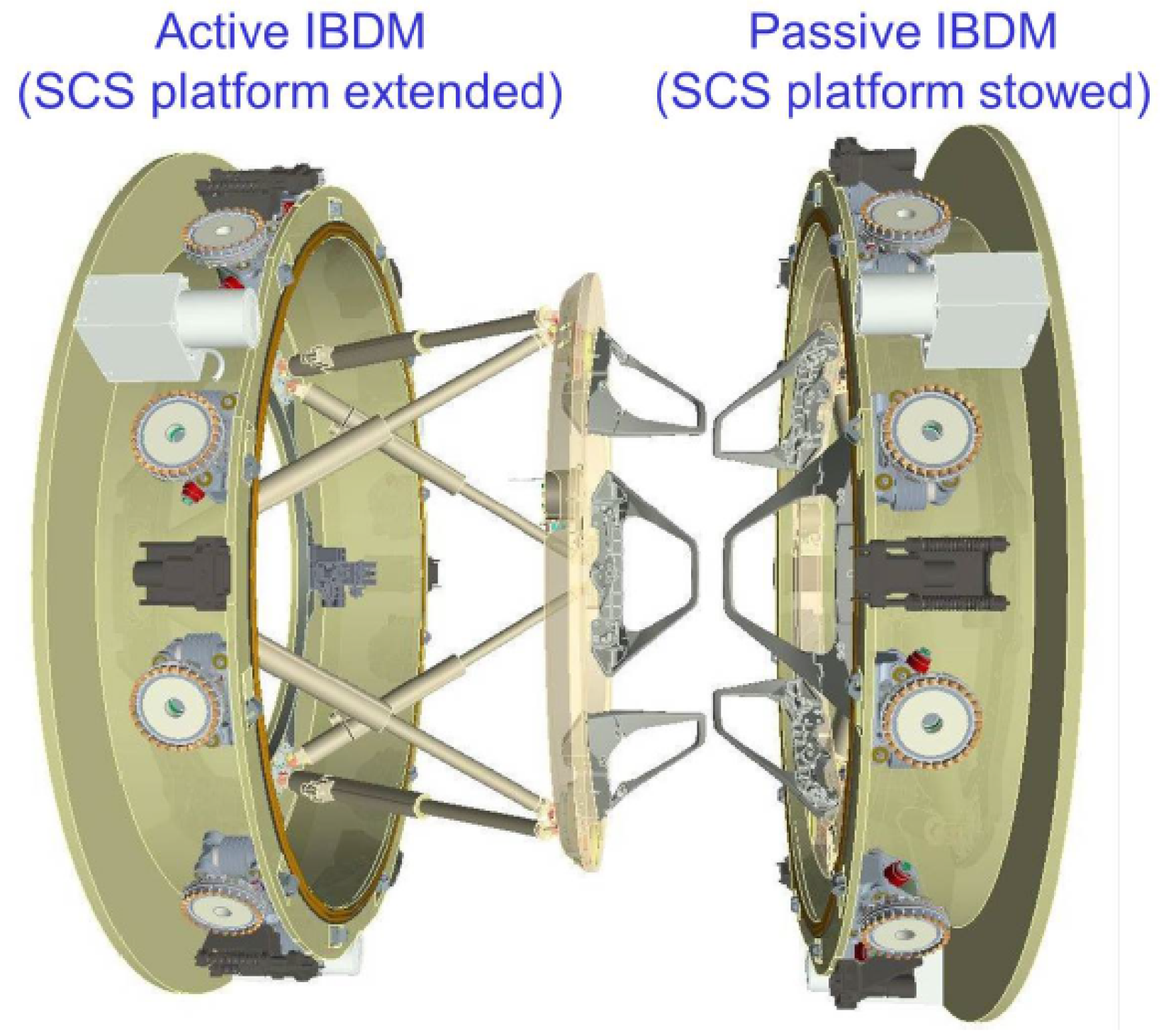

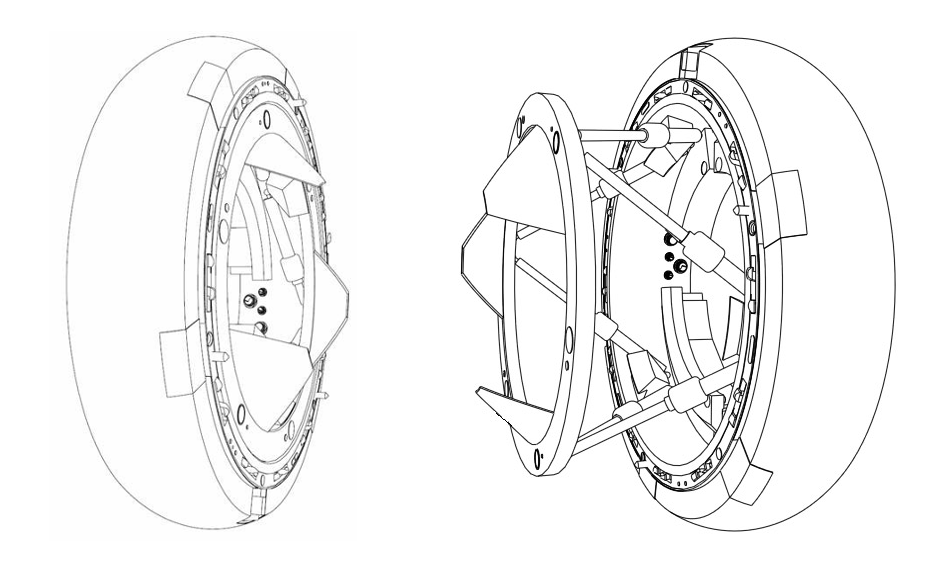

There's a part of the "docking module" on ISS and/or visiting spacecraft that "extends" or "retracts" when docking occurs. I will post a picture of it below. The same basic system would be used here to connect both rotating units for personnel transfer, first in LEO to transfer to the ITV from a Dragon / Orion / Starliner / Dream Chaser / Soyuz, then in LMO to transfer to a descent capsule (probably a Dragon with SuperDraco thrusters to effect a propulsive soft landing on Mars), and once again in LEO or near Earth (direct entry) to depart the ITV via a capsule for splashdown in the ocean.

NASA Low Impact Docking System:

NASA DOCKING SYSTEM (NDS) USERS GUIDE

Low Impact Docking System (LIDS)

Visualize a flexible seal running around the circumference of the extensible portion of that docking mechanism, likely made from the same materials used to construct a standard space suit.

#112 Re: Not So Free Chat » Education » 2025-06-03 14:41:13

This is from NPR, back in 2006:

Figures on Chinese Engineers Fail to Add Up - by Adam Davidson, June 12th, 2006

A report cited in The New York Times and quoted on the House floor claimed China graduates nine times as many engineers as the U.S. Skeptical, a Duke professor had students check the numbers.

...Mr. REISING: We would ask deans how many colleges do you have affiliated under your name, and they couldn't tell us.

DAVIDSON: If a dean doesn't know how many colleges he's running, he certainly can't say how many engineers are graduating.

It took a while, but eventually Reising and his team came up with a number they felt was close to right. The media accounts had India graduating 350,000 engineers a year. Reising says the real number is closer to 100,000.

With India done, the team turned to China.

Mr. REISING: Universities there were simply unwilling to provide us with information. We'd get through, and they'd be like, why do you want to know this information? You know, we're unwilling to provide it. We won't even give you our names.

DAVIDSON: Pretty soon, they realized how the system works. The Chinese central government in Beijing had simply decided that 600,000 is the number of engineers they want China to graduate each year.

Vivek Wadwha...

Prof. WADWHA: Government has told the provinces that they have to graduate more engineers, and so the provinces tell the Chinese government what they want to hear.

DAVIDSON: Some universities counted every student who studied anything vaguely associated with engineering as engineers. In provinces where there just weren't enough students of any kind, the government counted repairmen and factory laborers as engineers. This forced the Duke researchers to realize they had to answer a more fundamental question. What is an engineer?

Ben Reising...

Mr. REISING: It's a difficult question, but an engineer is someone who can take technical know-how and apply innovative solutions that are going to ultimately benefit mankind.

DAVIDSON: I interview people and then I use my technical knowledge of how audio editing works, and then I benefit mankind. So am I an engineer?

Prof. WADWHA: If you were in China, you would be classified as an engineer. In India you probably wouldn't. In the U.S.A. you wouldn't be.

DAVIDSON: In the end, the Duke project came up with an estimate of 351,000 engineering students graduating every year in China. That's just over half of the 600,000 number that had gained so much attention.

So China is graduating about two and a half times as many engineers as the U.S. does, but China's population is, of course, more than four times as big. The Duke researchers said these numbers should reassure Americans that we're doing just fine. And there's even better news, Wadwha says: our engineers get a much better education.

Prof. WADWHA: This is what America's advantage is. This is why our graduates have a much better chance of competing and, you know, doing innovation and rising within the corporate world than others. Their best is as good as our best are. However, the average engineer from the U.S.A. is much, much, better than the average engineer from India and China.

DAVIDSON: Chinese engineering classes are typically in huge rooms, where a lot of students learn mechanically, by rote. Americans get a far broader education. They learn at least some art and business. They leave school with better skills. So, Wadwha says, he can't understand why so many people think China is on the verge of replacing the U.S. as the world's leading innovator.

Prof. WADWHA: We're not a little ahead of India and China, we're miles ahead of India and China. The problem with this debate is that by constantly saying that we're weak, we're bad, we send the message to our children saying that if you get into engineering you're going to lose, you're going to have your jobs outsourced, and we become bad.

DAVIDSON: The National Academy of Sciences has now changed its report to reflect the numbers that the Duke team came up with. Most media accounts now use the Duke figures.

The researchers are quick to point out that there is no cultural explanation for all of this. Many students in U.S. schools are from India and China, and they tend to do very well here. The best ones, they say, usually decide to stay in the U.S. There's so much more opportunity for great engineers here.

Adam Davidson, NPR News.

The China Academy - How Did China’s Top Major Become the Worst?

How Did China’s Top Major Become the Worst?

Taking Civil Engineering as an example, due to the limited anticipated growth in the Chinese construction industry, the admission scores for Civil Engineering majors in Chinese universities are continuously decreasing. However, the rise of the Intelligent Construction major is changing the landscape.

The implication from those articles is that some part of China's leadership has now recognized that quality ultimately matters more to society than sheer quantity. Beyond that, in the realm of true engineering there is no amount of fakery that will pass muster if your skills are put to the test. Calling a guy who can do basic electrical work an "engineer" is a bit of a stretch. By that metric, I would be considered an electrical engineer, except that I'm not and refuse to even pretend to be.

Dr Sabine Hossenfelder recently lamented that within the realm of physics, across the entire world, a lot of "scientific" publications pertain to "BS research". There's nothing particularly useful about knowing the precise speed of light to 1,000,000 digits of precision vs 1,000. If that is the sort of "scientific challenge" that your physicists are taking on these days, then whatever their true talents may or may not be, their time is being squandered on "trivial pursuits". The results from such an experiment may be of academic interest, but for all practical purposes nothing fundamentally new will be learned. We're still no closer to capitalizing on our new-found knowledge of precisely how fast the speed of light actually is.

We discovered this new miracle material named "Graphene", but we're not meaningfully closer to being able to use Graphene to construct a space elevator than when Geim and Novoselov first isolated that material 21 years ago at Manchester University. Do we now possess vastly more economical methods for making Graphene today vs 21 years ago? Absolutely. What we presently know is still nowhere near "good enough" for a functional space elevator using Graphene support cables. Quite a number of programs and research fellows from around the world, including China, have all contributed to humanity's Graphene knowledge base since then, but it remains a highly specialized material with few applications where the extreme cost of making it can be justified.

Is the next discovery, regardless of where it happens, poised to overturn the present paradigm and enable this miraculous new material to shine where previous efforts failed to produce the material in the quality and quantity required for Graphene macro structures?

Possibly, but I wouldn't hold my breath waiting for that to happen.

The point worth remembering would be that a slew of inter-disciplinary innovations are required to construct something like a space elevator. You can throw as many people as you like at solving that problem, but it doesn't mean any particular scientific / engineering innovation problem will get solved any faster. There is also an opportunity cost associated with pouring all your brightest young minds into Graphene innovation. Other potential solutions get ignored out of necessity, to focus on a single challenging problem.

What if someone figured out how to economically generate enough thrust to accelerate upwards, without using reaction mass or megastructures, while all of your engineers were tinkering with Graphene space elevators?

A large part of that awesome research effort immediately became a waste of money, intellectual capability, and irreplaceable time. The Chinese communist government is prone to repeatedly making such mistakes, because that's a "feature" of central planning. When most of what you do is dictated by the political power structure, and you incessantly prioritize maintenance of that power structure over true innovation or useful engineering projects with coherent national priorities, as opposed to "just doing something to give your engineers something to do", you go from "Civil Engineering Is Everything" to "we're no longer attracting students because we have nothing for that many Civil Engineers to do that is minimally useful to society". That's why they built multiple "fake cities" where nobody could actually live.

#113 Re: Not So Free Chat » Education » 2025-06-03 13:22:05

tahanson43206,

Why China's Universities Are Ditching Their Engineering Programs

Question posed to Google AI:

Why has China been unable to capitalize on its engineering graduates?

Answer:

While China graduates a large number of engineers annually, several factors hinder the country from fully capitalizing on this talent pool. These include: the quality of engineering education, limited opportunities for innovation, and a preference for state-sector employment among graduates.

Quality of Education:

Some surveys suggest that engineering graduates may lack basic knowledge and critical thinking skills, hindering their ability to innovate and contribute to cutting-edge research.

Limited Innovation Environment:

China's entrepreneurial environment, with its emphasis on state-owned enterprises, may discourage graduates from pursuing innovative ventures in the private sector.

Preference for State Sector:

Many accomplished graduates prefer working in the state sector due to the stability and security it offers, potentially diverting talent away from the private sector and innovation.

Political and Corporate Culture:

The political climate and the grinding corporate culture in China can also discourage innovation and limit the ability of engineers to fully contribute their skills.

Global Competition:

China's engineering graduates face intense competition from a large talent pool, both domestically and internationally.

Lack of Cross-Disciplinary Training:

Engineering graduates may lack the breadth of training across disciplines, which could limit their ability to contribute to interdisciplinary research and innovation.

Focus on Specific Fields:

While China produces a large number of engineers, the focus on specific fields like infrastructure and manufacturing may limit the diversification of innovation across different sectors.

Inadequate Infrastructure:

The lack of robust infrastructure to support innovation, such as research and development facilities, may also limit the ability of engineers to fully utilize their potential.

#114 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-02 21:07:10

Moon to Mars (M2M) Habitation Considerations A Snap Shot As of January 2022

The following NASA Technical Memorandum (TM) is intended to provide a snapshot in time of NASA’s current considerations (ground rules and assumptions, functional allocations, logistics) for habitation systems for the lunar surface (non-roving) and Mars transits. As NASA continues to refine the reference designs to meet the needs of an evolving architecture, it is expected that this information will also be updated as a result. Where appropriate, relevant publicly released documents will be referenced to provide further detail.

#115 Re: Not So Free Chat » Education » 2025-06-02 20:24:06

Edit:

Post removed because it was accidentally placed in the wrong topic.

Edit #2:

One aspect of education within a freer capitalist system is that it doesn't enforce quite as rigid adherence to a doctrinal curriculum as a communist system. The ultimate benefit from freer expression of thought is innovation rather than engineering of solutions only. If test scores alone were reflective of a much more complex depiction of knowledge vs intelligence, then the most industrious students ought to be your best innovators as well. While the willingness to work harder is commendable, the ability to work smarter always pays greater dividends over time. As important as industriousness and engineering are to a well-functioning society, absent new inventions every society will ultimately stagnate and fall behind as those existing solutions, however well refined, are replaced by entirely new ways of doing things.

With all the engineers and high test scores that Chinese society has produced over the past 25 years, for example, why is it that there are only "fake cities" where nobody can actually live in China?

Here in America, if you were to propose building entire cities, complete with block after block after block of high-rise apartment complexes with no running water or doors that lead to a wall, even the high school educated workers here in America, with below average test scores, would look at someone who wanted them to do such a thing and say, "Nah, we're not doing that." There is no point to them risking life and limb to erect an entire city that nobody could ever live in. Every Chinese person residing in their cities could and should live in opulent luxury, if only they'd built buildings and indeed entire cities designed for people to live in. The scale of that activity indicated that standing armies of architects, engineers, and construction men were absolutely required to make 10+ story structures that would stand at all, yet all those people never said to themselves, "This is entirely pointless and we're not going to do this because it's nuts."

What was the point of all that phenomenal education if it was never allowed to be used for something half-way intelligent?

Building an entire city for nobody would be more soul-crushing than my financiers telling me that my building was ugly. Even if I was greatly enamored with my artistic flair, I could still create buildings in a style pleasing to someone else rather than myself. What must it be like to build something that nobody outside of the construction crew will ever see?

How do you explain what you do to your children?

Well, son, I design entire cities that exist only so that everyone, from the local party leadership to the investment bankers to the newest construction man, can obtain "appreciation valuation" from them, on the premise that what we built will increase in value as "developed real estate", even though nobody could ever live there, and deliberately so. Highly educated men and women collectively decided that this is what we would do with our invested time and money, rather than improving our own lives and your life by using our education and ingenuity to create higher quality buildings to house the people we do have.

Western society is not without such absurdities, just to be crystal clear on this matter. We flare off natural gas at the well head because when said gas is contaminated with Sulfur, for example, it's not permitted to be pumped to a gas-fired turbine to be converted to electricity. The pollution will still be generated, but nobody benefits at all from its creation. If that sounds idiotic, that's because it is and everyone outside of government recognizes it as such. The difference is that this sort of activity is solely the machination of governance policy. Business and industry never had a "get together" where they all agreed to uselessly burn natural gas. They were forced into that position by their own government. They would much rather sell that somewhat contaminated gas, perhaps at a reduced price since it's less desirable, and then someone gets their lights turned on at a price they can afford. When wells are drilled, they cost a lot of labor, materials, and therefore money, which means we want to maximize the benefit of each well. Nobody deliberately drills for contaminated gas. The problem is that you never know what you're going to get, but you have to make the investment to find out.

I knew a petroleum engineer from the Middle East who complained that while he was drilling wells in Africa, the local government priced the gas out of the ability of the locals to pay for it, even though selling it to them was the cheapest and easiest way to exploit it, and would maximize its potential benefit to society. He had natural gas in his room to cook meals with, but the locals had to continue burning dung. As an engineer, he viewed liquefying the gas to ship it all the way to Europe or America or Asia as stupid, pointless, and wasteful, largely because Europe could afford to drill its own local wells, but refused to do so out of some sense of "not in my backyard".

These examples were intended to illustrate why there must be something beyond paper that determines the true value of education and its benefits to a society. I'm not a philosopher, so I lack the education to determine what that value proposition should be, but someone ought to make the case for why we should or should not focus on test scores, and then periodically reexamine whether or not the theory agrees with the results.

#116 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-02 20:17:37

A high level slide deck to go along with the document from Post #12:

Transit Habitat Concept and Mars Analog in Cislunar Orbit

#117 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-02 20:10:52

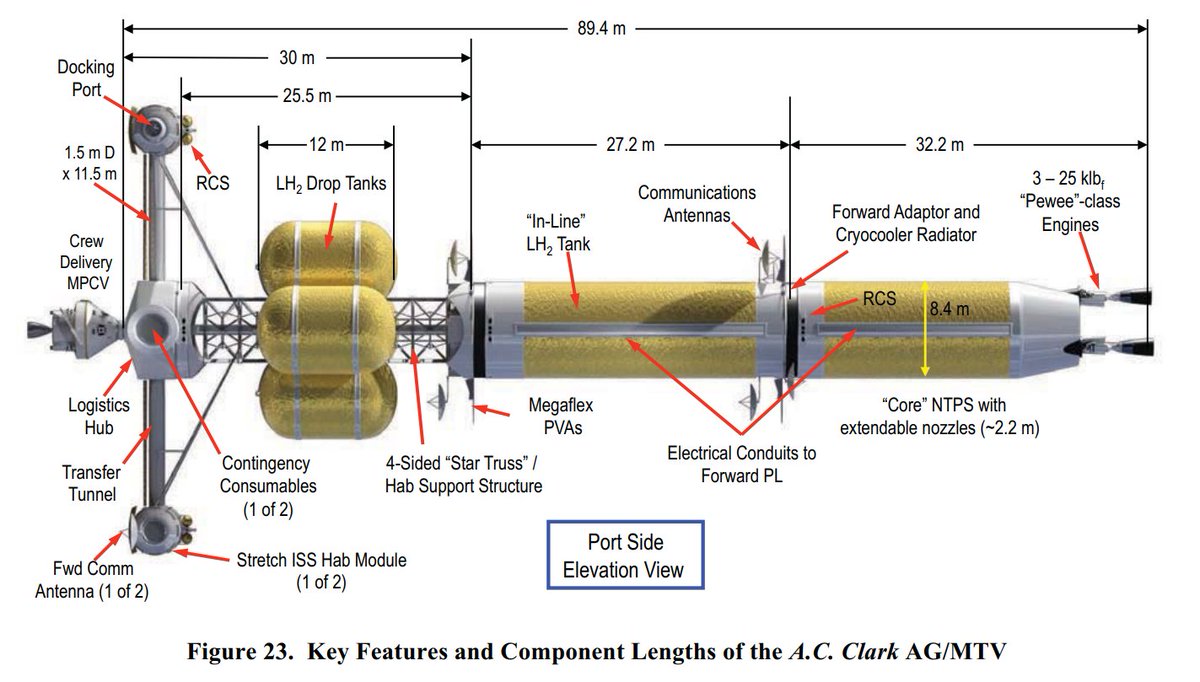

One of the most recent refinements to NASA's ITV design:

NASA’s Moon to Mars (M2M) Transit Habitat Refinement Point of Departure Design

Abstract

As NASA prepares for the next human footsteps on the lunar surface, the Agency is already looking ahead to systems that will enable a sustained human presence on the lunar surface and mission to Mars, including a lunar Surface Habitat (SH) and Mars Transit Habitat (TH). This paper describes the latest NASA government reference design for the TH and how it will support NASA's Moon to Mars human exploration architecture. First, it will serve as a test and demonstration platform in lunar orbit, demonstrating capabilities required for long-duration microgravity human spaceflight as part of the lunar-Mars analog missions. Then, the TH will serve as a major Mars exploration element to support crew habitation during their transit from the Earth’s orbit to Mars and returning safely before TH’s return to a lunar orbit. This paper will cover several considerations contributing to the latest habitat design refinement, including the TH's concept of operations, system functional definition, subsystem assumptions, notional interior layouts, a detailed mass and volume breakdown, and identify future trade studies and analyses required to close identified technology/development/architecture gaps.

#118 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-06-02 18:19:52

Imagine one of these ships had 2 sets of habitation modules rotating in opposite directions:

NASA has already designed the simplified form of it with 1 pair of rotating habitation modules rotating in 1 direction. Imagine that we had a second pair rotating in the opposite direction.

Please see Page 8 of this document (look at the actual page numbers on the page, not what the PDF says the page number is):

Conventional and Bimodal Nuclear Thermal Rocket (NTR) Artificial Gravity Mars Transfer Vehicle Concepts

AN ARTIFICIAL GRAVITY CONCEPT FOR THE MARS TRANSIT VEHICLE

The "Lunar Gateway" has been designed as a purpose-built Mars Transit Vehicle that also happens to be a good miniature space station:

Gateway Lunar Habitat Modules as the Basis for a Modular Mars Transit Habitat

I've heard lots of people claim that the Lunar Gateway is a waste of time and money, yet NASA is quite literally designing and building it to support a crew of 4 people for up to 500 days in deep space without resupply, possibly more, use nearly closed-loop life support that doesn't hog too much electrical power or generate too much heat, have a solar storm shelter, incorporate artificial gravity, and use a combination of chemical and electric propulsion so as not to blow out the mass budget. It's an Exploration class ITV by any other name, but wait... They actually call it that, because that's what it's really designed to do.

People like Dr Zubrin make a claim that goes like, "Hey, you know, with your capsule and my lunar lander and their giant rocket, we could do a lunar mission." However, these people are talking to each other, via systems engineers, and there are systems integration engineers who take all the bits and pieces and transform them into coherent working vehicle designs with specific missions in mind.

This long duration interplanetary transport vehicle concept dates back more than 20 years. That's how long NASA has wanted to build it, but didn't have the budget, or the giant rocket, or various other important bits like closed-loop life support that is durable and reliable. We're almost there now.

#119 Re: Life support systems » Cast Basalt » 2025-06-01 00:31:46

Someone actually sells a Basalt "moon dust" simulant for 3D printing experiments:

Matter Hackers - The Virtual Foundry Basalt Moon Dust Filamet - 1.75mm (0.25kg)

The Virtual Foundry's Basalt Moon Dust Filamet™ is a unique 3D printing filament and pellet, composed of 60-62% basalt, designed to replicate the moon's surface. Compatible with any Fused Filament Fabrication 3D printer, it requires minimal equipment for easy printing. Less hygroscopic than PLA, it offers advanced properties and becomes 100% basalt when sintered, with minimal warping. Ideal for lunar construction research and similar applications.

#120 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-05-30 01:06:19

GW,

Your complaints about Elon Musk, President Trump, and DOGE are duly noted, but this topic is about ITV technologies for exploration class Mars missions. There is a political topic for politics.

I think space tugs and propellant depots are a splendid idea, but again, this topic is about ITV technologies. I think we're already in agreement that all real exploration missions thus far have begun in LEO. At this point, I would be pleased with any crewed mission to another planet, including a fly-by mission, irrespective of what orbit it starts in or what propulsion tech it uses. A fly-by mission would at least demonstrate that our ITV is mission capable and that the crew is no worse for wear after the mission.

Propulsion is obviously a very important part of making all interplanetary missions possible, and I understand your affinity for those systems, which I share, but do you have any specific ideas on how we can create a more practical long-duration in-space vehicle to carry exploration crews to Mars?

I've already mentioned some of my ideas:

1. Counter-rotation sidesteps the precession and loss-of-control issues with artificial gravity.

2. Composites fabricated by robotic tape winding machines can create durable yet light vehicle hulls with excellent volume-to-mass ratios.

3. SEP, storable chemicals, and CMGs can provide adequate in-space propulsion and maneuvering after achieving escape velocity using already available cryogenic chemical propulsion upper stages.

Maybe we'll have ZBO tech perfected in another 5 to 10 years so that long duration on-orbit storage of cryogens becomes a more practical proposition. ZBO tech development began over 25 years ago. I think the required insulation tech is ready to apply, but not much else is. My understanding is that insulation alone, and perhaps some modest cryocooler hardware to re-liquefy boil off that is small compared to LH2, is sufficient for LOX and LCH4. Unfortunately, NASA is dead set on using LH2. I understand the potential benefits of LH2, and they would be compelling if the daily loss rates weren't so high. Thus far, long-term LH2 storage has proven to be a very tough nut to crack.

4. Rather than trying to store absolutely everything because we're afraid of equipment failures, capitalize on the excellent progress being made on low-temperature / low-power / high reliability CO2 scrubbers and water filtration systems. I see these technologies as "de-risking" the mission, rather than adding risk. Highly efficient air and water recycling is a mission enabler. We already spent the money to develop this tech to a very high degree of reliability and readiness, so we may as well use it. The mass reduction from water recycling alone is key. IWPs provide a very consistent 98% water recycling rate. CO2 recycling would provide another significant mass reduction.

There are near room temperature processes for CO2 splitting that leave pure elemental Carbon dust floating on top of Gallium metal as the Gallium strips the O2 from CO2. An electrostatic attraction process could remove the Carbon dust for storage, although even mechanical separation methods appear to work. Heating the metal to 500C to 700C in a vacuum is sufficient to release 2 of the 3 Oxygen atoms. Hydrogen gas will strip the remaining Oxygen, producing water and Gallium metal. By the end of the mission we have 546kg of elemental Carbon from the respiration of 4 crew members. Human feces is 40% to 55% Carbon, so an additional 200kg to 275kg of Carbon could be recovered. This recovered Carbon is one of the important precursor materials for synthetic fibers, plastics, and rubbers.

5. Use the most modern avionics suite and sensors we have, because the reductions in power consumption and heat rejection are incredible. Power-over-fiber combined with optical chips is a radiation hardened by design computing environment. Some researchers here have already built a fully optical computer that performs all the necessary logic functions of legacy Silicon-based CPUs, to include RAM, but it does this with a 100GHz clock rate. Some simpler designs are operating at up to 800GHz. That's 200 to 300 times faster than Silicon-based CPUs. Even a very modestly capable CPU with a clock rate that fast can still perform the same functions as more sophisticated Silicon-based chips. The result is orders of magnitude power reduction.

Photonic crystal displays can render painting-on-paper quality colors. In the tech world, there's a recently established "fetish" for near-infinite color range and quality that looks as if the image was inked onto a piece of paper, even though it's been rendered onto a clear piece of glass.

Optical computers have been tested using specialized lasers and particle accelerators to withstand radiation doses in the range of tens of megarads, a near-instant lethal dose for the crew. There's even been some talk of putting optical computers inside the cores of fission reactors, or at least ones operated at steam generating temperatures, as integrated monitoring and control systems. That makes them highly resistant to both damage and upset from normal radiation fluence ranges found in space.
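The 546kg Carbon figure in item 4 above can be sanity-checked with simple stoichiometry. This is only a sketch: the post does not state the exhalation rate or mission length, so the roughly 1kg of CO2 per person per day and the 500 day mission used here are my assumptions, chosen because they land close to the quoted number.

```python
# Sanity check of the ~546 kg carbon figure from crew respiration.
# ASSUMPTIONS (not stated in the post): about 1.0 kg of CO2 exhaled per
# person per day, and a 500-day mission.

M_C = 12.011    # g/mol, carbon
M_CO2 = 44.009  # g/mol, carbon dioxide


def carbon_from_respiration(crew, days, co2_kg_per_person_day=1.0):
    """Mass of elemental carbon (kg) contained in the crew's exhaled CO2."""
    carbon_fraction = M_C / M_CO2  # roughly 0.273 kg of C per kg of CO2
    return crew * days * co2_kg_per_person_day * carbon_fraction


if __name__ == "__main__":
    print(f"{carbon_from_respiration(4, 500):.0f} kg of carbon")
```

Changing the mission length or the per-person CO2 rate scales the result linearly, so the same function covers shorter fly-by profiles.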

#121 Re: Human missions » Starship is Go... » 2025-05-29 08:00:38

I think the most expedient solution will be to transplant the RCS thrusters from Dragon into Starship. The volume of the propellant and pressurant tanks can be increased to deliver an appropriate Delta-V margin for use with the much larger Starship. Anything beyond that is nice to have but not required for testing.

#122 Re: Interplanetary transportation » Miniature ITV for Mars Flyby and Exploration Missions » 2025-05-28 17:00:25

I think we should pursue a modified minimum risk mission that uses existing air and water recycling technologies that we already paid through the nose to develop:

Minimum Risk Deep Space Habitat and Life Support

I think the technological risk associated with using regenerative life support is greatly over-played. ISS wouldn't have been habitable for 20+ years if the tech didn't work.

The next generation life support tech, specifically the Amine Swingbed CO2 Scrubber and Ionomer Waste Water Processor, has been quietly doing an even better job aboard ISS, using a lot less power and suffering fewer system casualties, for over 2 years now. These systems are much closer to closed-loop, drastically lighter / more compact, and they've had very few teething problems. If the ionic liquid CO2 scrubber experimentation pans out, the total amount of power both systems consume will be under 1kWe for a crew of 4. The total power requirement should be 2.5kWe or less. That means photovoltaic array deployment mechanisms are not required. Waste heat management radiators can be fixed / hull-integrated designs as well.

600W - Counter-Rotation Motors (CRMs)

400W - Skylab Control Moment Gyros (for 8 CMGs at normal operating rpm)

Source:

[url=https://ntrs.nasa.gov/api/citations/19790007076/downloads/19790007076.pdf]NASA TM-78212 - 25 kW POWER MODULE UPDATED BASELINE SYSTEM - December 1978 - Page Labeled 39 in lower right-hand corner[/url]

800W - Carbon Dioxide Removal by Ionic Liquid Sorbent (CDRILS) System

Source:

Scale-up of the Carbon Dioxide Removal by Ionic Liquid Sorbent (CDRILS) System

Novel Liquid Sorbent CO2 Removal System for Microgravity Applications

60W - Ionomer Waste Water Processor (Direct, Single Cycle, or Dual Cycle)

Development of Ionomer-membrane Water Processor (IWP) technology for water recovery from urine

Demonstration of a Full Scale Integrated Membrane Aerated Bioreactor- Ionomer-Membrane Water Purification System for Recycling Early Planetary Base Wastewater

140W - Avionics / flight computers, electronics, communications, sensors (redundant mobile chips using power-over-fiber sensors)

2kWe is a very modest amount of power for 4 crew members. That can be supplied by hull-integrated thin film arrays. Additional power can be stored in Lithium-ion batteries.
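For anyone who wants to check the arithmetic, the line items above can be totaled in a few lines. The wattages are the ones listed in this post; the dictionary keys and function names are just labels I made up for the sketch.

```python
# Power budget for the 4-crew ITV, using the wattages listed in the post.
# Keys and function names are illustrative labels only.
POWER_BUDGET_W = {
    "Counter-Rotation Motors (CRMs)": 600,
    "Skylab Control Moment Gyros (8 CMGs)": 400,
    "CDRILS CO2 removal": 800,
    "Ionomer Waste Water Processor": 60,
    "Avionics, comms, sensors": 140,
}


def total_power_w(budget):
    """Sum of all loads, in watts."""
    return sum(budget.values())


def life_support_power_w(budget):
    """CO2 removal plus water processing only, in watts."""
    return budget["CDRILS CO2 removal"] + budget["Ionomer Waste Water Processor"]


if __name__ == "__main__":
    print(f"Life support: {life_support_power_w(POWER_BUDGET_W)} W")
    print(f"Total:        {total_power_w(POWER_BUDGET_W)} W")
```

The life support subtotal stays under the 1kWe mentioned earlier, and the total matches the 2kWe figure with margin against the 2.5kWe ceiling.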

#123 Re: Human missions » Starship is Go... » 2025-05-28 12:03:56

GW,

I agree with the part about SpaceX's engineers needing "hardware they can hold in their hands". At this point, an actual recovered Starship would be worth more than all the telemetry data in the world. The younger generations think a computer can tell them everything, if only there's enough data. At the point that you're able to fully simulate physical reality, that might be true, but at that point you're no longer modeling anything and then using real world testing to confirm what the model tells you. They're probably swimming in data, but none of that is telling them what they need to know.

We cannot accurately predict the weather more than a few days out for the same reason. By the time the full suite of variables is incorporated into the "model", it's no longer a model, rather a "high-fidelity simulation of physical reality". The computing power to accurately simulate physical reality is many orders of magnitude beyond even complex modeling. Models become incredibly useful when they can tell you how to bound a problem and accurately predict a range of plausible real world outcomes. Unfortunately, models will never be an acceptable substitute for the complexity of physical reality, until they simulate reality, and then they're far less useful as models. Even if you had the computing power, if the model can give you most of the answer in a fraction of the time, there's little benefit to simulating reality.

I would tell people to model to within 10% of what reality ought to be, and then use experimental verification to confirm that the model accurately predicts physical reality. In supply chain management forecasting, we use models in this way, run a handful of simulations to "what-if" analytical engine results when the results look questionable (the "experimental verification" that what the larger / more complex model spit out is actually usable data), and then adjust forecasts accordingly. After the actual results are in, re-adjust the inputs fed into the analytical engine, rinse-and-repeat. We frequently have to use human knowledge and experience to accurately predict what input data alone could never convey to a computer program. Forecasting the materials consumed drilling for oil is a good example. Sometimes the computer program does a better job of forecasting than the human forecasters do, and sometimes the computer's results are so far off the mark that we'd be doomed as a company if we had to rely on computer output alone. Human experience and judgement complements machine speed and precision. You must have both. Solely relying upon one or the other doesn't work very well in the real world. There's simply too much complexity to try to simulate every aspect of business or complex engineering exercises.

#124 Re: Human missions » Starship is Go... » 2025-05-28 08:47:53

Starship Flight 9 delivered a smooth ride to orbit aboard a flight proven booster. All indications were that the performance of the Raptor engines was nominal. The Starship itself did not "blow up", so at this point the issues with the main propulsion system seem to be reasonably well sorted out.

SpaceX attempted an extreme AoA "broadsiding" maneuver with the booster, which failed. Their simulations told them this was the most likely outcome, but they needed to confirm this through real world testing to obtain flight data in agreement with their simulations.

Starship itself still suffers from severe propellant leaks somewhere in its plumbing, quite likely due to excessive thermal load. Both the Super Heavy Booster and Starship intentionally vented some propellant after the Raptor burn was completed, earlier in their respective flights, which did not appear to cause a problem. However, Starship suffered another propellant leak later in the mission.

Starship needs an effective RCS system to prevent loss-of-control from propellant venting. GOX / GCH4 would be pretty good candidate propellants since there's clearly an over-abundance of that stuff in the propellant tanks causing leaks.

There appears to be frozen condensation forming inside Starship's cargo bay, likely from the cryogenic propellant tanks below, or a propellant leak, which may have caused the payload bay door malfunction that precluded deployment of StarLink satellite simulators.

Edit:

I think Starship needs Spray-On Foam Insulation (SOFI) on the parts of the vehicle not covered by TUFI tiles, even though that will cost more and add more weight.

#125 Interplanetary transportation » Long Duration LH2 Propellant Storage Challenge » 2025-05-26 04:21:35

- kbd512

- Replies: 4

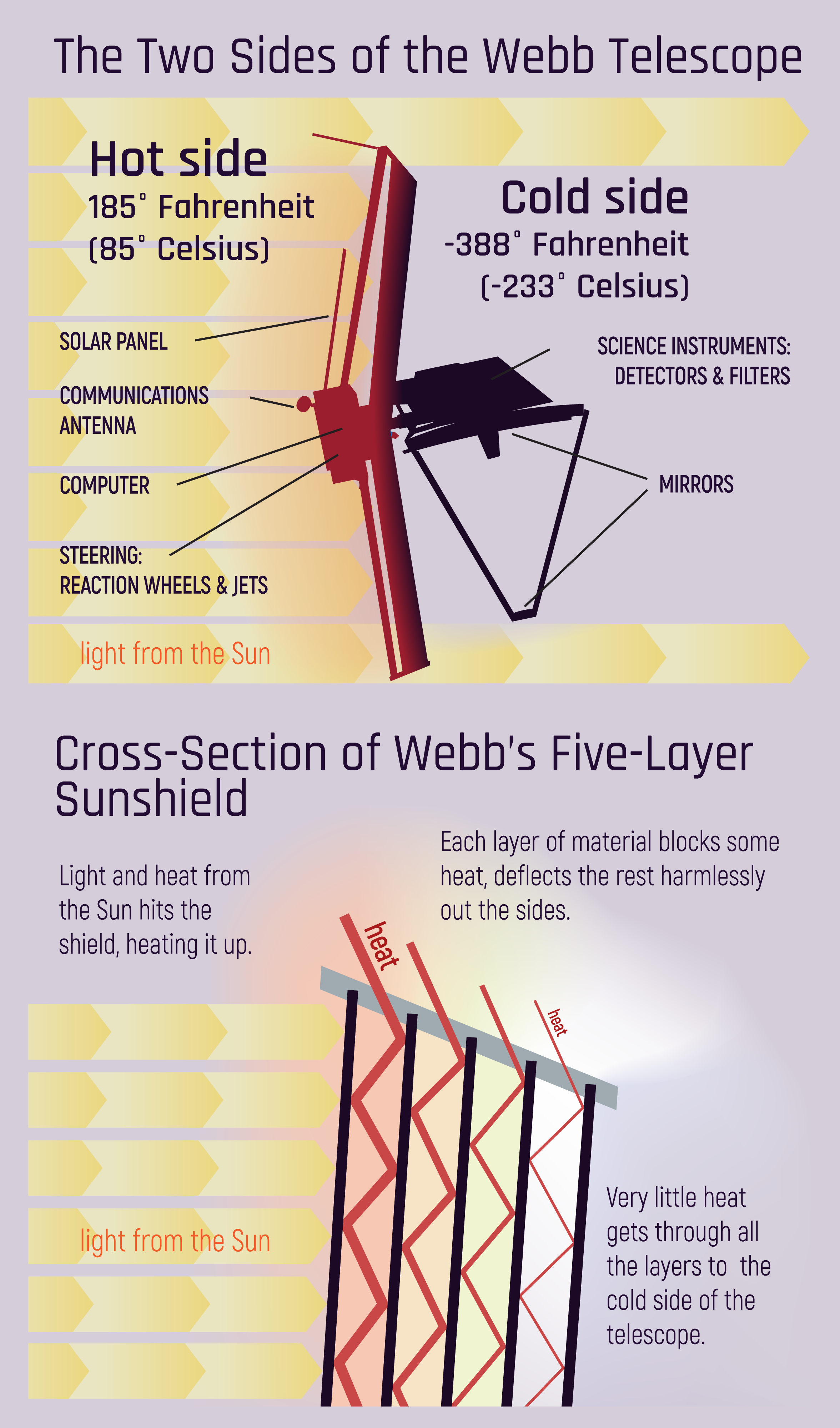

During our Sunday meeting, tahanson43206 asserted that the Sun shield technology created for the James Webb Space Telescope (JWST) would enable passive long duration storage of hard cryogens such as LH2, apparently based upon the belief that the Sun shade was able to passively keep a scientific instrument cooled to 7K. This is not accurate, according to NASA, but he asked me to write a post to pose the question to The New Mars Forums readership to evaluate how closely a similar Sun shade system would enable us to come to passive LH2 storage.

Webb's Sunshield - NASA Science

My assertion or opinion is that trying to use JWST as a proxy or analog for the kind of cryogenic cooling systems (passive and active) required for long duration LH2 storage for a crewed Mars mission ignores the problems of location (LEO vs L2), scale (many tons of propellant vs mere kilos of LN2 and LHe for scientific instruments), and mass (because ALL rocket stages need to minimize structural mass and maximize propellant mass). I don't consider that last point to be debatable in any real sense due to "The Tyranny of the Rocket Equation".

However, I'm not content with merely illustrating that JWST does not actually keep the operating temperatures of shaded instruments at or below the 20K required by LH2, using passive means only. That's not an intellectual argument which demonstrates any fundamental underlying truth about the validity of the idea of long duration LH2 storage propulsion stages using some combination of passive and active cooling to provide a meaningful payload performance improvement for an in-space propulsion stage, relative to softer cryogens such as LOX/LCH4 or LOX/RP1. I consider a meaningful payload performance improvement to be 10% or greater. If we can achieve a 10% greater "throw weight" to the moon or Mars or other destination in the solar system, provided the solution is not absurdly expensive, complex, or delicate, I would chalk that up as a major "win" for LH2 or any other technology that does the same job. If the "throw weight" advantage is less than a 10% improvement, then we need scrupulous evaluation of cost. Is a 5% payload performance improvement worth the cost? The answer to that question, as with most trade-offs, is, "it depends". Regardless, engineering over ideology, forever and always.
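To make the 10% "throw weight" test concrete, here is a minimal sketch using the ideal rocket equation for a single in-space stage. Every number in it is an illustrative assumption on my part, not a figure from this post: the Isp values, the departure Delta-V, and especially the dry mass penalty assigned to LH2 tankage plus ZBO hardware. Treat it as a way to frame the trade, not as an answer to it.

```python
import math

G0 = 9.80665  # m/s^2, standard gravity


def payload_mass(initial_mass, delta_v, isp, dry_per_propellant):
    """Payload for a single stage, in the same units as initial_mass.

    initial_mass:       stage dry mass + propellant + payload at ignition
    delta_v:            required velocity change, m/s
    isp:                specific impulse, s
    dry_per_propellant: stage dry mass per unit of propellant carried
    """
    final_mass = initial_mass / math.exp(delta_v / (G0 * isp))
    propellant = initial_mass - final_mass
    dry_mass = dry_per_propellant * propellant
    return final_mass - dry_mass


def payload_gain_percent(candidate, baseline):
    """Percent payload improvement of candidate over baseline."""
    return 100.0 * (candidate - baseline) / baseline


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    m0, dv = 100.0, 3600.0  # tonnes at ignition, m/s
    lch4 = payload_mass(m0, dv, isp=370.0, dry_per_propellant=0.06)
    lh2 = payload_mass(m0, dv, isp=450.0, dry_per_propellant=0.12)
    gain = payload_gain_percent(lh2, lch4)
    verdict = "meets" if gain >= 10.0 else "misses"
    print(f"LOX/LCH4 payload: {lch4:.1f} t, LOX/LH2 payload: {lh2:.1f} t")
    print(f"LH2 gain: {gain:.1f}% ({verdict} the 10% bar)")
```

The interesting knob is dry_per_propellant for the LH2 case. Raising it to account for more insulation, cryocoolers, radiators, and power hardware shows how quickly the Isp advantage can be eaten by added dry mass.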

Use of effective lightweight thermal insulation and vacuum between layers is at least a partial solution, insofar as multi-layer Mylar insulation is very light and dramatically reduces the thermal load from direct solar radiation from the Sun or re-radiation from the Earth (rejection of solar radiation back into space), onto the cryogenic propellant storage tanks. Unfortunately, as we're about to discover, passive Mylar insulation alone is insufficient to achieve the 20K storage temperature LH2 requires to remain liquid. Achieving 20K temperatures in the inner solar system requires more layers of Mylar than JWST used and power-hungry cryocoolers, photovoltaic panels for electrical power to run the cryopumps, pump motors, heat exchangers, and cryoradiators (chemically milled or 3D printed monolithic Al-1350 alloy with 99.999% purity, which is what JWST used), all of which adds significant dry mass and cost to LH2 propulsion systems with so-called Zero Boil Off (ZBO) technology incorporated. If we can somehow justify the added mass and expense with a substantial payload performance advantage, then it might still be worth it.
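As a rough feel for why residual heat leak matters, the sketch below converts a steady heat leak into a daily boil-off mass using latent heat of vaporization. The latent heats are approximate textbook values near each propellant's normal boiling point, and the heat leak is a made-up input. The sketch does not model how large the heat leak actually is for a given tank, insulation, or orbit, and that part is the hard problem, since a 20K LH2 tank is both colder and much larger for the same propellant mass than a LOX or LCH4 tank.

```python
SECONDS_PER_DAY = 86_400

# Approximate latent heats of vaporization near the normal boiling point, J/kg.
LATENT_HEAT_J_PER_KG = {
    "LH2": 446e3,
    "LOX": 213e3,
    "LCH4": 510e3,
}


def boiloff_kg_per_day(heat_leak_w, propellant):
    """Propellant mass boiled off per day by a steady heat leak (watts)."""
    return heat_leak_w * SECONDS_PER_DAY / LATENT_HEAT_J_PER_KG[propellant]


if __name__ == "__main__":
    heat_leak_w = 50.0  # hypothetical net heat leak into the tank
    for name in LATENT_HEAT_J_PER_KG:
        print(f"{name}: {boiloff_kg_per_day(heat_leak_w, name):.1f} kg/day")
```

Any watt that the insulation does not stop has to be either removed by a cryocooler or paid for in vented propellant, which is the trade the rest of this post is about.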