New Mars Forums

#401 2024-09-05 07:50:41

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Re: Why the Green Energy Transition Won’t Happen

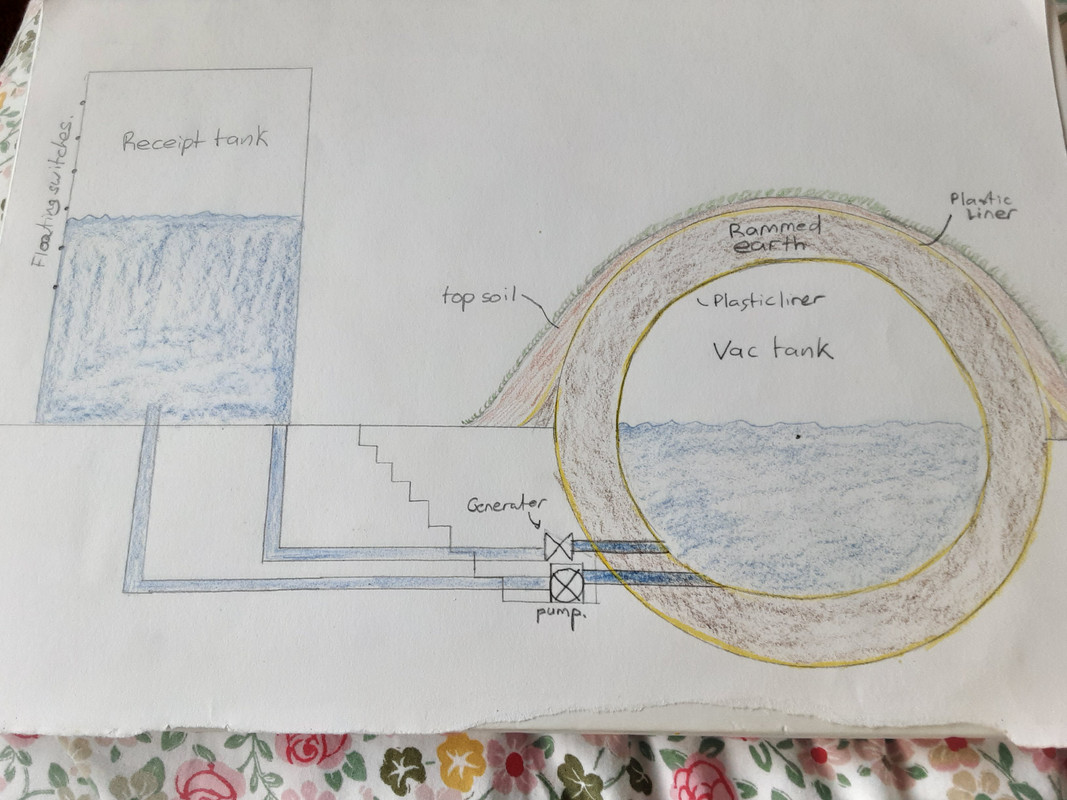

Very simplistically, maybe something like this:

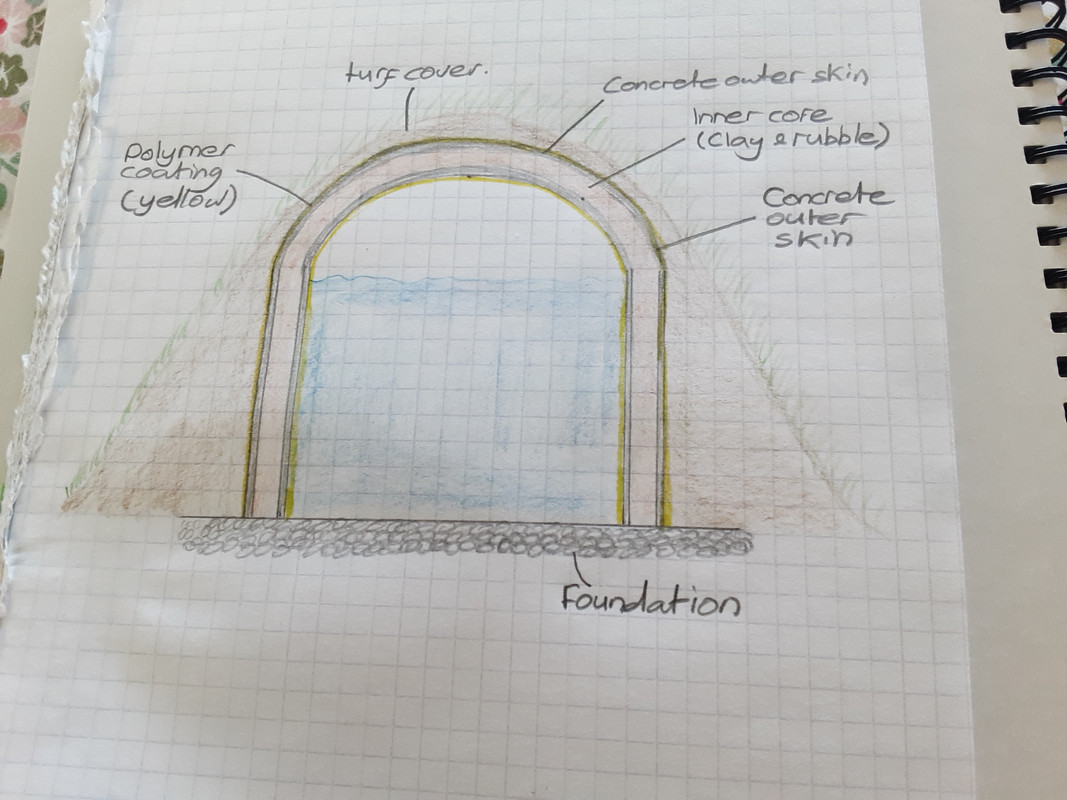

Or maybe this for the vac tank, using concrete with a clay and rubble filling.

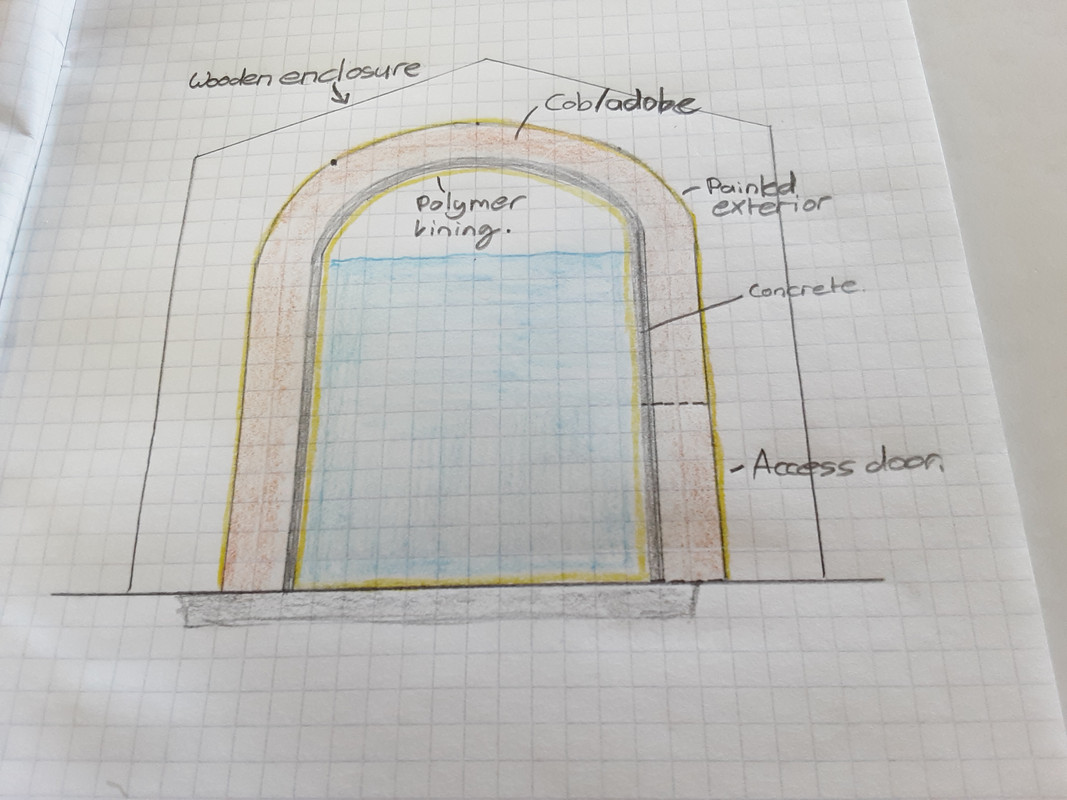

Or maybe the tank can sit within a weatherproof enclosure, like a shed. This ensures that the clay providing the compressive strength is kept dry. You may notice that I am trying to use as little concrete as possible, as the tank needs to be cheap.

Pumping water instead of air allows greater energy efficiency overall, because water is an incompressible medium. Pumping water therefore minimises heat generation. But it also presents a problem, as concrete, cob, rammed earth and adobe all lose a great deal of compressive strength when wet. So a good waterproof lining is important.

Last edited by Calliban (2024-09-05 08:39:26)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."

#402 2024-09-05 13:38:19

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Calliban,

We've seen steel compressed gas cylinders used for welding fail during highway crashes. They don't blow entire vehicles hundreds of feet into the air. They can't generate enough force to do that. Heck, even fuel-air explosions with gasoline and natural gas don't do that. Lithium-ion battery explosions don't do that, either. 10 feet, sure. 50 feet, maybe. Some of the most powerful high explosive detonations can toss a vehicle or a heavy chunk of metal like the engine block that far, but they tend to rip the vehicle apart, which is worse in some ways. Let's not wildly exaggerate any particular danger. A Lithium-ion battery fire is not going to burn a hole through the center of the Earth (China Syndrome), nor will a nuclear reactor meltdown ever do any such thing. The cap or valve on a compressed gas cylinder might get blown that far from the vehicle, but the entire vehicle is not going to be launched into the air, Hollywood style, unless it's done with high explosives. Granted, the energy release from any of these energy stores is fairly spectacular, but it's also a relatively rare event (ignoring severe quality control issues with Chinese products), and we are in fact already running H2-powered vehicles on American highways, laden with highly combustible Hydrogen gas pressurized to 700 bar.

On the issue of "range anxiety", I think the "real anxiety" revolves around the myriad of other related issues with Lithium-ion batteries, such as waiting 1 to 2 hours for the battery to recharge on a "fast charger", the fact that the vehicle cannot be left unattended during that process, and all the other supposed benefits which turned out to be pure marketing drivel not tethered to the practical operation of an EV as most people use them. No significant planning process is required to use a vehicle that can be refueled in a matter of minutes.

Vehicles made in the 1970s and earlier frequently had a range of only about 200 miles if they had large-displacement V8 engines or poorly tuned carburetors and ignition systems. Some burned so much fuel that you could literally see the fuel gauge needle rolling back while the engine was running. If "range anxiety" wasn't a serious issue back then, it was because there was a gas station every 50 feet and you could refuel your car in about 5 minutes for a reasonable price. You can still do that with compressed air. You get your 200 miles and then you can fill the tank up again in less than 10 minutes.

For EVs, if you recharge at night from the convenience of your own home, then in most cases you're not drawing power from renewable energy. Unfortunately, this is when it's most convenient to recharge the car, because grid demand and prices are low and you're not using the car. You cannot put your car in your garage, plug it in, and then go to sleep while your car recharges. If anything goes wrong, your house might burn down if you're not present to unplug the vehicle. EVs typically quit functioning entirely if they have an internal electrical short somewhere that the computer detects, because the computer then turns off the car, so the vehicle cannot be moved to a safer spot where a vehicle fire might be inconvenient but not life-threatening. Worse still, serious failures during operation frequently leave you trapped in a car that might go up in flames, due to "electronic everything", which includes the stupid door handles. Someone needs to accept that not everything can or even should be electronic. Door handles are one example of a device that should remain mechanical forever.

If you routinely fast charge the car, doing so rapidly degrades the battery capacity, decreasing its useful service life and increasing the risk of a thermal runaway that destroys or seriously damages the entire car. If some cell or electronic component within the battery pack fails, you have to replace the entire battery pack. If minor damage occurs during an accident, then the insurance company typically totals the vehicle. An accurate mechanism to evaluate true vs superficial or repairable damage doesn't seem to exist.

If a pressure regulator valve fails in a compressed air car, you don't have to replace the air tank, which is the most expensive part of the propulsion system, nor all the other valves and connected components in the car, in most cases. No power electronics are required to operate the propulsion system. The electronics in a modern car, especially an EV, represent 50% of the cost of the car. If anything but the air tank fails, all the rest of the components can be repaired or replaced in isolation from the air tank, at nominal cost. A pressure regulator valve is a rather cheap and simple part to replace using hand tools. No special skills are required, beyond knowing how to use a torque wrench and how to check for leaks. Special test equipment is not required to "know" that the valve repair was successful. If it was not, then you can both see (using soapy water) and hear the air escaping, as well as monitor the pressure inside the main tank.
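The pressure check described above can be turned into a rough leak-rate number with the ideal gas law: at constant temperature, the air mass lost from a rigid tank is Δp·V/(R·T). A minimal Python sketch; the tank volume, pressures and test interval below are hypothetical values, not figures from the post.

```python
R_AIR = 287.05  # J/(kg*K), specific gas constant of dry air


def leak_rate_kg_per_hour(volume_m3: float, p_start_pa: float, p_end_pa: float,
                          hours: float, temp_k: float = 293.15) -> float:
    """Average air mass lost per hour from a rigid tank, inferred from its
    pressure drop over a test interval. Assumes ideal-gas behaviour and the
    same tank temperature at both pressure readings."""
    mass_lost_kg = (p_start_pa - p_end_pa) * volume_m3 / (R_AIR * temp_k)
    return mass_lost_kg / hours


# Hypothetical example: a 300 L tank drops from 300 bar to 299 bar over 24 hours
rate = leak_rate_kg_per_hour(0.300, 300e5, 299e5, 24)
print(f"Leak rate: {rate * 1000:.1f} g of air per hour")
```

Air departs noticeably from ideal-gas behaviour at a few hundred bar, and a tank that cools between readings loses pressure without leaking, so this is a first-order check rather than a certification method.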

As a mechanic, you don't have to worry about being instantly electrocuted by a battery subsystem capable of delivering hundreds of kilowatts to about a megawatt of power. Due to its complexity and materials costs, a very large battery pack is never going to be "cheap" in the sense of it being something you stock on the shelf as a spare part. It's so large and heavy that it's literally integrated into the frame of the vehicle to save weight. Thus, removal is never going to be fast and easy, in the same way that removing an engine is not fast or easy. On top of that, a variety of highly specialized and very expensive electronics diagnostics tools are required to test the entire battery pack and evaluate serviceability. A diagnostics check can be run very quickly in most cases, but that's the extent of how user-friendly maintenance and repair will ever be. It's certainly still doable, but never fast / cheap / easy.

If I roll into a compressed air car repair shop and tell the mechanic I hear a hissing sound from this spot near the tank, he's going to be able to rapidly figure out what's leaking, how badly it's leaking (using a pressure gauge), and how much time and materials it will cost to fix it. There could be other problems discovered after the initial repair, but all the parts except the tank, engine, and atmospheric heat exchange radiator are low-cost bits of mass manufactured metal that you can hold in your hand and replace with your hands using hand tools.

#403 2024-10-22 02:46:08

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Gail Tverberg's latest article is well worth reading.

https://ourfiniteworld.com/2024/10/14/o … -even-war/

In her opinion, Climate Change is spin designed to tackle the real issue without publicly acknowledging it: Energy Resource Depletion. The problem is that the politically popular solutions to climate change (wind and solar power) have thus far proven incapable of replacing the abundant low-cost energy provided by fossil fuels during the post-war growth period of the second half of the 20th century.

Growth in OECD countries has been anaemic since 1973. Until the first oil crisis, wages and living standards were growing rapidly, but growth stalled after 1973. Real, inflation-adjusted wages for the bottom 90% of US workers have not grown in the past 50 years. Wages for the top 10% have grown, but even this growth appears to have stalled. Why is this happening? Wealth is a product of surplus energy. Whilst overall energy production has risen, the Energy Return on Energy Invested (EROEI) of new energy projects is no longer as high as it was for the abundant onshore oil & gas resources that met world demand until the 1970s. The energy cost of accessing new energy has increased steadily since the early 1970s.
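The link between EROEI and surplus energy can be made concrete with the standard net-energy relation: if a project returns E_out for every E_in invested, the share of output left over as surplus is (E_out - E_in) / E_out = 1 - 1/EROEI. A minimal Python sketch; the EROEI values in the loop are illustrative assumptions, not figures from Tverberg's article.

```python
def net_energy_fraction(eroei: float) -> float:
    """Share of gross energy output that remains as surplus after paying
    the energy cost of obtaining it: 1 - 1/EROEI."""
    if eroei <= 0:
        raise ValueError("EROEI must be positive")
    return 1.0 - 1.0 / eroei


# Illustrative EROEI values only (hypothetical, not from the article)
for eroei in (50, 20, 10, 5, 3, 2):
    print(f"EROEI {eroei:>2}: {net_energy_fraction(eroei):.0%} of output is surplus")
```

The surplus barely changes between EROEI 50 and 10 (98% vs 90%) but falls steeply below that, which is the usual "net energy cliff" argument.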

Last edited by Calliban (2024-10-22 03:19:32)
#404 2024-10-23 11:09:07

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Maintaining our present quality of life requires an annual per capita energy expenditure of 80 million BTUs, which equates to 23,445,686Wh per person per year. This is a huge amount of energy to generate using other methods, be it coal, natural gas, liquid hydrocarbon fuels, or sunlight, wind, and water. Frankly, the other methods are hilariously inefficient in terms of input materials required, even when the "fuel" comes from the Sun. The sunlight and wind are "free". The materials required to convert that into electrical or thermal power are not "free", and all of the current methods used to create and process those materials into energy generating machines are incredibly environmentally destructive. Furthermore, none of those "other methods" are truly sustainable, because all of them are artifacts of burning hydrocarbon fuels. That means it's in everyone's best interest to find the least consumptive and most productive methods to generate the energy required to lead a "technologically advanced modern life".
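The BTU-to-Wh conversion behind the 23,445,686Wh figure, and what it means as a continuous power draw, can be checked in a few lines. A minimal Python sketch using the International Table BTU (about 0.29307107 Wh); dividing by 8,760 hours per year is an added step, not part of the post.

```python
WH_PER_BTU = 0.29307107   # 1 BTU (International Table) in watt-hours
HOURS_PER_YEAR = 8_760


def btu_to_wh(btu: float) -> float:
    """Convert British thermal units to watt-hours."""
    return btu * WH_PER_BTU


per_capita_wh = btu_to_wh(80e6)   # 80 million BTU per person per year
print(f"{per_capita_wh:,.0f} Wh per person per year")
print(f"{per_capita_wh / HOURS_PER_YEAR:,.0f} W continuous average per person")
```

Spread over a year, 80 million BTU works out to a continuous draw of roughly 2.7 kW per person.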

In terms of what we call "high level nuclear waste", the US alone has accumulated 90,000t / 90,000,000kg of "un-reprocessed spent fuel" over the past 75 years of using commercial nuclear power. The reason we don't reprocess fuel is to keep our Uranium miners in business, not because there's a shortage of Uranium or Thorium fuel. Even if we never mined another kilo of Uranium for the next few centuries, we have already accumulated sufficient fuel to supply ALL of humanity's energy needs. Actual energy consumption can be difficult to quantify, so below I'm also going to include the total US annual electrical energy consumption of 4,000TWh as a measuring stick. Americans consume about 1/5th of the world's energy inputs, which means extrapolating to the rest of the world, using America as a proxy, is very easy to do. Take whatever America consumes, multiply by 5, and that's a reasonably good proxy for global energy consumption of the electrical or thermal variety.

When we talk about "spent" nuclear fuel or "high level nuclear waste", what are we actually talking about?

Each kilogram of spent fuel or high level nuclear waste contains a mixture of U235, U238, U238 transmuted into Pu239 or various other fissionable or non-fissionable isotopes, and lighter elements created during fission. Inside a commercial electric power reactor, an incredible variety of lighter daughter products are created by fission, some of which can actually kill the fission process over time by absorbing neutrons thrown off by fissioning atoms, neutrons which could otherwise be used to split other fissionable atoms. These are true nuclear waste products that must be removed during fuel reprocessing. When the "spent" fuel comes out of the reactor, it consists of a bunch of cracked ceramic fuel pellets in metal cladding. This definition of "high level nuclear waste" actually means "otherwise usable Uranium and Plutonium fuel containing 97%+ of its original energy content". If the neutron poisons could be removed and the cracking didn't matter, then instead of 18 months, the fuel could stay in the reactor for about 900 months, and then it truly would be "spent" fuel. Reprocessing includes extracting the cracked fuel pellets from the rods, grinding them into fine powder, chemically or centrifugally stripping out the various daughter products and neutron poisons, re-sintering the remaining "good fuel" into new pellets, and sorting them back into fresh fuel rods based upon remaining energy content. Some fuel pellets require nothing more than stuffing into new rod cladding, because their reaction rate was so low due to their physical position inside the reactor, which affects reactivity rates. Until this process has been repeated about 50 times, there is nothing "truly spent" about a load of Uranium fuel extracted from a reactor after 18 months of operation. There is merely accumulated thermal / pressure / radiation damage, along with neutron "poisons" that kill the fission reaction, and fission daughter products, such as radioactive Cesium and Strontium, that truly are "nuclear waste". The swelling that ultimately cracks / destroys the fuel pellets stems from the release of Xenon, Krypton and other trapped fission gases, as well as volume expansion from the production of lighter elements during fission.

Approximately 2.5% of our "high level nuclear waste" is "true nuclear waste" (Strontium-90, Cesium-137, Krypton-85, etc.), which must be removed and stored over varying lengths of time:

90,000,000kg * 0.975 = 87,750,000kg

The length of time something must be stored because it's radioactive is widely misunderstood. The shorter the half-life, the greater the radioactivity. The longer the half-life, the less the radioactivity. Radioactive for thousands to millions of years means, "this substance is barely radioactive at all". In all probability, it can't hurt you so long as you don't eat it or inhale it. Isotopes that emit gamma rays or neutrons are the exception to that rule. If you don't make a habit of cuddling up to your spent fuel rods at night, the probability of this kind of waste hurting you ranges between nonexistent and exceptionally remote. Radioactive for 5 minutes to 5 years means, "this substance is insanely, lethally radioactive". You don't need to be exposed to it for decades before it might cause cancer in old age. Fatal radiation doses from very short-lived radioactive substances can be accumulated in minutes to hours. This best describes nuclear fuel rods the moment after we shut down the reactor. Within a few years, most of the really radioactive stuff has decayed into otherwise harmless elements, but once again, harmless to be in close physical proximity to, not to eat or inhale. If you eat non-radioactive Cesium metal that's never been in a nuclear reactor, it will still kill you. Heavy metals are still heavy metals, and being non-radioactive doesn't make them any less intrinsically toxic. In all probability, all such "insanely radioactive" material is so thermally "hot", in addition to being radioactive, that it's kept in a spent fuel pool so it can "cool off" (dump its residual radiation and thermal energy harmlessly). Notable exceptions include Cobalt-60 sources from nuclear medicine facilities and radioisotope thermoelectric generators, which have killed a literal handful of people through improper disposal. This is far more common in the developing world.
The last American killed by radiation from a nuclear reactor died in 1964 or 1965, IIRC. Nobody has died from commercial nuclear power since then, unless they fell off a ledge or ladder or balcony at the power plant, were electrocuted by the electric portion of the plant, or a steam pipe ruptured and cooked them.
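The inverse link between half-life and radioactivity follows from A = λN with λ = ln 2 / t½: for the same number of atoms, a shorter half-life means proportionally more decays per second. A minimal Python sketch comparing specific activity (decays per second per gram of the pure isotope); half-lives are standard textbook values and molar masses are rounded to the mass number, which is an approximation.

```python
import math

AVOGADRO = 6.02214076e23       # atoms per mole
SECONDS_PER_YEAR = 3.15576e7   # Julian year


def specific_activity_bq_per_g(half_life_years: float, molar_mass_g: float) -> float:
    """Decays per second per gram of a pure isotope: A = lambda * N,
    with lambda = ln 2 / t_half and N = N_A / M atoms per gram."""
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)
    atoms_per_gram = AVOGADRO / molar_mass_g
    return decay_constant * atoms_per_gram


# (half-life in years, approximate molar mass in g/mol)
isotopes = {
    "Co-60": (5.27, 60.0),
    "Cs-137": (30.17, 137.0),
    "Pu-239": (24_110, 239.0),
    "U-238": (4.468e9, 238.0),
}
for name, (t_half, mass) in isotopes.items():
    print(f"{name:>6}: {specific_activity_bq_per_g(t_half, mass):.2e} Bq/g")
```

Gram for gram, Cs-137 comes out roughly 250 million times more active than U-238. Specific activity says nothing about decay energy or radiation type (alpha vs gamma), which, as noted above, also matters.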

Whatever the potential of civil nuclear power to kill lots of people, such a scenario has never been actualized here in America. We can "what-if" all the possibilities to exhaustion, but at the end of the exercise, exhausted is all we'll end up "being". If a modicum of care and thought is devoted to handling of nuclear materials, they're no more intrinsically dangerous than Lead poisoning of the non-Judge-Roy-Bean variety. The moral of the story is: don't treat radioactive materials like toys, because they're not. Put them to work for you, the same way we put explosive gases and flammable liquids to work. Oil ended slavery. It's not profitable to use forced human labor when forced machine and materials labor is so much cheaper and benefits everyone (even when it doesn't benefit everyone equally, everywhere, at all times).

UO2, the most common ceramic fuel used in light and heavy water reactors, is 88.15% Uranium by weight:

87,750,000kg * 0.8815 = 77,351,625kg

Fissioning U235 releases 24,000,000,000Wh (24 gigawatt-hours) of thermal energy per kilogram of virgin U235. After 18 months, when "spent" fuel is taken out of the reactor, it has only generated 600,000,000Wh of thermal energy. The remaining unfissioned Uranium and Plutonium still contains about 23,400,000,000Wh of thermal energy. Pu239 provides a little less, at 23,000,000,000Wh/kg, but we're going to treat U235 and U238 transmuted into Pu239 as possessing equivalent energy content for evaluation purposes, because all those short-lived daughter products add to the total thermal energy output, and U235 mixed with U238 in a nuclear fuel rod ultimately transmutes fertile U238 into fissionable Pu239. U235 is the only naturally occurring fissile isotope, so it is the only natural fuel that can be used to "make" or "breed" more fuel. For example, if you drop a lump of coal into a gallon of diesel fuel, you don't magically "get" more diesel fuel merely because the coal contains a lot more Carbon than diesel fuel. In any event, whether we use 24GWh or 23GWh as our energy content figure, the math really doesn't change much with regard to our accumulated "spent" nuclear fuel stockpile, as the back-of-envelope calculation below shows.

77,351,625kg * 24,000,000,000Wh/kg = 1,856,439,000,000,000,000Wh

77,351,625kg * 23,000,000,000Wh/kg = 1,779,087,375,000,000,000Wh
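The chain of multiplications above (90,000t of spent fuel, keep 97.5%, take the Uranium mass fraction of UO2, multiply by energy per kilogram) can be reproduced in a few lines. A minimal Python sketch of the post's own back-of-envelope arithmetic, assuming, as the post does, that all remaining heavy metal is eventually fissioned via breeding; the atomic masses used to derive the 88.15% figure are standard values.

```python
SPENT_FUEL_KG = 90_000_000   # US un-reprocessed spent fuel (90,000t), per the post
REUSABLE_FRACTION = 0.975    # after removing ~2.5% fission products and poisons
U_MOLAR_MASS = 238.03        # g/mol, natural Uranium
O_MOLAR_MASS = 15.999        # g/mol, Oxygen

# Uranium share of UO2 by mass: 238.03 / (238.03 + 2 * 15.999), about 0.8815
U_FRACTION_UO2 = U_MOLAR_MASS / (U_MOLAR_MASS + 2 * O_MOLAR_MASS)


def stockpile_energy_wh(wh_per_kg: float) -> float:
    """Thermal energy in the stockpile if all remaining heavy metal is fissioned."""
    heavy_metal_kg = SPENT_FUEL_KG * REUSABLE_FRACTION * U_FRACTION_UO2
    return heavy_metal_kg * wh_per_kg


print(f"Uranium mass fraction of UO2: {U_FRACTION_UO2:.4f}")
for wh_per_kg in (24e9, 23e9):
    print(f"{wh_per_kg / 1e9:.0f} GWh/kg -> {stockpile_energy_wh(wh_per_kg):.4e} Wh")
```

Deriving the mass fraction from atomic masses instead of hard-coding 0.8815 shows where that figure comes from; the results agree with the two lines above to within a few parts per million.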

Whenever this nuclear fuel is "stored" inside a working nuclear reactor, rather than sitting outside on the ground, not only is it a very slim nuclear weapons proliferation risk, it's actually doing something rather useful- generating gobs and gobs of heat energy without combustion.

24,000,000,000Wh per kilogram of U235 / 39,750Wh per gallon of diesel fuel = 603,774 gallons of diesel fuel

A kilo of U235 has roughly the same thermal energy output as an Olympic swimming pool filled with diesel fuel.
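To check the swimming-pool comparison, the gallon figure can be converted to cubic metres. A minimal Python sketch; the 2,500 m³ pool volume (50 m × 25 m × 2 m minimum depth) is an assumption, since real Olympic pools vary in depth.

```python
WH_PER_KG_U235 = 24e9           # thermal energy per kg fissioned, per the post
WH_PER_GALLON_DIESEL = 39_750   # per the post
LITRES_PER_US_GALLON = 3.785411784
OLYMPIC_POOL_M3 = 2_500         # assumed nominal 50 m x 25 m x 2 m pool

gallons = WH_PER_KG_U235 / WH_PER_GALLON_DIESEL
cubic_metres = gallons * LITRES_PER_US_GALLON / 1000
pool_fraction = cubic_metres / OLYMPIC_POOL_M3
print(f"{gallons:,.0f} US gallons = {cubic_metres:,.0f} m^3 = {pool_fraction:.0%} of an Olympic pool")
```

On these numbers a kilogram of U235 corresponds to about nine-tenths of a nominal Olympic pool of diesel, so "about an Olympic pool" is a fair rounding.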

The actual volume of waste produced is so small that all the spent nuclear fuel in the entire world, after 75 years of commercial electric nuclear power, will fit inside a single football field. Is there really no patch of land in the entire world, the size of a single football field, which we cannot declare "off limits" to everyone?

If one football field per century is "too much", to power all of humanity, then how much CO2 are we willing to release and how many cubic miles of land are we willing to destroy to get at the metal underneath it?

Such a tiny volume of waste could be buried many miles underground, far below any aquifer, if we decided to do that and quit playing dumb games with the waste.

Onwards...

4,000,000,000,000,000Wh per year (4,000TWh) = US total annual electrical energy consumption (about 1/5th of the global total)

1,856,439,000,000,000,000Wh of total thermal energy / 4,000,000,000,000,000Wh per year = 464 years of thermal energy, or 232 years of mostly electrical output with waste heat recovery (combined heat and power). If we convert all of that heat into electricity, then we get 35% to 50% of that energy content as electricity, depending upon how we choose to generate it: steam will be 35%, supercritical CO2 will be 50%. Most plants get around half of the energy out through various means, so 232 years is a good approximation for how much accumulated nuclear fuel energy the US has sitting around uselessly at various "spent fuel" storage sites.

350,000,000 people * 23,445,686Wh per person = 8,205,990,100,000,000Wh for all 350 million people per year

1,856,439,000,000,000,000Wh of total thermal energy / 8,205,990,100,000,000Wh = 226 years (covers all energy consumption of all types, for the entire US population)
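The years-of-supply figures above are all the same division with a different demand or conversion efficiency. A minimal Python sketch using the post's numbers; the efficiency argument is an added parameter for the 35% (steam) and 50% (supercritical CO2) cases.

```python
STOCKPILE_WH = 1.856439e18                      # US spent fuel, 24 GWh/kg case
US_ELECTRICITY_WH_PER_YEAR = 4.0e15             # 4,000 TWh
US_TOTAL_ENERGY_WH_PER_YEAR = 350e6 * 23_445_686  # 350M people x 80 million BTU


def years_of_supply(energy_wh: float, annual_demand_wh: float,
                    efficiency: float = 1.0) -> float:
    """Years a fixed energy store lasts at constant annual demand, after
    applying a conversion efficiency (1.0 means no conversion loss)."""
    return energy_wh * efficiency / annual_demand_wh


print(f"Thermal vs 4,000 TWh/yr:      {years_of_supply(STOCKPILE_WH, US_ELECTRICITY_WH_PER_YEAR):.0f} years")
print(f"Electric at 35% (steam):      {years_of_supply(STOCKPILE_WH, US_ELECTRICITY_WH_PER_YEAR, 0.35):.0f} years")
print(f"Electric at 50% (sCO2):       {years_of_supply(STOCKPILE_WH, US_ELECTRICITY_WH_PER_YEAR, 0.50):.0f} years")
print(f"Thermal vs all US energy use: {years_of_supply(STOCKPILE_WH, US_TOTAL_ENERGY_WH_PER_YEAR):.0f} years")
```

At 35% conversion the same stockpile covers about 162 years of US electricity, so 232 years is the upper end of the 35% to 50% range.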

If nuclear fuel were our only option for reliable power, we would not be short of energy to maintain our present standard of living, but it's not even close to our only option at this point. We still have incredible natural gas and oil reserves as long as the person in charge of our government is not canceling drilling permits to commit treason by handing our money to terrorists to appease our pistachios. We still have entire mountains of coal, which should probably be upgraded to diesel and bunker fuels using thermal power input from a nuclear reactor. We have a functionally inexhaustible source of heat energy from the Sun. We have tidal action thanks to our Moon, which can be used with trompes to compress air. We have hydro power from all the dams, some of which may need to be rebuilt at this point. We have plenty of wind in specific spots.

To build out the wind and solar energy reserves, we need a LOT of energy input to obtain the enormous quantities of metals required. That energy has to come from somewhere. It can come from burning coal and natural gas, as it presently does, or it can come from electricity generated by nuclear fuel. At the present time, nobody makes photovoltaic panels using energy from previously manufactured photovoltaic panels. It's not impossible, but it would mean ALL of the energy they generate is consumed by the act of expanding photovoltaic panel manufacturing, which means that for quite some time they would deliver nothing to our electric grids.

To that total for existing Uranium sitting in spent fuel casks, America alone can add around 400,000t of Thorium-232 in known deposits or actual separated Th232 stored in the ground under our deserts at various undisclosed locations. Th232 converted into U233 generates 22,764,000,000Wh/kg. We can safely assume that we can economically recover half of that total as a ballpark estimate, so 200,000t buys us another 502 years of energy. Our Thorium reserves are estimated at 595,000t. If we truly could recover all of that, then 1,494 years. IAEA estimates 13,000,000t of Thorium are recoverable at a cost of $130/kg or less. That would provide for our energy needs for the next thousand years, at the very least. More Thorium is recovered every day during rare Earth metals mining. Th232 may be transmuted into fissionable U233 by sticking it inside a commercial power reactor. The daughter products from fissioning U233 are short-lived, relative to fissioning U235 and Pu239. It's a quirk of the nuclear decay chain for U233 vs U235 and Pu239. However, you still need some U235 or Pu239 to begin transmuting Th232. That seemingly "small change" has a dramatic effect on how long the waste remains radioactive. Almost all of the dangerous radiation is gone in far less than a human lifetime, about the same amount of time it takes for a child to become an adult. We can handle 10 to 20 year sequestration processes quite easily.

We can now passively recover Uranium from sea water, at costs ranging between $400 and $1,400/kg. There's about 4.5 billion tons available. That's plenty for everyone for a period of time longer than Homo sapiens have existed. I would also very much like to believe that we will coax fusion into working for our benefit in less than a thousand years. Henri Becquerel discovered radioactivity, using Uranium salts, in 1896. It was about 60 years before the first commercial power reactor appeared in the UK, in 1956. The first fusion reaction was discovered in 1933. The first non-weapon fusion reaction in a Z-pinch machine took place in 1951. ITER, our first operable fusion reactor of sufficient size / scale, is scheduled to come online in 2035 and to be fully operational by 2039. It won't be a power reactor, but it will pioneer all the basic concepts required to run commercial fusion reactors. If some upstart runs a fully functional fusion reactor before 2039, then kudos to them. 60 years from discovering radioactivity to a commercial power reactor is a break-neck pace of innovation. Creating sustainable fusion reactions is easily multiple orders of magnitude more difficult, so if we achieve that in about 100 years that is a blistering rate of technological advancement. Either way, we have plenty of energy and time. We're not going anywhere, unless we choose to self-destruct.

If all 7.5 billion of us consumed energy the way Americans do, and we burned only U233 bred from Th232:

7,500,000,000 * 23,445,686Wh = 175,842,645,000,000,000Wh per year (total primary energy consumption for 7.5 billion people living like Americans)

13,000,000,000kg * 22,764,000,000Wh/kg = 295,932,000,000,000,000,000Wh

295,932,000,000,000,000,000Wh / 175,842,645,000,000,000Wh = 1,683 years
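The same arithmetic generalises to any fuel stockpile: years = mass × energy per kilogram ÷ (population × per-capita demand). A minimal Python sketch using the post's figures; like the calculation above, it assumes complete burn-up of the Thorium via U233 and no conversion losses.

```python
WH_PER_PERSON_YEAR = 23_445_686    # 80 million BTU per person, per the post
WH_PER_KG_U233 = 22_764_000_000    # Th232 bred to U233 and fissioned, per the post


def years_of_fuel(fuel_kg: float, wh_per_kg: float, population: float) -> float:
    """Years a fuel stockpile lasts at constant per-capita demand, no conversion losses."""
    annual_demand_wh = population * WH_PER_PERSON_YEAR
    return fuel_kg * wh_per_kg / annual_demand_wh


thorium_kg = 13_000_000 * 1_000    # 13 million t recoverable at $130/kg or less
years = years_of_fuel(thorium_kg, WH_PER_KG_U233, population=7_500_000_000)
print(f"{years:,.0f} years")
```

Swapping in a population of 350 million gives the US-only figure for the same 13 million t, roughly 36,000 years, well beyond the "thousand years at the very least" quoted earlier.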

There are many more millions of tons of Thorium available, but the Thorium shown above is economically recoverable at today's natural Uranium prices. Thorium is about twice as abundant as Uranium.

Here's how long 4.5 billion tons of natural Uranium would last, if everyone lives like Americans do:

4,500,000,000,000kg * 24,000,000,000Wh/kg = 108,000,000,000,000,000,000,000Wh

108,000,000,000,000,000,000,000Wh / 175,842,645,000,000,000Wh = 614,185 years

You can extrapolate out how long 9 billion tons of Thorium would last.
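The extrapolation to 9 billion tons of Thorium, and the "divide by 2 or 3" allowance that follows, can be run alongside the sea water Uranium case. A minimal Python sketch; the 9 billion t Thorium figure is the post's "twice as abundant" extrapolation, not a measured resource.

```python
WH_PER_PERSON_YEAR = 23_445_686                      # 80 million BTU, per the post
ANNUAL_DEMAND_WH = 7_500_000_000 * WH_PER_PERSON_YEAR  # everyone living like Americans


def years_of_fuel(fuel_kg: float, wh_per_kg: float) -> float:
    """Years a stockpile lasts at the global demand above, no conversion losses."""
    return fuel_kg * wh_per_kg / ANNUAL_DEMAND_WH


uranium_years = years_of_fuel(4.5e12, 24e9)      # 4.5 billion t Uranium in sea water
thorium_years = years_of_fuel(9e12, 22.764e9)    # 9 billion t Thorium (post's 2x estimate)

print(f"Sea water Uranium: {uranium_years:,.0f} years")
print(f"Thorium:           {thorium_years:,.0f} years")
for factor in (2, 3):   # the post's suggested allowance for inefficiencies
    print(f"Divided by {factor}: {uranium_years / factor:,.0f} and {thorium_years / factor:,.0f} years")
```

The Thorium case comes out at roughly 1.17 million years, a little under double the Uranium figure because U233 yields slightly less energy per kilogram in the post's numbers.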

Divide by 2 or 3 if you wish to account for any inefficiencies, but there's enough energy there to provide heat, food, fuel, lighting, medicine, and housing to 7.5 billion people, all living the way Americans do, for an exceptionally long time. After we crack fusion, we have enough materials here on Earth to last until the Sun swells into a red giant, although I'd suggest we get serious about finding our next star long before that happens. Maybe we can create a warp drive large enough to move our entire planet. That's how much energy we can get from materials dug out of the ground or filtered out of sea water. Our green goofballs don't want humanity to have access to it, because they're pistachios.

We don't presently collect Uranium from sea water, but we've proven that we can do it, and have done it just to prove that we could. One method using plastic fibers has a cost of $80.70/kg to $86.25/kg. For nations that already have nuclear power, there's enough stored energy in terms of on-hand un-reprocessed fuel to cover the next century or two of operations, even if we didn't mine another kilo of Uranium from all the existing Uranium mines. That means we have fuel galore, but no political will to use it. I suspect that if we run short of energy, the political will to start using the fuel will be "discovered" quite rapidly. No explanation that passes muster has been given as to why we're burning so much coal, oil, and natural gas, when the energy available in Uranium and Thorium is so plentiful. If we reprocess our spent fuel, there is zero danger of ever running out of Uranium and Thorium over the next two million years.

Climate change is either a fraud / hoax of truly epic proportions, or else the same people advocating for the phase-out of hydrocarbon fuels are charlatans to their own cause, pushing fraudulent "non-solutions" that they should be intelligent enough to know cannot possibly function without hydrocarbon fuels, quantities of batteries we simply cannot make, or nuclear energy providing the backstop whenever natural energy sources run out. EVs make up 85%, maybe 90% by now, of new car sales in Norway, yet Norway only burns 10% less oil. Replacing 100% of the existing gasoline and diesel powered vehicles reduces hydrocarbon energy consumption by 15% at most. Meanwhile, if you have oil money from selling oil to developing nations, you can use it to make your own nation appear to be some clean green economy that it's not, never has been, and never will be. At the same time, you drive out all heavy industry, and make virtually none of what you use and consume.

The longer our pistachio-colored climate changing scientologists go without creating an exemplar national economy that runs purely on wind turbines, photovoltaics and batteries, the more self-evident their fraud becomes. The utter lack of progress shows they are not serious about confronting their boogeyman. All the "green energy" added has not even kept pace with the rate of energy consumption increase as our population peaks. If such an energy system cannot keep pace with increased demand, nor readily contract if less energy is required, then it's neither durable nor scalable. Our pistachios are only serious about using their fraud to continuously extract wealth and resources from otherwise productive economies, for as long as they can possibly perpetuate their green energy fraud.

#405 2024-11-16 16:10:58

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Refining Reality: The hidden problems of a world still dependant on oil.

https://www.artberman.com/blog/refinery-crisis/

The last refinery built in the US was finished in 1977! Yikes. That is older than I am. This sort of infrastructure is expensive to build and older plants are now retiring without being replaced.
#406 2024-11-17 06:34:10

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

1bbl of crude = 139.3kg

Carbon = 127.6kg (468kg CO2)

Hydrogen = 11.6kg

1kg CO2 (direct ocean capture) = 1.8kWh

1kg H2 (water electrolysis) = 50kWh

CO2 = 842.4kWh/bbl

H2 = 580kWh/bbl

Sabatier reaction = 4,637.3kWh/139.3kg

Input Insolation = 6.85kWh/m^2/day (in a Midwest desert)

Polished Aluminum Mirror (90% reflectivity) = 6.165kWh/m^2/day

6,059.7kWh (842.4 + 580 + 4,637.3) / 6.165kWh/m^2/day = 983m^2 of solar thermal collector area per bbl per day

20,246,575bbl/day * 983m^2/bbl/day = 19,902,383,225m^2 = 19,903km^2 (141km by 141km)

2mm x 1m x 1m = 34.5394lbs/m^2 of rolled / stamped steel (hot-dipped in Aluminum, then polished)

19,902,383,225m^2 * 34.5394lbs/m^2 = 687,416,375,162lbs / 311,807,194t

$880/t * 311,807,194t = $274,390,330,720

Let’s double that figure to $550B to account for the steel support structure for the solar thermal mirrors.

Crude currently sells for about $67/bbl.

$67/bbl * 7,390,000,000bbl/year = $495B

Let’s double the cost again to account for fabrication and installation labor. We’re sitting at around $1T.

Over 20 years, we’re spending around $50B/year to supply our hydrocarbon energy input requirements for the next century.
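The chain above can be reproduced in a short Python sketch that makes each unit conversion explicit. Every input comes from the post except the 90% mirror reflectivity, which is inferred from 6.165 / 6.85, and the standard lb-to-kg constant. The script does not carry the post's intermediate rounding (983m^2, $550B, $1T), so its totals differ slightly.

```python
LB_TO_KG = 0.45359237

# Energy to synthesise one barrel-equivalent (139.3 kg), figures from the post
CO2_KG_PER_BBL = 468          # 127.6 kg carbon x 44/12, rounded as in the post
H2_KG_PER_BBL = 11.6
KWH_PER_KG_CO2 = 1.8          # direct ocean capture
KWH_PER_KG_H2 = 50.0          # water electrolysis
SABATIER_KWH_PER_BBL = 4_637.3

# Collector, demand and steel figures, also from the post
MIRROR_KWH_PER_M2_DAY = 6.85 * 0.90   # insolation x inferred 90% reflectivity
BBL_PER_DAY = 20_246_575
STEEL_KG_PER_M2 = 34.5394 * LB_TO_KG  # 2 mm steel sheet
STEEL_USD_PER_TONNE = 880

kwh_per_bbl = (CO2_KG_PER_BBL * KWH_PER_KG_CO2
               + H2_KG_PER_BBL * KWH_PER_KG_H2
               + SABATIER_KWH_PER_BBL)
m2_per_bbl_day = kwh_per_bbl / MIRROR_KWH_PER_M2_DAY
area_m2 = m2_per_bbl_day * BBL_PER_DAY
side_km = area_m2 ** 0.5 / 1000
steel_t = area_m2 * STEEL_KG_PER_M2 / 1000
mirror_steel_usd = steel_t * STEEL_USD_PER_TONNE
total_usd = mirror_steel_usd * 2 * 2  # x2 support structure, x2 fabrication and labor

print(f"{kwh_per_bbl:,.1f} kWh/bbl, {m2_per_bbl_day:,.0f} m^2 per bbl/day")
print(f"{area_m2 / 1e6:,.0f} km^2 ({side_km:.0f} km square), {steel_t / 1e6:,.0f} Mt of steel")
print(f"${mirror_steel_usd / 1e9:,.0f}B mirror steel, ${total_usd / 1e12:.1f}T total, "
      f"${total_usd / 20 / 1e9:,.0f}B/year over 20 years")
```

Carrying the unrounded total (about $1.1T) through gives roughly $55B per year over 20 years, close to the post's $50B.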

3.11Bt of steel produces sufficient energy input for the global supply of petroleum base product (Methane). We produce about 1.85Bt of steel per year, so the materials input to supply the petroleum base product for at least the next human lifetime is equivalent to about 2 years of steel production. We'll have to add more equipment and energy to go from Methane to gasoline or diesel, but once the Methane is produced, the input energy required to go from Methane to gasoline is not nearly so high.
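The steel and cost steps can be sketched the same way, again using only the post's inputs plus the standard 2,204.62lb per metric tonne conversion. On these inputs the mirror sheet steel comes to about 0.31 billion tonnes, roughly two months of world steel output at 1.85Bt/year, and the doubled-then-doubled cost lands near $1.1T, or about $55B/year over 20 years. The 3.11Bt figure in the post is ten times the mirror-sheet tonnage, so it presumably folds in additional factors the post does not spell out; the sketch does not try to reconstruct them.

```python
# Mirror-field steel and cost estimate, reusing the post's inputs.
# All constants except LB_PER_TONNE are the post's assumptions.

TOTAL_MIRROR_M2 = 19_902_383_225   # collector area from the post
STEEL_LB_PER_M2 = 34.5394          # 2mm aluminized steel sheet, per the post
LB_PER_TONNE = 2204.62             # pounds per metric tonne
STEEL_USD_PER_TONNE = 880
STRUCTURE_FACTOR = 2.0             # "double that figure" for support structure
LABOR_FACTOR = 2.0                 # "double the cost again" for fabrication/labor
AMORTIZATION_YEARS = 20
CRUDE_USD_PER_BBL = 67
BBL_PER_YEAR = 7_390_000_000
WORLD_STEEL_T_PER_YEAR = 1.85e9


def mirror_steel_tonnes() -> float:
    """Sheet steel for the mirrors only (no support structure)."""
    return TOTAL_MIRROR_M2 * STEEL_LB_PER_M2 / LB_PER_TONNE


def mirror_steel_cost_usd() -> float:
    return mirror_steel_tonnes() * STEEL_USD_PER_TONNE


def installed_cost_usd() -> float:
    """Mirror steel cost doubled for structure, then doubled again for labor."""
    return mirror_steel_cost_usd() * STRUCTURE_FACTOR * LABOR_FACTOR


def annual_crude_spend_usd() -> float:
    return CRUDE_USD_PER_BBL * BBL_PER_YEAR


if __name__ == "__main__":
    steel = mirror_steel_tonnes()
    print(f"Mirror steel: {steel:,.0f} t "
          f"({steel / WORLD_STEEL_T_PER_YEAR:.2f} years of world output)")
    print(f"Mirror steel cost: ${mirror_steel_cost_usd() / 1e9:.1f}B")
    print(f"Installed cost: ${installed_cost_usd() / 1e12:.2f}T, "
          f"${installed_cost_usd() / AMORTIZATION_YEARS / 1e9:.0f}B/year over "
          f"{AMORTIZATION_YEARS} years")
    print(f"Annual crude spend: ${annual_crude_spend_usd() / 1e9:.0f}B")
```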

Almost anyone with a pocket calculator could quickly figure out that not running out of energy is dramatically more important than the paltry amount of money and steel required to ensure that never happens. It's nuts (to me) that no oil company has yet concluded that keeping the profits rolling in is the best reason of all to recycle CO2. If there are energy surpluses to be had, then we can capture extra CO2 and convert it to solid Carbon ("coal") using Gallium.

No tire company functions without Carbon Black. No coal-fired power plant functions without coal. No Carbon Fiber company functions without Carbon. None of our advanced manufacturing tech, or not-so-advanced tech, functions at all without on-demand energy. That is a fact, no matter how much we attempt to ignore reality.

An actual synthetic petroleum production facility is at least guaranteed to produce the goods. Another oil well may or may not, and it won't produce for very long since we've already consumed the easy-to-get oil.

It seems as if we need some long-term thinking here.


#407 2024-11-17 12:01:37

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Re: Why the Green Energy Transition Won’t Happen

Kbd512, you and I both know that synthetic hydrocarbons can be made and you have worked out a viable plan. But for whatever reason, this isn't being done at scale. At least not yet.

The refinery problem is something I don't entirely understand. But Art Berman suggests that refining is being squeezed between a high price of oil ($70/bbl) and the limits of what consumers can afford to pay for refined products. This is squeezing refiners' margins, making new refining capacity unprofitable to build. Electric vehicles make this problem worse by reducing the profitability of gasoline sales. The problem is that refineries produce a range of products, from tar for roads and waxes, all the way up through lube oil, bunker fuel, middle distillates (diesel), gasoline, naphtha, LPG and methane. If one of these products loses value (gasoline), it undermines the business case of the refinery and creates a disposal problem. But the other products are still needed, and EVs do nothing to reduce demand for lube oil, diesel or tar for roads. Refineries can marginally reduce production of one product and expand production of another, but only to a limited extent.

This suggests to me that any plan to reduce global oil consumption needs to simultaneously reduce consumption of all of the products from oil refining. Using less gasoline needs to be accompanied by reductions in consumption of every product coming out of the refinery. Otherwise, efforts to reduce consumption of one oil product (gasoline) create a disposal problem and are really a waste of time. If EVs reduce gasoline consumption on the roads, but we continue to need other oil products, then gasoline will end up being burned somewhere else.

Last edited by Calliban (2024-11-17 12:05:29)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."


#408 2024-11-19 09:29:39

- Terraformer

- Member

- From: The Fortunate Isles

- Registered: 2007-08-27

- Posts: 3,988

- Website

Re: Why the Green Energy Transition Won’t Happen

There are people working on this: Terraform Industries, solar powered methane synthesis.

Personally, I think there's more money in Dimethyl Ether, but we presently have infrastructure for using Methane. It's pricey for heating but usable for electricity generation.

Use what is abundant and build to last


#409 2024-11-19 11:16:31

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

Terraformer,

It's about time somebody started working on this. If we want to do solar and wind power in a way that makes an actual difference to global CO2 emissions, then we're looking at using solar thermal power, mechanical wind turbines, and a surrounding ecosystem of energy storage technologies that don't require quantities of specialty metals we can't realistically obtain.

Capturing and repurposing CO2 emissions into saleable products was always the correct way to address this issue. If recycling is not a dirty word when it comes to metals or plastics, then it shouldn't be looked down upon when it comes to CO2, either.

We have so little to show for the money spent thus far because we pursued academic, ideological solutions past the point where technology could keep pace with the rhetoric broadcast by mass media. Making everything electrical will stop being a technological dead-end once we can source and recycle the specialty metals required, but not a moment before. Thankfully, electrification is not required for reducing or capturing our CO2 emissions, and may be an impediment in some cases.

What I've proposed is still a "wind and solar" solution at the end of the day, so it would be nice if the people advocating for "wind and solar" took a hard-nosed look at what we can do using what we can actually make in the volumes required to put a measurable dent in the overall problem. We're going to progress a lot faster by pursuing scalable solutions with a low entry bar, technologically speaking.


#410 2024-11-20 16:42:44

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Re: Why the Green Energy Transition Won’t Happen

Solar thermal vs solar PV on the moon.

https://youtu.be/3oFi6S-4mp8?si=jvEhmCNcoHujZpzX

The comparison appears to neglect the enormous energy cost of semiconductor grade silicon. Compared to that cost, the energy cost of supporting frames is small.

Last edited by Calliban (2024-11-20 16:43:05)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."


#411 2024-11-20 18:14:17

- tahanson43206

- Moderator

- Registered: 2018-04-27

- Posts: 23,356

Re: Why the Green Energy Transition Won’t Happen

For Calliban re #410

There may be a shortage of energy collection equipment on the Moon.

However, that may be a temporary problem.

My understanding is that the flow of energy TO the surface of the moon is substantial, and reliable, and what is more, it is provided at no charge.

It seems to me that if humans learn how to make solar panels on the Moon from local materials, then there should be enough energy available to support an exponential growth process.

The last time I looked, humans were harnessing only a pathetically small fraction of Solar output.

(th)


#412 2024-11-20 18:40:05

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

Calliban,

I wrote a rather lengthy comment on that video illustrating a basic math breakdown of Supercritical CO2 gas turbine component masses based upon a real installation here in Texas, and about two days later either the channel owner or YouTube deleted my comment, even though I had praised the effort he put in and subscribed to his channel.

What I've found is that the people who believe in this electrification of everything silliness are so lost in their fantasy world that when ugly reality is presented to them, they ignore it and try to pretend that reality isn't real. Sadly, physics is not very charitable to ideological beliefs.

In the very first sentence of my comment, I illustrated how the mass of Copper wiring alone would exceed the mass of the entire solar thermal solution based upon sCO2, even if I was "off" by a factor of 2X.

I'm reproducing my comment left on his channel below so that people with basic math skills can see what reality actually looks like:

Photovoltaics require about 5,500kg of Copper per 1MWe of output, or 5,500,000kg per 1GWe. That applies especially to low efficiency thin film arrays, and especially when the copper wiring will be heated to 121C before we start trying to pump electricity through it. Most Copper wiring ampacity charts only go up to 90C.

Major component mass breakdown for a 50% thermally efficient solar thermal Recuperated Closed-loop Brayton Cycle (RCBC) Supercritical CO2 (sCO2) gas turbine power plant operating at 255bar and 715C:

Commercially available material:

52,872kg for 2,591,726m^2 of 15 micron / 20.4g/m^2 Aluminized mylar collector to generate 2GWth on the lunar surface

Estimates based upon the components of an actual working sCO2 gas turbine power plant already built by SWRI and operating in Texas:

6,250kg sCO2 gas turbine rotor, Haynes 282, at 160kW/kg, but 200kW/kg is closer to reality for 50MW to 300MW sCO2 turbines, so I went with a working system component mass from a sCO2 pilot plant that's already making power

25,000kg sCO2 gas turbine rotor and casing, Inconel 740H

31,250kg sCO2 re-compression turbine rotor and casing

368,098kg 316L diffusion bonded printed circuit heat exchanger, at 16.3MWth/m^3

Estimate based upon Wright Electric's liquid-cooled plain old Copper and Iron, non-superconducting aircraft electric motor:

62,500kg 1GW high speed electric motor-generator, at 16kW/kg

Estimates using NASA high temp space radiator tech info from NTRS:

990,040kg for 20mm ID Haynes 282, at 1.146kg/m, hoop stress is 22.491ksi at 255bar, ~3m of collector area required to generate 715C, I think (may actually be closer to 4m, I just guessed at this based upon a high temp solar thermal receiver tube design built for NREL, and zero flow or thermal-hydraulic analysis to determine if other factors will prevent this from working at all)

250,000kg for Carbon Fibers (not CFRP) vacuum brazed to Inconel 718 (NASA high-temp space radiator for nuclear power), at 600C

Mass Total: 1,786,010kg
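The receiver tubing line gives a hoop stress of 22.491ksi at 255bar in 20mm ID tube. A minimal Python check using the thin-wall formula sigma = P * r / t follows; it assumes (the comment doesn't say) that the figure was computed with the 10mm inner radius, and backs out the implied wall thickness of about 1.64mm. At that thickness r/t is only about 6, where the thin-wall formula starts to underestimate the peak stress at the bore, so a thick-wall (Lame) check would be prudent for any real design.

```python
# Thin-wall hoop stress for a pressurized tube: sigma = P * r / t.
# Assumption: the 22.491ksi figure used this formula with the inner radius.

PA_PER_BAR = 1e5
PA_PER_PSI = 6894.757


def hoop_stress_pa(pressure_pa: float, radius_m: float, wall_m: float) -> float:
    """Thin-wall hoop stress in the tube wall."""
    return pressure_pa * radius_m / wall_m


def wall_for_stress_m(pressure_pa: float, radius_m: float, stress_pa: float) -> float:
    """Wall thickness that produces the target hoop stress."""
    return pressure_pa * radius_m / stress_pa


if __name__ == "__main__":
    pressure = 255 * PA_PER_BAR        # 255bar working pressure
    radius = 0.020 / 2                 # 20mm ID tube
    target = 22.491e3 * PA_PER_PSI     # 22.491ksi from the comment
    wall = wall_for_stress_m(pressure, radius, target)
    print(f"Implied wall thickness: {wall * 1e3:.2f} mm (r/t = {radius / wall:.1f})")
```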

There will be additional masses associated with the mounts for the sCO2 turbine and generator, plumbing, valves, CO2 thermal power transfer fluid, a separate coolant loop to cool the generator, and thermal energy storage materials since most places on the moon have 336 hours of light followed by 336 hours of darkness. Storing 336GWh of electricity in Lithium-ion batteries will also be a mass-related show stopper for thin film photovoltaics, if the batteries are protected to NASA standards. I didn't bother to calculate how many kilos of CO2 are in the loop, either. I could be off by a factor of 2X and a 1GWe solar thermal power plant is still substantially lighter than the mass of Copper wiring required by a 12% efficient 1GWe thin film solar array. The same holds true on Earth. The ERoEI of photovoltaics and wind turbines vs solar thermal is not much of a comparison after plant longevity, all material inputs, and appropriate energy storage devices are considered.
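The tally and the 2X argument can be reproduced directly. This Python sketch sums the listed masses, doubles the total to stand in for the "off by a factor of 2X" margin, and compares it with 5,500kg of Copper per MWe; it also shows where the 336GWh storage figure comes from (1GWe held for 336 hours). Only the comment's own numbers are used, the dictionary labels are shorthand, and, like the comment, it leaves out mounts, plumbing, valves, the CO2 charge and thermal storage.

```python
# Mass tally for the 1GWe lunar sCO2 plant listed above (kg, the comment's
# estimates), compared with the comment's figure for PV copper wiring.

COMPONENTS_KG = {
    "aluminized mylar collector": 52_872,
    "turbine rotor, Haynes 282": 6_250,
    "turbine rotor and casing, Inconel 740H": 25_000,
    "re-compression turbine rotor and casing": 31_250,
    "316L printed circuit heat exchanger": 368_098,
    "1GW motor-generator": 62_500,
    "Haynes 282 receiver tubing": 990_040,
    "carbon fiber / Inconel 718 radiator": 250_000,
}

PLANT_MWE = 1_000
PV_COPPER_KG_PER_MWE = 5_500       # the comment's figure for PV wiring
ERROR_FACTOR = 2.0                 # "off by a factor of 2X"
DARK_HOURS = 336                   # lunar night as used in the comment


def thermal_total_kg() -> int:
    return sum(COMPONENTS_KG.values())


def pv_copper_kg() -> int:
    return PV_COPPER_KG_PER_MWE * PLANT_MWE


def night_storage_gwh() -> float:
    """Energy needed to hold full output through the lunar night."""
    return PLANT_MWE / 1_000 * DARK_HOURS


if __name__ == "__main__":
    total = thermal_total_kg()
    print(f"Solar thermal major components: {total:,} kg")
    print(f"Same, doubled for error: {total * ERROR_FACTOR:,.0f} kg")
    print(f"PV copper wiring alone: {pv_copper_kg():,} kg")
    print(f"Night-time storage at full output: {night_storage_gwh():.0f} GWh")
```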

I thought this video was a solid attempt at comparing solar thermal and thin film photovoltaics. NASA uses small Stirling engines for power due to their high reliability without maintenance. Nobody there, or at least nobody that I've ever spoken to, is seriously considering them for large power plants. The radiation environment that the thin film will be subjected to should also be considered. I doubt it lasts longer than 10 years. NREL's solar thermal demonstrator built in the 1970s has already operated for 50 years. Kudos to AnthroFuturism. I love the work he put in. Subscribed.

It's amazing how poorly received plain old energy reality tends to be, but that's because I don't allow ideological beliefs to "control" what I think about real tangible power generating technology.

Source documentation, which I'm more than willing to provide, comes from NREL, SWRI, NASA, and private industry. In my mass estimates, I was very conservative, and based them upon straight linear scale-up of the existing sCO2 pilot power plants which are now operational.

It later turned out, after more reading and after listening to SWRI's YouTube videos reporting test results, that I overestimated the mass of most sCO2 pilot power plant components, such as the recuperator. They're actually transferring 43MW/m^3 of thermal power through their prototype heat exchangers. That is a stupid amount of power crammed into such tiny components, relative to steam plants. One of the PhDs from SWRI said the power density of the sCO2 turbine is very similar to that of the high pressure LOX turbopump on the RS-25. What's more, they're designing all the "hot section" components for 100,000hr service lives or greater. Few parts of an RS-25 last for 100hrs, never mind 100,000hrs. That's why this tech took 20 years to develop. The tiny 16MWth / 10MWe turbine rotor was actually 200kW/kg, not the 160kW/kg I used in my estimate, which was apparently based upon smaller prototypes described in SWRI documentation. That means a scale-up to 50MW to 300MW will deliver greater power density. If they start making components from CMCs, gravimetric power density will increase by a further 3X to 4X with no increase in operating temperature. C/SiC ceramic composites are designed to live at 1,000C to 1,200C, rather than 715C. 600kW/kg is already a crazy power density figure, but sCO2 turbine rotors operating at 1,000C could go as high as 1MW/kg. That is just plain nuts. The reduction gearbox, electric generator, and its base support may very well become the single heaviest component of a 1GWe solar thermal power plant on the moon or Mars.
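To see what those power densities mean for a 1GWe plant, divide output by specific power. This Python sketch assumes, as the 6,250kg rotor figure in the original estimate does, that rotor mass scales linearly with power at a fixed kW/kg; real machines will not scale that cleanly, so treat the results as rough orders of magnitude.

```python
# Rotor mass for a 1GWe machine at the specific powers discussed above.

PLANT_KW = 1_000_000  # 1GWe

SPECIFIC_POWER_KW_PER_KG = {
    "original estimate": 160,
    "SWRI 10MWe rotor": 200,
    "CMC rotor, 3X": 600,
    "CMC rotor at ~1,000C (upper guess)": 1_000,
}


def rotor_mass_kg(plant_kw: float, kw_per_kg: float) -> float:
    """Mass implied by dividing output power by gravimetric power density."""
    return plant_kw / kw_per_kg


if __name__ == "__main__":
    for label, density in SPECIFIC_POWER_KW_PER_KG.items():
        print(f"{label:36s} {density:>5} kW/kg -> "
              f"{rotor_mass_kg(PLANT_KW, density):,.0f} kg")
```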

There are 4X 300MWe power plants being built at 4 different sites here in America by Occidental Petroleum and 8 Rivers Capital (2 are natural gas and 2 are coal-fired and recapture their own CO2 emissions for piping to the fracking industry), with multiple smaller units under construction elsewhere in the US, UK, EU, Russia, India, and China. I think there's one being built in Brazil as well. I didn't check what South Korea and Japan have in the works. The 50MW units are for natural gas pipeline compression and the 300MW units are for commercial electric power.

So far as I'm aware, there is no serious attempt to use Stirling engines for commercial power. We're moving to Recompression Closed-loop Brayton Cycle (natural gas or oil) and Allam-Fetvedt Cycle (coal) power plants, because all of it fits inside of the area occupied by a normal American-sized house.


#413 2024-11-20 18:45:35

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

tahanson43206,

Why are photovoltaics and wind turbines not being used to build more photovoltaics and wind turbines here on Earth?

Hazard a guess as to why you think that is.


#414 2024-11-20 19:14:36

- tahanson43206

- Moderator

- Registered: 2018-04-27

- Posts: 23,356

Re: Why the Green Energy Transition Won’t Happen

For kbd512 re #413

The question you have posed appears to be offered in a serious manner, and it deserves a serious response.

My estimate of the time required to fully understand the human behavior of thousands if not millions of people would be on the order of years, depending upon the number of researchers available, and the quality of the leadership.

What I can verify from my vantage point in 2024, is that writers have been thinking about this problem since at least 1985 and probably much earlier.

Humans ** have ** achieved self-replicating systems that depended upon renewable energy in the past. Those systems were called "farms". They existed before the modern industrial age. I doubt there are any left on Earth today. The Siren Call of oil-from-the-ground has obliterated the skills and knowledge that allowed self-replicating systems like that to not only survive but succeed.

This topic is fresh in mind, because a relative pointed me toward a history of the American revolution, and I opened the book to a passage that talked about how the revolution was funded.

I am confident that eventually ** very ** bright people will put together a chain of hardware that harnesses solar energy to replicate the machinery of which it is made.

We can get a hint at what is possible by looking at a computer chip factory. If we have income from sale of chips, and we use those funds to make more chips, and we are able to sell ** those ** chips, then we would have an example of a self-replicating system.

My knowledge of the chip manufacturing industry is insufficient to know if we have already seen that happen on Earth, but it is certainly possible.

The challenge of trying to fund a factory to make semiconductor products using nothing but solar energy as input is quite likely beyond human capability at present. However, only 200 years ago, humans were able to figure out how to create self-replicating systems using nothing but solar energy as input. The accomplishment was not recognized at the time for the achievement it was, because it was so commonplace.

I am confident we (humans) will achieve at a comparable level at some point in the future. The Moon is an excellent place to look for developments along those lines, because there are (apparently) no 5 million year old deposits of free energy. Instead, the Sun bakes the Lunar surface each and every Lunar day, and at this point, humans are capturing only a teeny tiny sliver with the probes scattered around the body.

Thanks again for a terrific question!

(th)


#415 2024-11-21 10:23:45

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

tahanson43206,

You wrote: 'However, only 200 years ago, humans were able to figure out how to create self-replicating systems using nothing but solar energy as input. The accomplishment was not recognized at the time for the achievement it was, because it was so commonplace.'

Have you learned anything yet about why we stopped pursuing subsistence farming to industrialize?

You wrote: 'I am confident we (humans) will achieve at a comparable level at some point in the future.'

The photovoltaic effect was first discovered in 1839. Internal combustion engines were invented in 1853. Photovoltaics were not like-kind substitutes for combustion engines by 1939, and they still won't be by 2039. If photovoltaics become 100% efficient by 2039, now only 15 years away, they still won't replace thermal engines, because they can't operate the way thermal energy systems operate. They are not on-demand devices. Photovoltaics function only when input power is available from the Sun.

Both thermal and electrical systems are ultimately photonics-based, but storing thermal spectrum photons in matter, with the resultant artifact effect we call "heat", is dramatically simpler and easier than storing electrons in matter. A thermal system relies upon an intrinsic property of all matter excited to an internal energy state above absolute zero. An electrical or electronic system uses an artifact of an intrinsic property of certain kinds of highly ordered matter which doesn't, practically speaking, exist in nature. All matter vibrates when photonic energy input strikes and then flows through it, and thus matter "stores" thermal energy. We don't have to do anything at all to any piece of matter to make that happen. Conversely, only highly refined metals conduct or store electricity at levels usable by human-created machine technology like a car or aircraft or robot. There are "hot rocks" and "cold rocks" in nature by virtue of their proximity to a star or other energy source launching photons at them. There are no analogous "electric rocks" to be had.

How much power photo-electric devices produce, at whatever efficiency level, is entirely a function of how far away they are from their external power source. Here on Earth, we're about 93 million miles from that power source at all times. Sunlight arriving here converts to thermal energy at all times, but to very little ambient electrical energy, which means we require energy converters to consume the photonic power as electrical power. If Earth were 83 million miles closer to the Sun, then the arguments for using electrical systems, such as photovoltaics, would become far more compelling because the areal power density would be drastically higher. Unfortunately, life as we know it would also be extinguished by that same energy source in such close proximity. That's the gist of the problem with using electrical devices powered by converted photonic energy. That is a very real form of inefficiency which dictates how much input energy / material / labor must be sunk into energy generating and storage systems, which always detracts from "all other useful purposes requiring input energy / materials / labor".

This electrification fantasy has thus become an unsolvable math problem, which shall remain unsolvable until sufficient quantities of metals and energy can be devoted to our energy systems, or until some of us become far more pragmatic about how we use solar and wind energy. An incredible surplus of energy is a hard requirement to create and use any kind of semi-conductor device, because it implies that energy is so abundant that all basic human needs have been met, and what we do with the surplus is a matter of personal preference.

That is why all photovoltaics and wind turbines produced thus far have been a net drain on our energy supplies and overall economy. They've replaced nothing, because they can't. Ambient energy systems have always been a marginal power source without the ability to store energy. Every living thing uses or directly converts solar energy into stored chemical energy, and then barely survives off of that energy.

Meanwhile, internal combustion engines went from machines weighing hundreds or even thousands of pounds, only capable of generating a literal handful of horsepower, to sCO2 gas turbines with gravimetric and volumetric power densities most closely comparable to rocket engines, which are themselves the most powerful form of internal combustion engine, and hold absolute records for gravimetric and volumetric power density.

I'm reasonably confident we won't attain such achievements until we address our immediate energy shortfalls created by pursuing solutions which will never perform the way you want them to, because the fundamental underlying problem relates to the poor quality of the energy input, which we have no real control over.


#416 2024-11-21 13:09:44

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

tahanson43206,

TSI at Mercury ranges between 6,272W/m^2 and 14,448W/m^2. Mercury is 33.6 million miles from the Sun. It's a barren, lifeless planet stripped of its atmosphere by the Sun. At 6kW/m^2, there's enough areal power density for propelling a motor vehicle or even a flying vehicle if a planet with an atmosphere existed in such close proximity to the Sun.

If someone came to me and argued that we needed to start burning coal after we already developed and implemented at-scale, photovoltaics providing 1.5kW/m^2, I would tell them to find something else more productive to do with their time, or that they could have the energy to play with their lumps of coal AFTER we had satisfied all basic human needs to a very high degree.

Our place in the universe and our technology, never mind the way in which most energy transfer takes place in the universe, didn't shake out that way. We ended up 93 million miles from our star, not 33.6 million miles, so the power per unit area here on Earth is 10X less than what Mercury receives. Any farther from the Sun than Earth is, and photovoltaic power becomes a very marginal power source, to the point of near-uselessness for human civilization by the time we arrive at the main asteroid belt.
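The Mercury figures follow from the inverse-square law. This Python sketch assumes a solar constant of 1361W/m^2 at 1 AU and standard orbital distances for Mercury (0.3075 AU at perihelion, 0.3871 AU mean, 0.4667 AU at aphelion); it reproduces the 6,272 to 14,448W/m^2 range quoted above to within half a percent (that range matches the older 1366W/m^2 value). It also shows the 10X ratio holds at perihelion, while at Mercury's mean distance (roughly 36 million miles) the ratio is closer to 6.7X, and at 33.6 million miles it is about 7.7X.

```python
# Inverse-square scaling of sunlight with distance from the Sun.
# Assumptions: 1361 W/m^2 at 1 AU; Mercury distances in AU as listed.

SOLAR_CONSTANT_W_M2 = 1361.0
MILES_PER_AU = 92_955_807

MERCURY_AU = {"perihelion": 0.3075, "mean": 0.3871, "aphelion": 0.4667}


def irradiance_w_m2(distance_au: float) -> float:
    """Irradiance above any atmosphere at a given distance from the Sun."""
    return SOLAR_CONSTANT_W_M2 / distance_au ** 2


def miles_to_au(miles: float) -> float:
    return miles / MILES_PER_AU


if __name__ == "__main__":
    for label, au in MERCURY_AU.items():
        flux = irradiance_w_m2(au)
        print(f"Mercury {label:10s} {flux:8,.0f} W/m^2 "
              f"({flux / SOLAR_CONSTANT_W_M2:.1f}X Earth)")
    ratio = irradiance_w_m2(miles_to_au(33.6e6)) / SOLAR_CONSTANT_W_M2
    print(f"At 33.6 million miles: {ratio:.1f}X Earth")
```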

The moon makes it somewhat easier without the atmosphere, but the areal energy density remains very low, which is why the Sun doesn't immediately BBQ you when you step outside in a fabric space suit.

In practical terms, there are 1kW/kg photovoltaics, possibly up to 6kW/kg for certain kinds of thin films. Thermal engines are now 200kW/kg, and they could go to 1,000kW/kg if made from CMCs and scaled-up to GW output levels. The issue is that a mylar reflector is made from the same base material as thin film photovoltaics, but can concentrate and convert (thermalize) the photonic energy input with an efficiency of around 90%. Thin films top out at between 15% and 20%.

The thin film photovoltaics are already at 60 grams per square meter and thinner than a human hair, so very delicate. I don't think they can get much thinner and lighter than that in a practical way. The problem is not the weight of the panel, though, it's the total area required for a given application, combined with the much greater mass of metal conductor wiring required to not act like a resistive heating element, which will exceed the total mass of a solar thermal engine by at least 2X, for the mass of the Copper conductor wiring alone.
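For scale, the film itself is the light part. This Python sketch assumes 1361W/m^2 of lunar sunlight at normal incidence, the 12% efficiency used earlier in the thread, and 60g/m^2 film, and it ignores wiring, power conditioning, pointing losses and night-time storage. It comes out around 370 tonnes of film over roughly 6km^2, the same ballpark as the 388,759kg film figure given later in the thread, which is why the conductor mass, not the film, dominates.

```python
# Area and film mass for a 1GWe thin-film array (sunlit output only).
# Assumptions: 1361W/m^2 at normal incidence, 12% efficiency, 60g/m^2 film.

PLANT_W = 1e9
IRRADIANCE_W_M2 = 1361.0
EFFICIENCY = 0.12
FILM_KG_PER_M2 = 0.060


def array_area_m2(plant_w: float, efficiency: float, irradiance_w_m2: float) -> float:
    """Collector area needed to produce plant_w while in full sunlight."""
    return plant_w / (efficiency * irradiance_w_m2)


if __name__ == "__main__":
    area = array_area_m2(PLANT_W, EFFICIENCY, IRRADIANCE_W_M2)
    print(f"Array area: {area / 1e6:.2f} km^2")
    print(f"Film mass: {area * FILM_KG_PER_M2:,.0f} kg")
```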

We can and should apply CNT wiring tech, which reduces our wiring mass by about 80%. That's still not enough mass reduction to make it equal to a thermal solution. The issue is that if we apply CMC tech to solar thermal engines, we still end up with a much lighter solution that is much longer lasting.

That means we're probably never going to see photo-electrical solutions which become lighter and less energy-intensive than photo-thermal solutions, and this is for an electrical power end use application. For direct thermal power input applications, it's virtually impossible to compete with direct photo-thermal power, because that process is 90% to 95% efficient using very common materials (Mylar plastic and Aluminum). Photo-electrical solutions are thus never likely to become cost-competitive with photo-thermal solutions that last 5X to 7X longer, are recyclable, and consume lower tonnages of more abundant and easier-to-recycle materials.

As we switch from electronics computer tech to photonics computer tech, reducing energy consumption by 1,000,000X, the only remaining application for photo-electric tech is powering low-powered commercial satellites using legacy semi-conductor technology. Anything that needs greater gravimetric and volumetric power density is going to use photo-thermal solutions, because it costs less and lasts longer.

Remember that old "buggy whip" analogy you made?

It's rapidly becoming applicable to photovoltaics and electro-chemical batteries.

Buggy whips, as you pointed out, fell out of fashion after we quit riding horses.

Now that we have photo-thermal solutions requiring less mass / surface area / volume than photo-electrical solutions, I fail to grasp the point behind development of another buggy whip. They won't be used to power photonics-based next generation computing devices and they don't provide the power density of photo-thermal, so why are we spending so much money on these inefficient buggy whips we call photovoltaics?


#417 2024-11-21 14:15:20

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Re: Why the Green Energy Transition Won’t Happen

One thing that the video misses is that a solar thermal powerplant can be made mostly from iron. Reflectors need only a thin coating of aluminium, well under a micron thick. But the reflector shell, receiver, pipework, heat exchangers and most of the power cycle machinery can be made from iron. Iron is by far the easiest metal to make on the moon. It is also the cheapest in terms of energy.

PV requires a great deal of other materials. Making polysilicon on the moon is impractical. The scale of plant needed to make semiconductor grade silicon is immense and mass production on Earth took many years to establish. Using PV would mean shipping polysilicon from Earth. The mass burden would then favour thin film PV. Unfortunately, that would push down efficiency and operating life. That increases the amount of other materials needed per unit power from PV.

The video author is correct in saying that solar thermal plants will need large radiators. However, radiators containing liquid sodium will not need to be massive, as the vapour pressure of sodium is low even at red-hot temperatures. An S-CO2 turbine is compact enough to be imported. A 1MW turbine is about the size of a tube of Pringles. Even a 1000MW unit could easily be accommodated within a Starship payload. The amount of torsion acting on the shaft of a turbine that compact must be impressive. Printed circuit heat exchangers can be 3D printed on the moon. Or we can build shell and tube heat exchangers. Both will work. The moon is a very dry environment. So dry, in fact, that we might even use sulphur dioxide as a working fluid. This may offer even better power density than CO2.

Kbd512 wrote: 'The thin film photovoltaics are already at 60 grams per square meter and thinner than a human hair, so very delicate.'

I find it impressive that we can make films that thin. But even on the airless moon, they would be vulnerable. Rocket engines from spacecraft landing and taking off will propel dust particles many miles on the moon. We would need to build thin film PV a long way from any landing sites.

Last edited by Calliban (2024-11-21 14:31:35)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."


#418 2024-11-22 10:32:33

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,342

Re: Why the Green Energy Transition Won’t Happen

Calliban,

Yes, the reflectors could be made from Iron, but on an airless body such as the moon, both Aluminized Mylar and thin film photovoltaics (special semi-conductor inks layered onto a Mylar substrate) can be economically imported from Earth because the total tonnage per GWe is so low.

However, Aluminized Mylar is dramatically cheaper than thin film photovoltaics per square meter, it's not subject to serious damage from ionizing radiation punching holes through / fusing / short-circuiting the photo-electric semi-conductor "inks" / circuitry together, and it requires no specialty materials to produce. Mylars and Aluminized Mylars are standard mass-produced commercial products created from natural gas. Gallium, Indium, Selenium, and Tellurium are definitely NOT standard commercial products. They're very low volume / high-demand specialty materials so precious that all thin film photovoltaics are recycled here in America. We don't throw those away, ever, because we cannot "simply make more" by mining more virgin metal. There's not enough to go around. We can make Aluminum and plastics in almost endless quantities by way of comparison, even though there's a real energy cost associated with doing that as well.

The radiator does not need to be made from a large quantity of metal at all. In point of fact, high temperature NASA-designed radiators are largely strands of pure Carbon Fibers (not a CFRP composite), because pure Carbon Fiber is an excellent thermal conductor in a single plane, which is precisely what a radiator is. Once again, pure Carbon Fiber, and CNT for that matter, is a product of natural gas.

- 388,759kg thin film photovoltaics (60g/m^2)

- 1,100,000kg CNT wiring

1,488,759kg total mass for the thin film photovoltaic option (no mass is allocated to the power conditioning electronics needed to buffer the plant output and prevent damage to downstream electrical power consumers)
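As a quick arithmetic check, the sketch below sums the two photovoltaic line items and back-calculates the panel area implied by the stated 60g/m^2 areal density. The area is derived here, not stated in the post, and power conditioning electronics are left out, as in the original budget.

```python
# Mass budget for the thin film photovoltaic option, using the post's figures.
# The panel area is back-calculated here; it is not a number given in the post.
PV_AREAL_DENSITY_KG_PER_M2 = 0.060  # 60 g/m^2

pv_components_kg = {
    "thin film photovoltaics": 388_759,
    "CNT wiring": 1_100_000,
}

pv_total_kg = sum(pv_components_kg.values())
implied_panel_area_m2 = pv_components_kg["thin film photovoltaics"] / PV_AREAL_DENSITY_KG_PER_M2

print(f"PV total: {pv_total_kg:,} kg")
print(f"Implied panel area: {implied_panel_area_m2 / 1e6:.2f} km^2")
```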

If we're going to invoke advanced materials tech (CNT wiring) for thin film photovoltaics, then this is what the mass breakdown will look like when using similarly advanced materials tech for the solar thermal:

- 52,872kg Aluminized-Mylar collector area (20.4g/m^2)

- 20,833kg sCO2 power turbine and recompression turbine, made from sintered Sylramic fiber reinforced SiC

- 46,512kg printed circuit heat exchanger made from sintered Sylramic fiber reinforced SiC ceramic composite, sized at 43MWth/m^3 at 715°C, which is the actual power density of the prototype device built by SwRI (I thought it was only 16MWth/m^3, but that was only what they started with)

- 62,500kg 1GW high speed electric motor-generator using Wright Electric's liquid-cooled motor-generator technology for aircraft

- 330,013kg Sylramic fiber reinforced SiC ceramic composite receiver tubing for thermalizing input power

- 250,000kg Carbon Fiber and Inconel 718 high-temperature radiator array

762,730kg total solar thermal system mass for all major components except the CO2 charge and storage tanks (something which absolutely doesn't need to be imported to a base on Mars)
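The sketch below totals the solar thermal line items and compares them with the 1,488,759kg photovoltaic total. The 1 GWe rating used for the specific power figures is an assumption based on the "per GWe" framing and the 1GW motor-generator; the CO2 charge and storage tanks are excluded, exactly as in the list.

```python
# Solar thermal mass budget, using the line items from the post.
thermal_components_kg = {
    "Aluminized-Mylar collector": 52_872,
    "sCO2 power and recompression turbines": 20_833,
    "printed circuit heat exchanger": 46_512,
    "1 GW motor-generator": 62_500,
    "receiver tubing": 330_013,
    "radiator array": 250_000,
}

PV_TOTAL_KG = 1_488_759          # thin film PV total from the post
RATED_OUTPUT_W = 1e9             # ASSUMPTION: both options sized for 1 GWe
COLLECTOR_AREAL_DENSITY = 0.0204 # 20.4 g/m^2, in kg/m^2

thermal_total_kg = sum(thermal_components_kg.values())
collector_area_m2 = thermal_components_kg["Aluminized-Mylar collector"] / COLLECTOR_AREAL_DENSITY

print(f"Solar thermal total: {thermal_total_kg:,} kg")
print(f"PV / solar thermal mass ratio: {PV_TOTAL_KG / thermal_total_kg:.2f}")
print(f"Specific power, solar thermal: {RATED_OUTPUT_W / thermal_total_kg:,.0f} W/kg")
print(f"Specific power, PV: {RATED_OUTPUT_W / PV_TOTAL_KG:,.0f} W/kg")
print(f"Implied collector area: {collector_area_m2 / 1e6:.2f} km^2")
```

On these figures the photovoltaic option carries roughly twice the mass of the solar thermal option per GWe.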

Energy production and storage don't work like microchips do. You cannot wait 2 years and expect to get a photovoltaic panel twice as efficient or powerful as one made 2 years prior. Photovoltaics and wind turbines will either get cheaper or more expensive on the basis of input energy / labor / capital costs, and panel output may increase by a fraction of a percentage point if/when a slightly more refined manufacturing process is used. That's what you should expect from existing polysilicon or thin film photovoltaics, because that's what you will actually get.
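To make the contrast concrete, here is a toy comparison of microchip-style doubling every 2 years against a panel gaining a fixed fraction of a percentage point per year. The 22% starting efficiency and 0.3 point/year gain are hypothetical values chosen for illustration, not figures from the post.

```python
# HYPOTHETICAL comparison over one decade.
YEARS = 10

# Microchip-style improvement: performance doubles every 2 years.
chip_multiplier = 2 ** (YEARS / 2)

# Photovoltaic panel gaining a fixed fraction of a percentage point per year.
START_EFFICIENCY = 0.22  # hypothetical starting efficiency (22%)
GAIN_PER_YEAR = 0.003    # hypothetical +0.3 percentage points per year
end_efficiency = START_EFFICIENCY + GAIN_PER_YEAR * YEARS

# Output per unit area scales with efficiency, so this is the output multiplier.
pv_multiplier = end_efficiency / START_EFFICIENCY

print(f"Chip-style multiplier after {YEARS} years: {chip_multiplier:.0f}x")
print(f"Panel output multiplier after {YEARS} years: {pv_multiplier:.2f}x")
```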

We still see routine major improvements made to gas turbine and piston combustion engine technology, because there's such a huge trade space to work within to obtain those improvements, and for decades the computing tech required to simulate engine operation with high fidelity simply did not exist. It's not economic to manufacture thousands or even millions of prototype engine components trying to improve the fundamentals of their operation while incorporating them into a complete engine design, so progress on efficiency was very slow for many decades.

Someone ran a high-fidelity CFD simulation over 20 years ago, when it first became practical to do so, and discovered that the combustion process itself could be 30% more efficient merely by adding a "special pattern" of indentations to the top of a piston crown, greatly resembling a "golf ball pattern". Unfortunately, that windfall tech advancement was found to be highly specific to each type of engine. There was no universal "pattern of dents" that would produce the same combustion efficiency improvement for all engines. Merely changing the crankshaft throw would make the same pattern far less effective. The tech was originally created to reduce emissions by such a degree that no catalytic converter was required to pass emissions tests. Knock-on effects included an extreme improvement to engine oil service life intervals. Though originally created for gasoline engines, the piston shaping tech from Speed-of-Air Pistons was then applied to Caterpillar diesel engines. Now a wide variety of gasoline and diesel engines feature Speed-of-Air's special piston shaping tech. Speaking of engine oil, Valvoline recently created an engine oil additive which does appear to effectively remove all Carbon deposits and sludge from engine components like pistons and valves over time, making parts that usually look black after enough use come out of the engine almost completely clean.

The only way to secure dramatic improvements to photovoltaics tech is to radically alter the materials used, essentially to "discover" some new meta-material that has greatly improved efficiency, or to increase the junction count, and therefore the cost, as the device becomes more like a microchip and less like a simple photodiode. Diodes can be made in all kinds of ways, and work should continue, but what limits efficiency is the bandgap of the materials in question and the electrical conductivity of the materials that transport the electricity off the panel.
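The bandgap limit can be illustrated with the "ultimate efficiency" from Shockley and Queisser's 1961 detailed-balance analysis: model the Sun as a 6000 K blackbody, assume every photon above the bandgap delivers exactly the bandgap energy, and assume every photon below it is lost. This is a simplified upper bound, not a device model; once recombination losses are included, the single-junction limit under the terrestrial spectrum drops to roughly 33%. Stacking junctions is what lets cells get past this single-bandgap ceiling, at the cost described above.

```python
import math

def photon_tail_integral(a: float, terms: int = 200) -> float:
    """Integral of x^2 / (e^x - 1) from a to infinity.

    Uses 1/(e^x - 1) = sum_k e^(-k x), which gives the series
    sum_k e^(-k a) * (a^2/k + 2a/k^2 + 2/k^3).
    """
    return sum(
        math.exp(-k * a) * (a * a / k + 2 * a / k**2 + 2 / k**3)
        for k in range(1, terms + 1)
    )

def ultimate_efficiency(xg: float) -> float:
    """Shockley-Queisser ultimate efficiency for a blackbody sun.

    xg = Eg / (k * T_sun). Numerator: photon flux above the gap times Eg.
    Denominator: total blackbody power, integral of x^3/(e^x - 1) = pi^4/15.
    """
    return xg * photon_tail_integral(xg) / (math.pi ** 4 / 15)

K_B_EV = 8.617e-5  # Boltzmann constant, eV/K
T_SUN = 6000.0     # blackbody sun temperature used by Shockley and Queisser

# Scan reduced bandgaps from 0.50 to 5.00 in steps of 0.01.
best_xg = max((x / 100 for x in range(50, 501)), key=ultimate_efficiency)
best_eta = ultimate_efficiency(best_xg)
print(f"Peak ultimate efficiency {best_eta:.1%} at Eg = {best_xg * K_B_EV * T_SUN:.2f} eV")
```

The peak lands near 44% at a bandgap of about 1.1 eV, which happens to sit close to Silicon's bandgap.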

Silver is already the most electrically conductive metal in the periodic table. Graphene is the only feasible improvement to be had, on the basis of weight / cost / mass manufacturing of abundant Carbon allotropes (Carbon is far more abundant than Silver). Find a cheap way to make Graphene, then find a way to lay down a perfect Graphene layer on the substrate with no discontinuities, and you've just built a better photovoltaic cell, of whatever variety, but you still need macro (greater than 1 atom thick) metal structures to funnel the power into a conductor wire. Beyond that, you can use better bandgap materials, add more of them, and/or use cheaper materials if you find an abundant material that does the job as well as existing more exotic materials, but that's about it. You get no major improvements from other methods. There is no magic to be had here. It's all hard chemistry and evaluation of processing methods.

That's why photovoltaics appear to be "stuck", technologically speaking, with nothing but a constant stream of meaningless BS to baffle the ignorant into thinking some wild new photovoltaics tech is just around the corner. It's not. Bell Labs refined the basic concept to the point of highly repeatable results during the 1950s, converting photovoltaics from cool science experiments into practical devices. Electronics mass manufacturing tech caught up in a big way by about the 1980s, so the cost started to drop like a rock as more and more process automation was implemented. It was not because cheaper materials were used or because we discovered something new that we didn't already know. During the 2010s we figured out ways to optimize every aspect of the manufacturing process. Everything since then has been about the efficiency of processing the materials. We've been tinkering with Perovskites for many years now; unfortunately, sunlight degrades these otherwise interesting crystals.

Volts, Amps, Watts, and bulk electrical conductor wiring and power conversion tech have been very stable and remarkably resistant to tech advancement for a very long time, with Graphene and CNT being the only interesting new developments. Room temperature superconductors remain the stuff of science fantasy and probably always will. The few superconductors we've found that operate closer to room temperature come with severe practical drawbacks relative to superconductors operating at more cryogenic temperatures; the hydride superconductors, for example, only work under enormous pressures. Power electronics have improved by leaps and bounds with the introduction of fast solid-state switches (MOSFETs) capable of switching gobs of power over very brief periods of time. Those gains have largely been realized, so there's not much room for dramatic improvements here either, apart from cost.

Electric motor-generators are subject to magnet and conductor wiring improvements. We've optimized them to between 96% and 99% overall efficiency in terms of mechanical-to-electrical or electrical-to-mechanical. While a 100% efficient device would require no cooling, even the most power-dense motors are well within our ability to cool. There's almost nothing left to extract in the way of energy efficiency. Other forms of efficiency such as mass and monetary efficiency are still subject to materials improvements made with CNT conductor wiring and composite casings and shafts. That said, they're becoming about as cheap, powerful, efficient, and pervasive as they possibly can be. All ongoing research is about improving power density per unit mass and volume, or optimizing a type of electric motor to serve a specific application. We can make these devices cheaper, more closely matched to desired operating speeds, or lighter and smaller. We cannot improve their fundamental efficiency to any meaningful degree.
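As a rough illustration of how little efficiency is left on the table, the sketch below computes the heat a machine must reject at the 96% and 99% figures quoted above, plus a hypothetical 99.9%, for a hypothetical 1 GW output (the same rating as the motor-generator in the earlier mass breakdown).

```python
def waste_heat_w(output_w: float, efficiency: float) -> float:
    """Heat a machine must reject while delivering output_w at the given efficiency."""
    input_w = output_w / efficiency
    return input_w - output_w

RATED_OUTPUT_W = 1e9  # hypothetical 1 GW machine

for eta in (0.96, 0.99, 0.999):
    heat_mw = waste_heat_w(RATED_OUTPUT_W, eta) / 1e6
    print(f"{eta:.1%} efficient: {heat_mw:.1f} MW of heat to reject")
```

Going from 99% to 99.9% removes only about 9 MW of heat from a 1 GW machine, which is why the remaining work targets mass, volume, and cost rather than efficiency.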

Where the heck does that leave us?

If we attempt to electrify everything using existing photovoltaics / electric motor-generators / electro-chemical battery tech, then there's going to be a colossal materials mining energy input, materials processing energy input, whopping mass penalties over thermal engines, metal scarcity issues, and major recycling efficiency penalties to pay for doing that. The benefits achieved by electrification are broadly applicable ONLY to electrical or electronic devices. Having a 100% efficient electrical conductor does nothing whatsoever to change the total input power required to melt 1,000kg of scrap steel, or to move a 20,000kg semi-truck down the road, for example. It's a stupid shell game. We made one aspect of the process more efficient while making other parts of it massively inefficient.

A Tesla semi-truck is about 50% more efficient than a diesel truck, which is fantastic, but you need at least two of them to move the same payload the same distance over the same amount of time. That's a rather annoying problem to have, especially since the Tesla costs a lot more than a diesel semi. What's "the real solution" / "actual answer"? If efficiency matters so much and you don't want people to burn something, then use a train, because physics greatly favors steel wheels on steel track over rubber wheels on blacktop. If you want to put batteries on a train, their weight matters a lot less than on a road-bound motor vehicle equipped with rubber tires rolling on physically "weak" asphalt road surfaces that can't handle additional weight. Simple as. Always has been. Pipelines, cable cars, trains, and airships can all be powered by photovoltaics in a practical way. You cannot power semi-trucks, cars, airplanes, or ships using photovoltaics or wind turbines.
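One way to put numbers on the semi-truck comparison is to look at energy per unit of payload moved. This sketch assumes "50% more efficient" means 1.5 times less energy per truck-km at the vehicle, and takes the post's "at least two" trucks per diesel payload; it ignores upstream generation and charging losses and the price difference, so it only restates the stated ratios.

```python
def relative_energy_per_payload(efficiency_ratio: float, trucks_needed: float) -> float:
    """Energy to move a fixed payload a fixed distance, relative to one diesel truck.

    efficiency_ratio: how many times less energy one electric truck uses per km
        (ASSUMPTION: 1.5 is the reading of "50% more efficient").
    trucks_needed: electric trucks required to do the work of one diesel truck.
    """
    return trucks_needed / efficiency_ratio

print(f"One truck suffices: {relative_energy_per_payload(1.5, 1):.2f}")
print(f"Two trucks needed:  {relative_energy_per_payload(1.5, 2):.2f}")
```

Under these assumptions, needing two trucks turns a one-third energy saving into a one-third energy penalty per unit of payload.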

Everything we could possibly hook up an electric power cable to in the mining industry already has one, but the issue is that all those power cables are connected to coal or natural gas power plants. Since there is zero energy storage and near-zero thermal power from solar heat, all we're really doing is burning more stuff to mine more metal while failing (badly) to keep pace with demand.

We've already achieved whatever marginal benefits were achievable through electrification, and have largely moved from electrification to electronization (trying to put computerized control circuitry in absolutely everything, whether it needs it or not). Every remaining application for electricity has become increasingly inappropriate. The problem is that people view electricity as "magic". Gwynne Shotwell called electricity, lasers, and solar panels "magic" several times during an interview she gave a couple days ago. Even a mechanical engineer thinks electricity is "magic". We're now converting thermal energy into electricity and then converting it back into thermal energy. That is just silly when more efficient methods have existed longer than electric grids.
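The thermal-to-electric-to-thermal round trip can be sketched as a chain of multiplied efficiencies. The 38% plant efficiency and 95% grid delivery figures are hypothetical placeholders, and the sketch assumes resistive heating at the end; heat pumps, which deliver more heat than the electricity they consume, are not modeled.

```python
# HYPOTHETICAL efficiencies for a heat -> electricity -> heat round trip.
chain = {
    "thermal power plant": 0.38,
    "grid transmission and distribution": 0.95,
    "resistive heater": 1.00,
}

# Overall efficiency is the product of every step in the chain.
overall = 1.0
for efficiency in chain.values():
    overall *= efficiency

print(f"Heat delivered per unit of fuel heat: {overall:.2f}")
```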

We need to try something new, something which at least has the potential to work at a vast scale, at reasonable cost, with material demands we can keep up with, while not invoking things that don't exist, such as "magic", to explain why something works or doesn't. Photovoltaics and wind turbines never became what we hoped they would. After nearly 200 years of development, with intense development over the past 75 years, they're nowhere close to replacing anything. If internal combustion engine development progressed as poorly as photovoltaics, we would've given up on them, much as we did on electric cars, until someone decided that "magic happened" because we had modestly better batteries in the 2010s vs 1910s. We have nuclear thermal and solar thermal for most uses. Everything else is a niche solution, important to someone somewhere, but none of them will come close to powering human civilization within a single lifetime.


#419 2024-11-29 00:54:41

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,261

Re: Why the Green Energy Transition Won’t Happen

UK road fuel prices remain high due to retailers' margins.

https://oilprice.com/Energy/Energy-Gene … -Drop.html

A couple of things the article fails to mention.

1) Increasing proportions of UK fuel are produced using imported crude as North Sea production declines. The pound has taken a hammering against the dollar, so those costs are going up even if the dollar price of oil is stable.

2) Electric vehicles are eating into sales of petrol (gasoline). That means the fixed cost of the infrastructure needed to distribute fuel is being spread over smaller volumes, so the margin that retailers charge on what they sell has to increase.
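Both effects can be sketched with simple arithmetic. Every number below (dollar crude cost per litre, exchange rates, fixed distribution costs, litres sold) is hypothetical and chosen only to show the mechanism: a weaker pound raises the sterling cost of dollar-priced crude, and fixed costs spread over fewer litres raise the cost per litre.

```python
def crude_cost_pence_per_litre(usd_per_litre: float, usd_per_gbp: float) -> float:
    """Sterling cost of dollar-priced crude, in pence per litre."""
    return usd_per_litre / usd_per_gbp * 100

def fixed_cost_pence_per_litre(fixed_costs_gbp: float, litres_sold: float) -> float:
    """Fixed distribution costs spread over the volume actually sold, in pence per litre."""
    return fixed_costs_gbp / litres_sold * 100

# All inputs are HYPOTHETICAL.
# 1) Same dollar price of crude, weaker pound.
print(f"{crude_cost_pence_per_litre(0.50, 1.30):.1f}p -> {crude_cost_pence_per_litre(0.50, 1.25):.1f}p")
# 2) Same fixed costs, fewer litres sold as EVs take market share.
print(f"{fixed_cost_pence_per_litre(1_000_000, 10_000_000):.1f}p -> "
      f"{fixed_cost_pence_per_litre(1_000_000, 8_000_000):.1f}p")
```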

Last edited by Calliban (2024-11-29 00:56:15)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."
