New Mars Forums


#126 2024-03-20 16:33:24

- Terraformer

- Member

- From: The Fortunate Isles

- Registered: 2007-08-27

- Posts: 3,988


Re: Lithium used for batteries

Much of this stuff already is steel and Aluminum

Given the massive price difference between copper and aluminum, *are* there many areas left where aluminum can be substituted?

Use what is abundant and build to last


#127 2024-03-20 19:28:22

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

reddit - Ask Engineers - Why isn't Aluminum used for wiring in EV motors?

Jeff5877

I've spent 12 years designing large electric motors. I'm a mechanical engineer, so I have no formal education in the theory, but I know enough to be able to talk design tradeoffs with the simulation folks who do the heavy electromagnetic design.

TLDR - motor designers are optimizing for the lowest possible motor size and weight. Using aluminum instead of copper would require more total motor volume and weight to achieve a given power level due to the increased resistance losses from aluminum. Copper is typically only about 10% of the weight and cost of an electric motor, and the increase in size required with aluminum windings would overwhelm any weight savings in the winding.

We make high-performance motors for EVs, so we're always trying to design for the smallest possible spaceclaim and lowest possible weight while getting out the most performance. The answer could be different for smaller components, lighter duty components, etc. I have no experience with the design tradeoffs for those applications so can't comment either way.

If you ignore cooling for a minute, the fundamental factor that is going to dictate the size of an electric motor is the magnetic flux density you can generate at the airgap between the rotor and the stator. Wrap a coil of wire around a piece of iron, and the flux density you can generate from that electromagnet is proportional to the number of turns of wire x the current through that wire. But that's only true up to a point, for the typical materials that stator laminations are made out of, once the flux density in the steel exceeds ~2 Tesla, the steel starts to saturate and you don't get any additional flux density for higher and higher currents past that point.

Since you're designing to optimize the output torque/power, you start a motor design knowing the maximum flux density you want to target (1.8T-2T), and your stator windings need to be able to provide the amp*turns required to achieve that flux density. That effectively translates into a maximum force per unit area on the OD of the rotor, so your maximum motor torque is then proportional to rotor L*D^2 (rotor length times diameter squared).
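A minimal sketch of that scaling, assuming the "maximum force per unit area" is a constant tangential shear stress acting on the rotor's outer surface. The 30 kPa value is a placeholder I picked for illustration, not a figure from the post; only the ratios matter here.

```python
import math

def rotor_torque(shear_stress_pa: float, diameter_m: float, length_m: float) -> float:
    """Torque = shear stress x rotor surface area x lever arm (D/2)."""
    surface_area_m2 = math.pi * diameter_m * length_m
    return shear_stress_pa * surface_area_m2 * diameter_m / 2.0

SHEAR_STRESS_PA = 30e3  # hypothetical placeholder value

base = rotor_torque(SHEAR_STRESS_PA, 0.20, 0.10)
longer = rotor_torque(SHEAR_STRESS_PA, 0.20, 0.20)  # double the length
wider = rotor_torque(SHEAR_STRESS_PA, 0.40, 0.10)   # double the diameter

print(f"double L -> torque x{longer / base:.2f}")
print(f"double D -> torque x{wider / base:.2f}")
```

Doubling the length doubles the torque, while doubling the diameter quadruples it, which is the L*D^2 dependence described above.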

After sizing the rotor, there are optimum stator slot geometries that mostly dictate how much room you have available for the stator windings. Typically you'll see roughly a 50/50 split between tooth width and slot width.

At this point, you'd think that you could just use lower current and more turns to get the same amount of flux as copper without increasing your I^2*R losses, but there are two problems with this. 1) to increase the number of turns in a slot with a fixed size, the conductor has to get smaller - this offsets most of the benefit, and 2) increasing turns increases torque at low speed, but increases the back EMF at high speed and causes you to lose capability at the high end of the speed range. Selecting the correct number of turns is a balancing act to make sure you have the needed torque at both the low and high end of the speed range.

All that is to say, for a given torque speed curve, there is an optimal lamination geometry and turn count to achieve the desired performance (really a family of optimal geometries with different L/D ratios). So, let's look at what changing from copper to aluminum would do:

A quick word on temperature. Temperature of the winding is a critical factor in motor engineering. The insulation materials used all have a temperature rating for how much temperature rise they can withstand and for how long. High-performance motors use class H insulation, which allows for a 180C maximum operating temperature. The rule of thumb is that the insulation system life reduces by a factor of 2 for each 10C increase in operating temperature, so thermal management is critical.
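The rule of thumb above can be written as a one-line formula. This is only a sketch of that rule, assuming the 180C class H limit is used as the reference point; the function and parameter names are mine, and real insulation life depends on much more than steady-state temperature.

```python
def relative_insulation_life(temp_c: float,
                             reference_temp_c: float = 180.0,
                             halving_step_c: float = 10.0) -> float:
    """Life relative to life at the reference temperature.

    Implements the rule of thumb: life halves for every
    `halving_step_c` degrees of extra operating temperature.
    """
    return 2.0 ** ((reference_temp_c - temp_c) / halving_step_c)

for temp in (170.0, 180.0, 190.0, 200.0):
    print(f"{temp:.0f}C -> {relative_insulation_life(temp):.2f}x reference life")
```

Running 20C above the reference cuts the estimated life to a quarter, which is why a modest increase in winding loss matters so much.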

Option 1 - direct material switch without changing anything else. As you said, aluminum has about a 50% increase in resistance. This is going to add 50% more R*I2 loss and more than a 50% increase in temperature rise. In order to operate at the same temp rise as a motor with copper windings, you would need to operate the motor at maybe 2/3 the output power. You could try to optimize cooling, but high-performance motors are already designed to operate with maximally effective cooling (you are correct as you stated in a comment that the cooling can only be done on the coil surface, so you do deal with a square-cube law problem as the motor gets bigger).
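A rough sketch of the Option 1 arithmetic, assuming the post's figure of about 50% higher resistance and treating winding loss as plain I^2*R. It ignores the rise of resistance with temperature and every other loss mechanism, so it is optimistic compared with the "maybe 2/3" estimate above.

```python
import math

def loss_ratio(resistance_ratio: float, current_ratio: float = 1.0) -> float:
    """Winding loss relative to the copper baseline (loss = I^2 * R)."""
    return resistance_ratio * current_ratio ** 2

def current_ratio_for_equal_loss(resistance_ratio: float) -> float:
    """Current, relative to baseline, that keeps I^2 * R unchanged."""
    return 1.0 / math.sqrt(resistance_ratio)

ALUMINUM_VS_COPPER_RESISTANCE = 1.5  # "about a 50% increase", per the post

print(f"same current: loss x{loss_ratio(ALUMINUM_VS_COPPER_RESISTANCE):.2f}")
print(f"equal loss:   current x{current_ratio_for_equal_loss(ALUMINUM_VS_COPPER_RESISTANCE):.3f}")
```

Even in this idealized form, holding the loss constant means giving up close to a fifth of the current, and therefore a similar share of torque.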

Option 2 - increase the amount of space available for the aluminum. You can't really make the tooth width narrower without reducing your torque capability, so you'd have to just make the slots deeper. Unfortunately, in order to maintain performance, you would also have to grow the stator OD by the same amount. This means that for your 50% increase in slot area needed to match the loss of the copper, you are adding >2x the added slot area in extra lamination material at the OD, more than eating up any weight savings you get from using aluminum.

There might be another option I'm missing, but bottom line is that the performance characteristics of aluminum are so much worse than copper that using aluminum would end up increasing your size, weight, and cost to achieve the same performance as a motor with copper windings.

One final note - a lot of smaller induction motors actually do use aluminum rotor bars and end rings rather than copper as most larger motors do. This is a big cost saving as the squirrel cage can actually be cast in place around the rotor laminations, this lends itself quite well to high-volume manufacturing. This does lead to a performance hit relative to copper, but since temperature is not as critical on an induction motor rotor (due to not having to keep any insulation materials from overheating) the performance hit is small enough to make the cost savings worth it.

Edit: one other consideration I remembered is thermal expansion. Thermal cycling and thermomechanical stress are key life drivers of insulation systems, beyond absolute time-at-temperature limits. As a motor heats up, the windings get hotter than the surrounding steel that they are bonded to through an encapsulating resin. Along with the higher coefficient of thermal expansion of copper vs steel, this puts mechanical stress on the insulation materials. Aluminum has a ~45% higher CTE than copper, so even if you were able to get comparable temperatures, a motor with aluminum windings would experience thermomechanical stresses ~45% higher than a motor with copper windings.


#128 2024-03-20 19:28:40

- SpaceNut

- Administrator

- From: New Hampshire

- Registered: 2004-07-22

- Posts: 29,932

Re: Lithium used for batteries

It seems that, after all of the discussion, the conversion of fuels to power is at best 25% efficient, give or take 10 points, and possibly only under the right conditions. Solar and wind have their own losses due to storage conversions and the intermittency of those sources, so they suffer from similar efficiency levels. Water seems higher until you get droughts, and then you have no drinking water or water to grow crops with.

Nuclear seems to be 2 times that range but it comes with a high cost to establish the system.


#129 2024-03-20 22:26:10

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

The Energy Efficiency Fallacy

Figures provided by Professor Mark P. Mills

Energy Consumed to Provide Light for 4 hours of light per day, over an entire year

Prior to 1850s - 1,000lbs of whale to obtain whale oil

1850s - 300lbs of coal transformed into kerosene

1880s - 100lbs of coal Edison lightbulb

1960s - 10lbs of coal with then-current incandescent bulbs

2020s - 1lb of coal to power LED light

2020s - 0.2lbs of natural gas to power LED light

Total illumination provided prior to 1850s: 0.1 Trillion lumen-hours

Total illumination provided in 2020s: 1,000+ Trillion lumen-hours

We consume orders of magnitude more energy to provide illumination today, despite the fact that the energy required to provide that same 4 hours of light per day, over an entire year, has decreased by 5,000 TIMES!

Let that sink in for a moment. We increased the energy efficiency of creating illumination by 5,000X between the 1850s and 2020s, while demand for illumination increased by 10,000X over that same period of time.

How can that possibly be!

Energy efficiency was supposed to DECREASE our energy consumption. The simple answer is that it did reduce our consumption. Absolute efficiency of illumination increased by over 5,000X, as-indicated, between the 1850s and 2020s, to provide equivalent illumination over an entire year. The demand for that illumination is what obviously skyrocketed. Due to that simple and obvious fact, no energy consumption was EVER "saved" or "reduced"! Energy consumption radically increased.
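A quick sketch of the arithmetic behind those figures, taking the numbers listed above at face value. It assumes pounds of fuel stand in for energy, which is a simplification since whale oil, coal, and natural gas differ in energy content per pound; the function and variable names are mine.

```python
def lighting_trend(fuel_then_lbs: float, fuel_now_lbs: float,
                   lumen_hours_then: float, lumen_hours_now: float):
    """Return (efficiency gain, demand growth, net fuel-use ratio)."""
    efficiency_gain = fuel_then_lbs / fuel_now_lbs
    demand_growth = lumen_hours_now / lumen_hours_then
    net_fuel_ratio = demand_growth / efficiency_gain
    return efficiency_gain, demand_growth, net_fuel_ratio

# Figures quoted in the post: 1,000 lbs of whale vs 0.2 lbs of natural gas,
# and 0.1 trillion vs 1,000 trillion lumen-hours.
gain, growth, net = lighting_trend(1000.0, 0.2, 0.1e12, 1000e12)
print(f"efficiency gain: {gain:,.0f}x")
print(f"demand growth:   {growth:,.0f}x")
print(f"net fuel use:    {net:.1f}x")
```

On these numbers, demand grew about twice as fast as efficiency, so the fuel spent on lighting roughly doubles rather than falling.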

How did CO2 emissions remain flat in the 2020s?

Look at how much coal was required to provide illumination vs natural gas. Shockingly, natural gas derived CO2 emissions are almost exactly where coal derived CO2 emissions were about 10 to 15 years ago. Natural gas is a fuel that can provide 5X as much illumination (power input, per unit weight, when burned in a recuperated / combined-cycle gas turbine) as the garbage grades of coal left today.

That means we're not consuming 2X more energy, as I simplistically estimated without remembering that we now use recuperated gas turbines for electric power generation these days, which means we're getting 5X more energy.

Somehow, some way, we're consuming 5X more energy, and our CO2 emissions basically haven't budged from where they were when we stopped burning "dirty coal".

What are the lessons to be drawn from this ugly fact about energy efficiency and consumption rates?

Anybody who thinks some new energy-saving lightbulb, perhaps based upon a tiny semiconductor laser with its light randomly scattered to further reduce illumination energy demand, will reduce total energy consumption is either fantastically ignorant of history or well beyond delusional. They do not understand, not a little bit, nor at all, how it is that reducing the energy consumption of energy-consuming devices has the effect of greatly increasing energy demand.

Does anybody naively believe that was a fluke, some dumb accident of happenstance only applicable to our usage of lights?

Back in the 1950s, computers were the size of entire buildings, consuming tens of kilowatts to low megawatts of power- each! All of them put together were laughably less capable than a single $50 example of today's cellular telephone technology that can be purchased anywhere in the world. In the 2020s, computing power consumes more energy than the entire airlines industry- thousands of monstrously powerful machines that guzzle kerosene to hurtle through the air at speeds approaching 600mph, carrying over 2 billion passengers to their destinations every year. Somehow, all those thunderous flying machines consume less energy than near-silent computers, which have increased their compute efficiency BY OVER A TRILLION TIMES!

What should we learn? What should be our takeaway?

No amount of energy efficiency increase is ever going to offset demand. The only way our total energy consumption levels off, and by proxy CO2 emissions, is when energy becomes so unaffordably expensive that the entire global economy implodes. Even COVID only decreased total annual demand by about 10%, over a period of about one year.


#130 2024-03-25 09:46:24

- Spaniard

- Member

- From: Spain

- Registered: 2008-04-18

- Posts: 144

Re: Lithium used for batteries

Hi.

I'm sorry I couldn't reply to anything. As I thought, a sudden influx of workload and family matters combined to leave me unable to answer.

So I will make the quickest reply possible, at least for one post.

Spaniard,

That's not an indicator that "renewables are working". It's an indicator that we're consuming 2X as much energy as we were previously, and our CO2 emissions from natural gas are rapidly rising to the same level as coal.

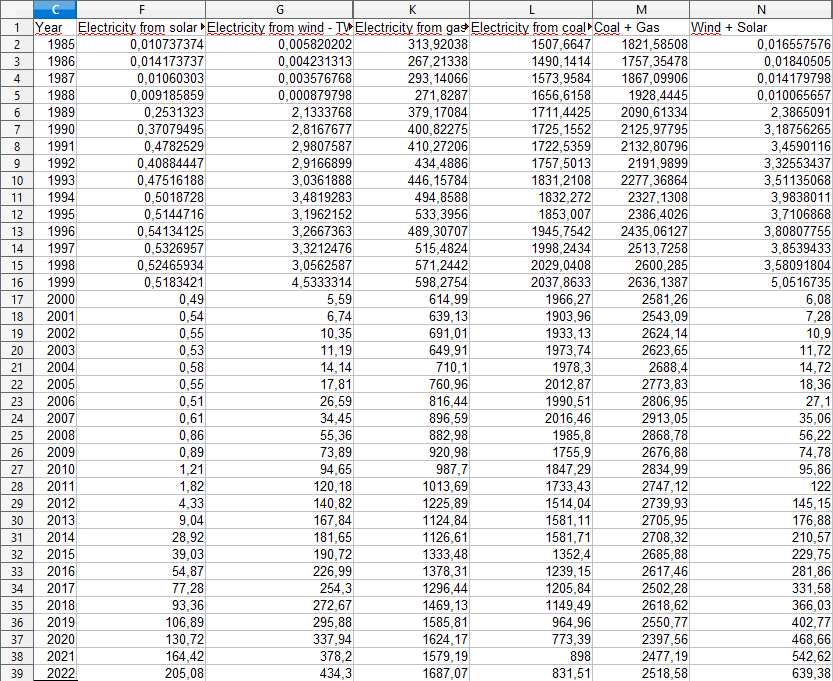

As graphs are sometimes lacking in detail, I made my own capture. I took the data from the CSV of the electricity mix and removed all columns except coal, gas, solar and wind. Then I added coal+gas and solar+wind columns.

As you can see, coal+gas has remained more or less flat. The maximum was reached in 2008, when coal pretty much peaked (in the USA); that was also the year with the most coal, which has been on a clear declining trend since.

Still, while total production grows, coal+gas remains at around 2400-2600 TWh, while solar+wind grows steadily each year, now amounting to 25% of coal+gas, with an accelerating trend.

In relative terms, coal+gas has lost share. In absolute terms, they have plateaued (although there is a trend of swapping coal for gas), while the growth of renewables pushes toward an absolute reduction in the coming years.

In terms of emissions per TWh, the CO2 reductions are clear. To reduce total emissions, more renewables are needed, so that solar+wind grows faster than demand.

With the current trend, that milestone is imminent.
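For anyone who wants to repeat the column selection described above, here is a minimal sketch using only the standard library. The column names and the two sample rows are placeholders made up for illustration (they are not real generation data), so adapt them to whatever the actual CSV uses.

```python
import csv
import io

# Placeholder CSV text; column names and values are hypothetical.
SAMPLE_CSV = """year,coal,gas,solar,wind,nuclear
1,3.0,2.0,0.5,1.0,4.0
2,2.0,3.0,1.0,1.5,4.0
"""

KEEP = ("coal", "gas", "solar", "wind")

def summarize(csv_text: str) -> list[dict]:
    """Keep only the four sources and add the two combined columns."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = {name: float(record[name]) for name in KEEP}
        row["year"] = record["year"]
        row["coal+gas"] = row["coal"] + row["gas"]
        row["solar+wind"] = row["solar"] + row["wind"]
        rows.append(row)
    return rows

rows = summarize(SAMPLE_CSV)
for row in rows:
    print(row["year"], row["coal+gas"], row["solar+wind"])
```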


#131 2024-03-25 13:31:03

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Spaniard,

It's wild to me that you can't or won't make the connection between what we're actually doing and the CO2 levels that you posted a chart of, to show me how wrong I was about emissions. Coal CO2 went down, but natural gas CO2 rose to take its place. One is not better or different than the other. 1Mt of CO2 from burning coal is precisely equal to 1Mt of CO2 from natural gas. CO2 is the same molecule, regardless of source or aesthetics.

We burned less coal because 1kg of natural gas burned by a combined-cycle gas turbine generates about 5X more energy than 1kg of coal burned in the 1920s to 1950s era coal-fired power plants we shut down. Despite our 5X gain in energy efficiency over those old "dirty coal" power plants, rather than CO2 emissions from coal burning becoming 1/2 as much CO2 emissions from natural gas burning, or 1/5 as much, which is what they should be, we're releasing the same amount of CO2- more in fact because we never stopped burning coal. There's no net change in emissions, except the quantities allocated to both sources. Thus far, all energy sources are additive, meaning they don't cancel each other out, or energy gods forbid, actually go down, the mixes simply change over time... until we run out. Then the real pandemonium starts.

To top it all off, we're actually getting 5X more energy in the process. We gained 5X efficiency, which is spectacular, but then we burned the CO2 emissions equivalent of 5X more natural gas, which means we burned 5X more natural gas, simple as that. That's not an accomplishment. It's yet another example, amongst a list of examples, of how energy efficiency increases have never once resulted in humanity consuming less energy. If CO2 emissions from natural gas fell to 1/5 of their previous levels, that would be an actual accomplishment worthy of high praise, and I would consider that "proof" that your assertion / the assertion of our green energy advocates, actually had merit and made good sense to someone who is not ideologically motivated by any of these changes.

In a subsequent post, I then showed the example of how global lighting affected energy usage over time. Illumination efficiency increased by 5,000X, but illumination demand increased by 10,000X. I didn't post numbers, but illustrated how computing has gone from something that didn't qualify as a footnote to our total annual energy usage during the 1950s, to something that now consumes more energy each year than the entire airline industry- an industry that burns jet fuel as an integral part of its business model. Flying used to be expensive and inefficient, so we made it cheaper and more efficient. Now 2 billion people fly each year. The astronomical increases in energy efficiency from lighting and computing only resulted in even more astronomical increases in energy consumption associated with those use cases for energy.

Our CO2 emissions functionally haven't budged from where they were, 10 years ago. After spending about 2 trillion dollars, meeting 3% of total global energy demand from wind and solar, does not a revolution make. It took 20 years to create 3% of our energy supply from wind and solar. At that rate, it will take 667 additional years to complete our "energy transition" to this fabled circular economy. To complete the task in 20 years, we will accelerate consumption and energy demand by 33.35X present rates, because the materials are where the embodied energy lies, and you cannot recycle what you do not currently possess.
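The extrapolation in that paragraph can be checked with a few lines. This sketch assumes the same straight-line growth the post uses (3% of demand in 20 years, with demand held constant); the function name and return values are mine.

```python
def linear_transition(share_so_far: float, years_so_far: float,
                      target_years: float):
    """Straight-line extrapolation of an energy-share build-out.

    Returns (years to reach 100% from zero, years still remaining,
    speed-up needed to finish the remainder in `target_years`).
    """
    rate_per_year = share_so_far / years_so_far
    total_years = 1.0 / rate_per_year
    remaining_years = (1.0 - share_so_far) / rate_per_year
    speedup = (1.0 - share_so_far) / target_years / rate_per_year
    return total_years, remaining_years, speedup

total, remaining, speedup = linear_transition(0.03, 20.0, 20.0)
print(f"total from zero: {total:.0f} years")
print(f"remaining:       {remaining:.0f} years")
print(f"speed-up needed: {speedup:.1f}x")
```

The post's 667 years and 33.35X match the from-zero total (667 / 20). Counting only the remaining 97%, the same extrapolation gives about 647 years and about 32X, which does not change the argument.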

I do believe you actually understand this, you simply don't like what it means. I don't like it, either. I'm all for something that's proximal to solving the problem. I'm against conflating activity with accomplishment. A Chinese fire drill is definitely an activity that someone could participate in, but not one that they should expect will accomplish anything worth doing. Tell me you at least understand and accept that we're going to burn coal and gas like mad over the next 2 to 3 decades in a desperate attempt to create these green energy machines, because no other energy sources can provide the input power required to do that, and at least then I'll believe you are accepting of current technology reality. We're gambling / betting our future on being able to re-create all of this green energy tech in another 20 to 30 years without using any hydrocarbon fuels to do it. For everyone's sake, we'd better be very certain about our ability to do that. Our confidence level should be similar to our confidence in the Second Law of Thermodynamics, because regardless of how much better you think electricity is, that law will apply with full force to whatever solution we come up with.


#132 2024-03-25 17:39:56

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,271

Re: Lithium used for batteries

To top it all off, we're actually getting 5X more energy in the process. We gained 5X efficiency, which is spectacular, but then we burned the CO2 emissions equivalent of 5X more natural gas, which means we burned 5X more natural gas, simple as that. That's not an accomplishment. It's yet another example, amongst a list of examples, of how energy efficiency increases have never once resulted in humanity consuming less energy. If CO2 emissions from natural gas fell to 1/5 of their previous levels, that would be an actual accomplishment worthy of high praise, and I would consider that "proof" that your assertion / the assertion of our green energy advocates, actually had merit and made good sense to someone who is not ideologically motivated by any of these changes.

You have described Jevons's Paradox.

https://en.m.wikipedia.org/wiki/Jevons_paradox

As the efficiency of a device improves, operating cost declines. People can suddenly afford a lot more of whatever service it provides. Demand for the service increases rapidly, resulting in an overall increase in energy demand, despite the improvement in energy efficiency of each device. There are many examples of this.

Cars became practical mass market devices only after the internal combustion engine was developed. ICEs have unrivaled power density and can be mass produced from base metals like iron and steel. They are fuelled by one of the most energy dense chemical energy sources known. It is a liquid with a high vapour pressure that can be stored at atmospheric pressure. We don't have to make the fuel as such. Nature has already done that. We only need to dig it out of the ground. Doing that isn't free, but it is cheap compared to making a chemical fuel from scratch.

When you think about the advantages that fossil fuelled ICEs have, it is a very high bar to cross for any rival system that might challenge their dominance. It really isn't surprising that a manufactured battery powered vehicle, struggles to compete. It is competing against something that nature has already given almost for free.

Sadly, there is only so much rotten dinosaur juice in the Earth. We have burned through about half of it. We have built a transportation system based on single occupancy, 2 tonne vehicles that travel 10-20x faster than a man can walk for 500 miles non-stop. Now our fossil fuel inheritance is running low. We find to our surprise that other methods of powering this profligate transport system are more costly, have inferior energy density, shorter range and require more metals. Who would have thought? Humans are poor at adapting their thinking. Could it be that such an energy intensive transportation system only works when you have cheap, liquid energy? The sort that you dig out of the ground?

Last edited by Calliban (2024-03-25 18:05:58)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."


#133 2024-03-26 08:53:46

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Calliban,

I'm looking for some part of battery and electric vehicle performance rhetoric to start aligning with practical reality. For example, if we built Lithium-ion batteries that truly lasted for say, 20 years, then regardless of what happens to the rest of the vehicle after a mere 5 years (frame rusted out, accident happens, vehicle submerged), we unbolt the working battery from the non-working old vehicle, bolt it into a new vehicle, and away we go. I'm not opposed to the experimentation and tinkering, because that's how technology advances, but I want it to lead somewhere worthwhile. If Sodium-ion batteries can be made more cheaply, but more importantly can be made to last for 20 years, then I consider that a win. Driving up the cost of new tech, merely to increase the energy density, but with the same or worse cycle life, is not a win. Energy density needs to improve to replace hydrocarbon fuels, but serviceability is a real concept that requires more than lip service paid to it.

We need a battery with the size / weight / dimensions of an engine block, that bolts into a normal vehicle like a normal engine block, so that a vehicle of normal size and weight can be driven around on battery power. A subcompact car with the weight of a full sized pickup truck either requires a truck-strength chassis, truck tires, and truck brakes, or else the crushing weight of the vehicle components makes the vehicle a "not a subcompact car", that constantly requires new tires and brakes, and has repeated basic structural failures like the Tesla CyberTruck. That was adequately solved before most people alive today were born. We simply don't see failures like that inside of a few thousand miles of driving in normal vehicles, cars or trucks. As battery energy density gradually improves over time, as it has in the past, and as we should expect in the future, then the battery powered vehicle goes farther and faster. What would normally be a 2,500lb vehicle when powered by a combustion engine simply cannot have an extra 2,000lbs of weight bolted to it without real physical consequences, even if you distribute all that additional weight evenly along the underside of the vehicle's passenger compartment. If the battery was small and light enough to place inside the engine compartment, then there'd be no point to doing something like that.

Existing combustion engine cars were intelligently designed for passenger transport. If there's a fire in the combustion engine, then it typically starts in the engine compartment, which is not located directly underneath where all the passengers sit. Having a trunk where the engine goes is a rather useless design feature. The underside of the vehicle should contain nothing but a steel passenger bucket. Designing a vehicle that way, the entire vehicle doesn't get totaled by an insurance company because you went over a speed bump or pothole in the road and dented the battery casing. Put the battery back in the engine compartment where it belongs. The battery and motors are the "new engine", so treat them that way for sake of economy / practicality / passenger safety. These new engineers are not any smarter than the engineers working for Henry Ford. They have more information, but they're making decisions that transform vehicles, durable goods by any other name, into disposable appliances. The engine was placed there for multiple reasons, not because it was utterly impossible to install a combustion engine somewhere else. All those vehicles that put the engine "somewhere else", well, you don't see many of those on the roads, because they weren't practical. The additional hassle / expense / complexity associated with doing that was deemed not worthwhile by the majority of automotive engineers, who were more interested in designing and building practical cars than trying to prove how clever they were.

If the battery was the same size and weight as a small block V8, with the drive train built into a K-member like an old Chrysler, then someone could rear end the car or T-bone the passenger compartment, but that doesn't result in a loss of the most expensive part of the car- the engine and drive train. To reuse your electric "engine", you drop the K-member out of the busted vehicle, the battery and electric motors come with it- those expensive functional bits of the vehicle, and you bolt them into a new vehicle chassis. There's no transmission to mess with, and very little in the way of fluids, so the entire process is about as fast as it possibly can be, thanks to the new battery and electric motor paradigm.

Whether or not this way of doing things allows engineers to prove how clever they are, or not, is largely irrelevant to the design and implementation of practical and affordable electric vehicles that most people can buy and maintain. After the novelty of "something new" wears off, such as mounting the battery to the passenger compartment, you're left with the practical issues related to living with the novelty. Thus far, those issues have included how to avoid incineration if the battery catches fire, how the fire department can put the fire out, and how or if the vehicle can be repaired if the battery is physically damaged by driving the vehicle over a less-than-perfect road.

We created big block V8s to obtain enough power when compression was very low and combustion chambers rather inefficient. Around that same time, small block V8s became much more powerful and could power most light vehicles- everything from subcompact cars to small dump trucks. Later on, I6s / V6s / turbocharged I4s replaced the small block V8s and provided equivalent power. Now we have EV motors you can pick up with one hand that supply as much power as the most powerful small block V8s of the muscle car era, and all the torque, from zero rpm to redline. The battery is the new fuel tank, one that can be co-located with the motors if you stop trying to replicate combustion engine driving range and start trying to make vehicles that most people can afford to own and operate. Once you create a real aftermarket, one supported by both OEM and parts companies, you can start converting existing vehicles, so entirely new vehicles don't need to be built from scratch, because that process will take another 40+ years at current production rates.


#134 2024-03-26 09:30:34

- Spaniard

- Member

- From: Spain

- Registered: 2008-04-18

- Posts: 144

Re: Lithium used for batteries

Man... Even more workload has now been added to my already busy work queue.

Very fast answer

Spaniard,

...

I do believe you actually understand this, you simply don't like what it means. I don't like it, either.

We are clearly showing two different things. I didn't omit the shift from coal to gas in my original comment. But I am showing you now how coal+gas has pretty much peaked while renewables are filling the gap of growing demand, at an increasing pace.

If you extend the trend, you can see that not only will coal almost disappear, but gas will also start to decrease, and sooner than you might expect.

That said, once a certain NG/renewable ratio is reached, adding more renewables will require storage, so once that point is reached I expect both to grow again, until storage+renewables become competitive with gas.

And that depends on new advancements that are still in the future.

About the Jevons paradox, I read more than enough in my former peak-oiler days.

It's not written in stone. You must understand the internal dynamics to know when we can expect it to apply and when it will not.

It's a mixed technological/economic phenomenon.

A quick summary.

Efficiency and other improvements reduce the cost.

People can buy more for the same money, so they can choose: save or redirect the money to another purpose, or increase consumption.

When there is a significant benefit to increasing consumption, that outcome is the most probable.

Those are pretty much the inner workings of the Jevons paradox, although peak-oilers don't explain it like that and prefer to say it's an expected process (without really explaining it) where efficiency just increases consumption. It's not so direct, and that's not an explanation, even though they usually present it that way.
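The mechanism summarized above can be sketched with a constant-elasticity demand curve. This is a common textbook simplification that I am assuming for illustration, not something stated in the thread: the cost of the service falls in proportion to the efficiency gain, and demand for the service responds with a fixed price elasticity.

```python
def energy_use_ratio(efficiency_gain: float, price_elasticity: float) -> float:
    """Energy use after an efficiency gain, relative to before.

    Assumes cost per unit of service falls by `efficiency_gain`, and
    service demand scales as cost ** (-price_elasticity).
    """
    service_ratio = efficiency_gain ** price_elasticity
    return service_ratio / efficiency_gain

for elasticity in (0.0, 0.5, 1.0, 1.5):
    ratio = energy_use_ratio(2.0, elasticity)
    print(f"elasticity {elasticity:.1f}: energy use x{ratio:.2f}")
```

Under these assumptions, energy use only rises after an efficiency gain when the elasticity is above 1. Saturated demand corresponds to a low elasticity, in which case efficiency does reduce consumption.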

But here is the thing. There are circumstances/limits under which the Jevons paradox doesn't work.

First. Efficiency is linked to energy. Price is linked to a bunch of things; energy is just one variable. For example, land has a price, but it has no monetary cost of its own besides whatever we add by overcomplicating things (e.g. bureaucracy). Those are costs linked not to energy but to supply and demand.

So better efficiency does not always turn into a better price, or it may have only a minor impact. If the price stays similar to what it was before the efficiency increase, the Jevons paradox won't work.

Second. Demand sometimes has limits. Think of food, for example. No matter how cheap food becomes, you aren't going to eat more than a certain quantity. Yes, you can increase the quality of the food, but there is a natural limit to that.

You mentioned illumination. I'm pretty sure that, depending on when and where you are counting, the energy spent on it has decreased significantly. In Western homes that has pretty much occurred. The reason is that we already have more than enough light for most uses, so no matter how efficient lighting becomes or how low the cost falls, there is no need to increase the quantity of light there.

Of course, culture also affects those limits. Europeans, for example, are a lot more prone to limiting light pollution (an environmental issue). In the streets, if you ignore this kind of waste, there is room to increase far beyond current levels.

You shouldn't mix different markets that are driven by different variables, or you will reach the wrong conclusion.

In the past, and in poor countries today, there was and is a significant lack of light, so there is room for a huge increase in consumption there. In other cultures, there is no push beyond population growth.

Third. Any number multiplied by zero is zero. If we reach zero emissions per unit of energy, it makes no sense to invoke Jevons paradox to claim that emissions will never go down.

In fact, this argument is almost the same as the first. Zero emissions will never mean zero cost, so consumption cannot grow toward infinity, which is the only thing that could offset emissions per unit very close to zero. As costs become more and more tied to factors other than emissions, you can expect that phenomenon to disappear.
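
A minimal sketch of the "multiplied by zero" point, assuming total emissions are simply energy consumed times emissions per unit of energy. The function name and all the numbers are hypothetical and only illustrate the arithmetic:

```python
def total_emissions(energy_consumed, emissions_per_unit):
    """Total emissions = energy consumed x emissions per unit of energy."""
    return energy_consumed * emissions_per_unit


# Hypothetical, unitless numbers chosen only to illustrate the identity.
baseline = total_emissions(100.0, 0.5)           # starting demand and intensity
rebound = total_emissions(150.0, 0.1)            # demand up 50%, intensity down 80%
zero_carbon = total_emissions(1_000_000.0, 0.0)  # huge demand, zero intensity

print(baseline, rebound, zero_carbon)
```

For emissions to rise, demand would have to grow faster than intensity falls, and at zero intensity no finite demand can do it.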

Those are the reasons why Jevons paradox is not written in stone. Other things can change the result, including cultural changes that push for reduced consumption, especially of consumption that reduces your quality of life (even if you don't know it).

In the end, the point to understand is that increasing efficiency is not the problem, but that nothing can avoid problems if you don't limit your consumption. Unlimited consumption is impossible to provide.

That said, our per capita consumption, at least of energy, stabilized in western countries years ago. This doesn't seem like a severe problem compared with others related to the transition.

Last edited by Spaniard (2024-03-26 10:08:31)


#135 2024-03-26 14:31:22

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Spaniard,

7. Very long term trends in lighting efficiency and consumption

Source: Data is taken from Fouquet and Pearson 2007 and Fouquet 2013

There seem to be at least three good reasons for suggesting that demand for lighting services will continue to grow strongly in the coming decades. First, interior light levels are still well below the intensity of daylight, by as much as one or two orders of magnitude. There is no immediately apparent reason why people should have an intrinsic preference for lower light levels than found naturally, at least for much of the time, and especially in winter.

Second, every major technological shift has caused an increase in the consumption of lighting services in the past. LED lighting now appears, together with other technologies, to be introducing such a major technological shift. There will surely be significant progress in the coming decades, with costs falling and the quality of the light improving. If the historical pattern is followed this will lead to an increase in demand.

Third, incomes will continue to rise, which is also likely to lead to an increase in demand.

Working in the other direction, in many existing buildings there may be many instances in which people simply replace older halogen bulbs with LEDs generating a similar amount of light, simply because that is what’s available. This generates a large efficiency saving.

In their more recent research Fouquet and Pearson have estimated both price and income elasticity of lighting demand over time. They have found that both income and price elasticities have fallen below the very high levels found in the late nineteenth and early twentieth centuries. But they are still materially different from zero. Demand for lighting services can be expected to grow even in a mature market such as the United Kingdom over the next half century, given expected increases in income and reductions in the price of lighting services. Estimates indicate that despite increases in average efficiency of lighting of 2% p.a. electricity use for lighting could still increase by more than a third in the coming decades. Even if elasticities do fall somewhat over time, with greater demand saturation than yet evident, and other cost increases somewhat offset price falls due to efficiency there will be major limitations to the ability of lighting efficiency to produce very marked percentage reductions in emissions relative to current levels.

This analysis does not necessarily generalise to other services. Lighting remains a minority of total electricity demand even in the residential sector, and there may be greater saturation effects in other applications, for example in some domestic appliances. For example, energy use by refrigerators sold in the US has declined enormously since the mid-1970s. However other sectors, such as passenger transport and domestic heating, also show continuing growth in demand for energy services over the very long term, confirming that the type of trend found for lighting is not unique.

Increased lighting efficiency, however desirable, thus seems unlikely to do much to avoid the need for very large amounts of low carbon electricity generation. Efficiency will help but it will be nowhere near enough on its own.
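
A rough sketch of the rebound arithmetic described in the quoted passage, assuming a simple constant-elasticity demand model and a constant electricity price. Only the 2% p.a. efficiency gain comes from the text; the elasticity values, the income growth rate, the 30-year horizon and the function name are illustrative assumptions, not Fouquet and Pearson's estimates:

```python
def electricity_use_factor(years, efficiency_growth, income_growth,
                           price_elasticity, income_elasticity):
    """Ratio of future to current electricity use for lighting.

    Assumes the price per lumen falls in proportion to efficiency gains and
    that demand for lumens follows constant price and income elasticities.
    """
    efficiency = (1.0 + efficiency_growth) ** years
    income = (1.0 + income_growth) ** years
    price_per_lumen = 1.0 / efficiency
    lumens = price_per_lumen ** (-price_elasticity) * income ** income_elasticity
    return lumens / efficiency


# Saturated demand: elasticities of zero, so efficiency gains are pure savings.
saturated = electricity_use_factor(30, 0.02, 0.02, 0.0, 0.0)

# Hypothetical elasticities that are "materially different from zero".
elastic = electricity_use_factor(30, 0.02, 0.02, 0.5, 0.7)

print(f"saturated: {saturated:.2f}x, elastic: {elastic:.2f}x")
```

Under these assumptions, electricity use falls when demand is saturated and rises when the two elasticities together outweigh the efficiency gain, which is the crux of the disagreement.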


#136 2024-03-26 15:19:23

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Here it is again in absolute terms:

Global Energy Usage, 1965-2020

Per capita energy usage declined, because we added massive improvements in efficiency, but we also added a massive number of "capitas". Oddly enough, energy usage increased.

Increasing energy usage with increasing efficiency is not an absolute, but if all the stuff you want to build requires a lot more materials and therefore energy, then a lot more energy will be consumed.

Increasing energy usage with increasing demand for materials is an absolute. Raw ore requires energy to transform it into metal. All the green methods to transform ore into metal require a lot more energy and a lot more metal.

Note how we see that as energy demand went down, it went down for all sources, renewable or otherwise, and when it went up, it went up for all sources, renewable or otherwise.


#137 2024-03-26 17:43:19

- SpaceNut

- Administrator

- From: New Hampshire

- Registered: 2004-07-22

- Posts: 29,932

Re: Lithium used for batteries

So, all in all, it's our fault for using more, since it makes life easier to bear.

It's less time-consuming, but it comes at a cost that is making more of us lapse into poverty each day.


#138 2024-03-26 22:53:51

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

SpaceNut,

I'm not blaming people for using energy, I'm trying to get them to connect the dots that what they're proposing doing to "solve" their CO2 emissions conundrum, is to burn anything that burns at an ever-increasing rate. Somehow, some way, at the end of all that, we're supposed to achieve a "circular economy". All I see is a lot of circular reasoning that spares no effort to ignore what we're actually doing, which is the thing we're supposed to be doing less of, rather than more. The reason we're doing more of the thing we can't seem to admit that we're doing, is that what we're doing isn't working. It's not working because you cannot demand 100X to 1,000X more materials and therefore energy, and then expect to also burn less coal and gas, given that our entire real economy is almost entirely based on burning something. In 20 years time, if there's still anything left to burn, we'll burn it, guaranteed.

70% to 80% of the cost of a Lithium-ion battery is now the raw materials. Even if manufacturing and distribution costs fell to zero, the energy cost of demanding more and more of the raw materials over time would outstrip our ability to produce more. With coal / oil / gas, we started with an enormous resource base to draw upon, yet those energy inputs are harder and harder to come by. The total supply of Lithium metal is a minute fraction of the total quantity of coal and natural gas, and the price of Lithium has exploded.

Global Avg Lithium Carbonate Price Per Metric Ton, USD:

2019: $11,700

2020: $8,400

2021: $12,600

2022: $68,100

2023: $46,000

Global Avg Nickel Price Per Metric Ton, USD:

2019: $13,914

2020: $13,787

2021: $18,465

2022: $25,834

2023: $22,000
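
To make the size of those swings easier to see, here is a small sketch that computes the year-over-year and 2019-to-2023 percentage changes from the figures listed above. The prices are copied from this post; the variable and function names are just illustrative:

```python
# Average prices per metric ton in USD, as listed above.
LITHIUM_CARBONATE = {2019: 11_700, 2020: 8_400, 2021: 12_600, 2022: 68_100, 2023: 46_000}
NICKEL = {2019: 13_914, 2020: 13_787, 2021: 18_465, 2022: 25_834, 2023: 22_000}


def yoy_changes(prices):
    """Percent change versus the previous listed year, keyed by year."""
    years = sorted(prices)
    return {
        year: (prices[year] / prices[prev] - 1.0) * 100.0
        for prev, year in zip(years, years[1:])
    }


def total_change(prices):
    """Percent change from the first listed year to the last."""
    years = sorted(prices)
    return (prices[years[-1]] / prices[years[0]] - 1.0) * 100.0


for name, series in (("Lithium carbonate", LITHIUM_CARBONATE), ("Nickel", NICKEL)):
    changes = ", ".join(f"{y}: {c:+.1f}%" for y, c in yoy_changes(series).items())
    print(f"{name}: {changes} | 2019-2023: {total_change(series):+.1f}%")
```

On these figures, Lithium carbonate rose about 440% from 2021 to 2022 and ended 2023 roughly 293% above 2019, while Nickel ended 2023 about 58% above 2019.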

In forecasting, we'd call that a "regime change". Battery prices aren't going down because all prices are going up.

Thus far, I've heard, CO2 emissions are going down, yet we're not consuming less of anything, so that's an obvious lie. I've heard that we're not demanding more energy over time, especially from hydrocarbon fuel sources, another obvious lie. We've become much more efficient in our usage of hydrocarbon fuels, but we merely consume more energy over time as a result of the efficiency increase. I've heard that producing more short-lived electronic devices is going to make energy usage or CO2 emissions irrelevant, because any number multiplied by zero is still zero, but when asked as to how that is going to happen, the answer is to make and consume more metals, none of which are made without demanding more materials and more energy, and of course, generating more CO2, because we're burning more coal and gas to do that. It's all perfectly circular logic.

I think we need a better plan.


#139 2024-03-27 01:45:11

- Spaniard

- Member

- From: Spain

- Registered: 2008-04-18

- Posts: 144

Re: Lithium used for batteries

You have excerpted a certain part of the text. I'm going to excerpt another.

However, there appears to have been a plateau towards the last quarter of the 20th century, with a tick up at the end. This raises the important question of whether demand for lighting is saturating, or whether there is still room for growth in the demand for lighting services. If demand for lighting services is saturating then producing the same service with much less electricity may lead to substantial savings in emissions from electricity generation. However if demand has not yet saturated, increases in efficiency through the wider deployment of new technologies, especially LEDs, could lead to lower than expected savings, as increased efficiency reduces price per lumen and so increases demand, a rebound effect.

There seem to be at least three good reasons for suggesting that demand for lighting services will continue to grow strongly in the coming decades. First, interior light levels are still well below the intensity of daylight, by as much as one or two orders of magnitude. There is no immediately apparent reason why people should have an intrinsic preference for lower light levels than found naturally, at least for much of the time, and especially in winter.

First, the text considers a peak caused by saturation (more or less the same as the demand limit concept I described before).

Second, their view of what will happen in the future is mere speculation.

And the main argument is that our current lighting is way behind natural lighting.

I say this argument is flawed, because that's not how our sight works. From some very low level up to a certain point, more light makes an appreciable difference.

The photoreceptor cells in our eyes come in multiple types with different light sensitivity. If the light is too low, we lose color sensitivity and focusing precision, but it's still amazing how good human eyesight is, especially compared with most other animals, with few exceptions.

But once a certain point is reached, the sensitivity peaks, and then it's the pupil that comes into action to cut down the light, because otherwise the exposure would start to become excessive, which is bad.

In other words, no matter how much you increase the light, the sensitivity pretty much remains the same. There is no advantage in increasing the light; you see the same, so the argument is flawed.

Besides, if you check current lamps, you will see that most bulb sockets now have pretty low power limits compared to years ago. If you insist on running an old high-powered incandescent bulb for hours, you put the socket at risk, because it's not designed to dissipate that much heat.

In every house I know, there have been significant savings after removing the old incandescent bulbs. There wasn't an increase in consumption but the opposite. Sure, someone could add light in some places where it was lacking before, but nowhere near enough to increase overall consumption.

That's the difference between the past and the present. Light saturation. Demand limit. The reason why Jevons paradox can't be taken for granted.

Now I will reference just one plan in my country.

https://www.idae.es/en/news/inventory-e … spain-2017

On the one hand, the applicants for this programme have presented reforms that will allow them to obtain minimum savings of 65%. On the other hand, the various projects undertaken with the participation of the IDAE, as well as others executed by third parties and publicised in the media, show savings of over 80% when combining LED with the scheduled regulation of flows. These figures, which would be extraordinary in the reform of other types of energy consumption installations, mean that this sector, which is currently immersed in a technological change, is rapidly moving towards energy consumption values that are difficult to determine at this moment.

In fact, public movements went in the opposite direction: fighting nighttime light pollution. A demand peak.

Of course, if you start counting other uses of light and add them in as if they were the same thing, you can show whatever numbers you want. For example, you could count indoor plant cultivation, a sector that didn't exist before. Most of this sector is not even legal (drug cultivation). I don't consider that the same sector, even if it involves generating light. After all, the goal is not to let people see but to grow plants, which is completely different.

And again I insist: peak demand is the situation in developed countries, not in poorer ones. I'm pretty sure poor countries will increase their artificial lighting and, depending on their starting point, that will mean increasing consumption. And that's a good thing, because it's a huge leap in quality of life for them.

Remember that the core of my argument is none other than "Jevons paradox is not something that always occurs". Peak demand is not a theoretical thing but a reality in some markets, and even the text you cited admitted the possibility, even if it later argued in the opposite direction.


#140 2024-03-27 01:54:21

- Spaniard

- Member

- From: Spain

- Registered: 2008-04-18

- Posts: 144

Re: Lithium used for batteries

Here it is again in absolute terms:

And again, by using global data covering a lot of years in which renewables pretty much didn't exist, you hide the current phenomenon.

You need to look at the data more closely, and in the countries where renewables have been adopted sooner, to have a window onto future events and possible projections. If you hide that data by diluting it in global data, then by the time you see renewables crushing fossil fuels, that won't be a future trend but a confirmed past.

I see clearly that you don't want to focus on the current movements.

Note how we see that as energy demand went down, it went down for all sources, renewable or otherwise, and when it went up, it went up for all sources, renewable or otherwise.

That was COVID. This kind of anomaly occurs from time to time. Real data is not a perfect curve.


#141 2024-03-27 09:38:14

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Spaniard,

And again I insist: peak demand is the situation in developed countries, not in poorer ones. I'm pretty sure poor countries will increase their artificial lighting and, depending on their starting point, that will mean increasing consumption. And that's a good thing, because it's a huge leap in quality of life for them.

That will increase rather than decrease global demand for energy. That will consume more materials and thus energy to produce them. They're going to burn hydrocarbon fuels to do that, because those sources will be what they can afford. That's how we did it, that's how China did it, and that's how the rest of the developing world is doing it. Nobody who is struggling to feed their children gives a tinker's damn about the environment, and why would they?

And again, by using global data covering a lot of years in which renewables pretty much didn't exist, you hide the current phenomenon.

Global data is the only data that means something in the context of a global problem. Cherry picking countries that you think support your beliefs is the dictionary definition of ignoring "the big picture".

The use of global data was intended to show you the clear and unmistakable trend towards ever-greater energy intensity from all sources, and that it was all additive in nature. New energy technology did not replace the other existing sources, as they became available. Nuclear energy did not replace coal in industrialized countries. It's absurd to think that wind and solar will, at least within our lifetimes.

If nuclear energy, which is millions of times more concentrated than any other form of energy, could not do what is now expected of technologies that require 1,000X more materials and input energy to provide equivalent output, then it's downright silly to believe that such a thing will happen in any meaningful timeframe. To believe otherwise, and to outright ignore all the warning signs that it objectively is not doing what we want it to do, is the dictionary definition of cognitive dissonance.

All of your arguments about wind and solar were made by proponents of nuclear power. This time will be different or special, because we have different or special technology. That may be true, but it probably isn't. Every new group of communists also thought the last group of communists were not real communists and didn't know what they were doing, so that's why they failed. The problem couldn't possibly be their grand idea, at least not according to them, but that everyone else was "too stupid" to implement real communism. Yeah, sure they were.

You need to look at the data more closely, and in the countries where renewables have been adopted sooner, to have a window onto future events and possible projections

I have looked at data from countries like Germany- a large industrialized nation that spent all its money on renewable energy- a place filled with "forward thinking Europeans", who either lack basic math skills or cannot reconcile their ideologically-held beliefs about energy with actual results. It shows that CO2 emissions levels are what they were when they started this green energy silliness 20 years ago. They shut down their nuclear reactors, only to go back to burning coal, because those reactors were producing far too much energy without CO2 emissions, relative to the money spent on the reactors vs the renewables, which invalidated their ideology, so the reactors had to go.

It's easy for me to understand because I haven't been ideologically captured by this religious movement masquerading as science. It has all the features of Scientology and other weird new-age religions, but none of the features of science.

I see clearly that you don't want to focus on the current movements.

This is something you wrote and attributed to me. You want me to fixate on the minutiae of your arguments while ignoring the big picture. The GLOBAL CO2 emissions are the only thing that is relevant to "Anthropogenic GLOBAL Warming". I haven't lost sight of the big picture, because that's the only picture that's relevant to GLOBAL CO2 emissions.

If you need to use special data to illustrate your points about something that has to be a global phenomenon in order to achieve the desired result (ostensibly GLOBAL CO2 emissions reduction), assuming that result is math-based rather than ideology-based, then your argument is invalid.

That was COVID. This kind of anomaly occurs from time to time. Real data is not a perfect curve.

A real energy transition would show an unmistakable downward trend in GLOBAL CO2 emissions, but since all of our green energy technology requires consuming insane amounts of hydrocarbon energy to create it, we're not going to see that clear and unmistakable signal in the data until AFTER we burn the fuel to create those green energy machines.

Your arguments are, "we got this new thing going on that we call green energy, and it's going to work out great", "we're smart and we'll figure it out", and "look over here at this one thing that supports my beliefs, which still doesn't mean anything at a global level". Sorry, but that is thoroughly unconvincing. Hope and belief are not valid engineering principles. If historical data doesn't show what you want it to show, it's not because the data is wrong, it's because you have an unrealistic belief about how reality should work.

You could get really upset that you're not convincing me that your beliefs about this are correct, or you could come back to this idea in about 20 years after it's beyond obvious that wind and solar energy will only ever be part of the total energy mix, much like nuclear energy, unless they're implemented in radically different ways, as compared to what we're presently doing. By then you should be able to temper beliefs about the next big future with knowledge of how seemingly world-changing technologies of the past, didn't cause the world that existed prior to cease to exist.

The men who built the hydro dams during the Great Depression never thought or believed they'd still be in operation long after their children were dead. The men who built the B-52 thought all of them would be retired and replaced inside of 20 years, if not 10 years, such was the pace of innovation in aviation. They're all dead, most of their children are now dead, and their grandchildren are now flying them. All B-2 stealth bombers will be retired before the B-52s. The men who went to the moon thought many others would swiftly follow in their footsteps. Most of them are dead now. The only men, and now women, following in their footsteps, are the same ones who built and flew the giant moon rockets to begin with. It took 100 years to get to where we are today with industrialization, electric grids, indoor plumbing, environmental standards, human rights, etc.

Lasting change takes human lifetimes, often multiple human lifetimes, and what was previously viewed as "the most important thing in the world today", might not be a footnote tomorrow.


#142 2024-03-28 11:31:42

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

One application where I see an absolute hard requirement for Lithium-ion batteries is providing power to pure battery electric submarines for operations within littoral waters, especially in Asia and the Middle East, wherein the use of diesels or nuclear reactors is simply too noisy or cumbersome, even as quiet as they have become today, to assure the stealth required to afford a decent chance at survival for a submarine and her crew. Certain kinds of egregiously expensive nuclear submarines can operate in littoral waters, but their great size and poor maneuverability, relative to much smaller conventionally powered boats, makes them far better suited to fighting in open ocean.

Movement through littorals is typically at low speeds, it's done with great caution, and you want to stop and listen before maneuvering into or out of any particular area. This requires dead silent direct drive electric motors operated at low speeds and dead silent batteries. The existing diesel electric and nuclear electric submarines presently do this in a compromising way that effectively makes them no more capable, in terms of speed under water, than a pure battery electric submarine. Nuclear submarines can sustain high speeds for effectively unlimited periods of time, but in actual practice that's not how a war patrol works.

Nuclear submarines operated at low speeds without their cooling pumps turned on, meaning using natural convection to cool their reactors, are functionally limited to the same low speeds as diesel electric or battery electric submarines. If they turn their pumps on to go faster, then they show up on sonar. The diesel electric submarines can dive and briefly operate at higher speeds on battery power, but the additional weight of the diesel engines and fuel means they'll be coming up for air to run their noisy diesels on the surface to recharge their batteries. That makes diesel electric submarines prime targets for maritime patrol aircraft equipped with both anti-ship missiles and torpedoes. Decades ago, America developed tech to literally "sniff" for diesel fumes from aircraft or drones, so locating and targeting diesel powered submarines became far faster and more certain. Combined with satellites and drones, surfacing to recharge the battery, even at night, has become a very dangerous proposition.

I can demonstrate, using rather simple math, how a Barbel class or Albacore class submarine can use battery power alone to hunt enemy diesel electric submarines, missile boats, kamikaze drones, corvettes, frigates, and small landing craft. Anything larger than a frigate is probably best dealt with by nuclear powered submarines or carrier aircraft. Destroyers and cruisers tend to have better anti-submarine capabilities and helicopters, so fighting one of those with a less capable submarine is inadvisable, even if you can make it work. Most Russian and Chinese naval assets consist of smaller yet still very dangerous ships equipped with powerful anti-ship cruise missiles. Many of those vessels would be used to attack or defend against our carrier battlegroups, so taking them out of the fight or keeping them preoccupied with avoiding our electric submarines makes them far less effective at striking our major surface assets. As always, stealthy diesel-electric enemy submarines remain priority targets. Numerous fleet exercises have proven that nuclear powered submarines and aircraft carriers typically don't hear them coming.

Very large batteries are under dramatically less stress in a submarine operating its electric motor at full rated output, as compared to any battery electric vehicle: 3.35C for a Tesla Model 3 vs about 1/18C to 1/36C for a Barbel class submarine with a battery equal in tonnage to the diesel engines and batteries. There is therefore a reasonable expectation that the batteries could last for 10 to even 20 years with a modicum of Depth-of-Discharge discipline.
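
To put those C-rates in perspective, here is a minimal sketch using the standard definition of C-rate (discharge power divided by battery energy capacity, so a full battery lasts 1/C hours at that rate). The C-rates are the ones quoted above; the function name is illustrative, and no actual battery capacity or motor power is assumed:

```python
def hours_at_c_rate(c_rate):
    """Hours a full battery lasts when discharged continuously at c_rate."""
    return 1.0 / c_rate


TESLA_MODEL_3_C = 3.35                      # peak rate quoted above
SUBMARINE_C_RATES = (1.0 / 18, 1.0 / 36)    # Barbel class range quoted above

print(f"Tesla Model 3 at 3.35C: {hours_at_c_rate(TESLA_MODEL_3_C) * 60:.0f} minutes")
for c_rate in SUBMARINE_C_RATES:
    ratio = TESLA_MODEL_3_C / c_rate
    print(f"Submarine at 1/{round(1 / c_rate)}C: {hours_at_c_rate(c_rate):.0f} hours, "
          f"{ratio:.0f}x gentler than the car")
```

At full rated output the car battery would be drained in about 18 minutes, while the submarine battery would take 18 to 36 hours, a discharge rate roughly 60 to 120 times gentler.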

As of late, our nuclear powered submarines have also proven exceptionally troublesome to maintain. Present backlogs place both repairs and new construction years behind schedule. When operational, these nuclear attack boats are highly capable, but keeping them operational is the hard part. Completely silent all electric submarines, capable of completing 45 day war patrols on battery power alone, will be a vital addition to our overall fighting force in the littorals, where future great power competition is expected to occur.


#143 2024-03-28 19:04:15

- SpaceNut

- Administrator

- From: New Hampshire

- Registered: 2004-07-22

- Posts: 29,932

Re: Lithium used for batteries

The COVID dip is really due to far fewer people commuting to work for a short period of time. I was among those who could not work for 4 months early in 2020, as I was considered to be in the at-risk age group...


#144 2024-03-29 12:07:46

- Spaniard

- Member

- From: Spain

- Registered: 2008-04-18

- Posts: 144

Re: Lithium used for batteries

You could get really upset that you're not convincing me that your beliefs about this are correct, or you could come back to this idea in about 20 years after it's beyond obvious that wind and solar energy will only ever be part of the total energy mix, much like nuclear energy, unless they're implemented in radically different ways, as compared to what we're presently doing. By then you should be able to temper beliefs about the next big future with knowledge of how seemingly world-changing technologies of the past, didn't cause the world that existed prior to cease to exist.

Upset no. Disappointed maybe.

That's pretty much a declaration that you won't accept any proof short of the transition being completed, which will occur long after this forum has ceased to exist.

Even reaching the peak won't be enough for you to admit that renewables are replacing fossil fuels, and that moment could occur within the next decade, as I posted before. Even then, you are pretty much admitting that you will maintain your position.

Nobody is arguing that the total energy migration could be completed in 20 years. 2050 is about 25 years away, and even the net zero projections, instead of removing all fossil fuels, expect to combine some fossil fuels with some negative emissions to achieve that.

And that scenario is considered to require a big political push that doesn't actually exist.

Still, the projection is about shifting from fossil fuels to renewables as the main energy source.


#145 2024-03-29 13:48:34

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Spaniard,

I'm going to believe that there's an actual energy transition to renewables from hydrocarbon fuels when I see an actual decrease in the consumption of hydrocarbon fuels. Thus far, available data does not show that. In point of fact, available data shows significant increases in hydrocarbon fuel consumption. I told you, again and again, that hydrocarbon fuel energy consumption was my measuring stick to determine if an actual transition was underway, or not. There is no energy transition presently underway, only increased consumption of hydrocarbon fuels while pretending that we're transitioning away from them, with lots of contorting basic logic and math to attempt to hide that simple fact.

Maybe you're not arguing that a transition will be completed in 20 to 25 years, but lots of other people think that there will be a transition in 10 to 15 years. It took 100 years of relatively free-flowing hydrocarbon fuel energy consumption to provide us with the world we have today. It took 100 years to create the electric grid we have today. To double or triple the existing electric grid's total capacity, I would guess that process might take another 100 years.
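
As a quick sanity check on that guess, here is a sketch of the compound annual growth rate implied by doubling or tripling capacity over 100 years. The 100-year horizon and the multiples come from the paragraph above; steady compound growth and the function name are simplifying assumptions:

```python
def implied_annual_growth(multiple, years):
    """Constant annual growth rate that multiplies capacity by `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


doubling = implied_annual_growth(2.0, 100)
tripling = implied_annual_growth(3.0, 100)

print(f"double in 100 years: {doubling:.2%} per year")
print(f"triple in 100 years: {tripling:.2%} per year")
```

Under that assumption the guess corresponds to net grid growth of roughly 0.7% to 1.1% per year, which gives a concrete figure to compare against actual build-out rates.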


#146 2024-03-29 15:58:28

- SpaceNut

- Administrator

- From: New Hampshire

- Registered: 2004-07-22

- Posts: 29,932

Re: Lithium used for batteries

There are many synthetics being created and used, but the rub is that you cannot detect the difference between the normal and the artificial.


#147 2024-03-29 19:30:44

- Calliban

- Member

- From: Northern England, UK

- Registered: 2019-08-18

- Posts: 4,271

Re: Lithium used for batteries

kbd512 wrote: You could get really upset that you're not convincing me that your beliefs about this are correct, or you could come back to this idea in about 20 years after it's beyond obvious that wind and solar energy will only ever be part of the total energy mix, much like nuclear energy, unless they're implemented in radically different ways, as compared to what we're presently doing. By then you should be able to temper beliefs about the next big future with knowledge of how seemingly world-changing technologies of the past, didn't cause the world that existed prior to cease to exist.

Upset no. Disappointed maybe.

That's pretty much a declaration that you won't accept any proof short of the transition being completed, which will occur long after this forum has ceased to exist.

Even reaching the peak won't be enough for you to admit that renewables are replacing fossil fuels, and that moment could occur within the next decade, as I posted before. Even then, you are pretty much admitting that you will maintain your position.

Nobody is arguing that the total energy migration could be completed in 20 years. 2050 is about 25 years away, and even the net zero projections, instead of removing all fossil fuels, expect to combine some fossil fuels with some negative emissions to achieve that.

And that scenario is considered to require a big political push that doesn't actually exist.

Still, the projection is about shifting from fossil fuels to renewables as the main energy source.

Intermittent renewable energy is not displacing fossil fuels as things stand. It is modestly reducing the amount of fuel burned in electricity production. But even that small achievement is questionable when the entire lifecycle CO2 emissions are calculated, given the amount of fossil fuel used to build RE systems in the first place. For renewable energy to actually displace fossil fuels, it would need to: (1) Operate without fossil fuel fired backup; (2) Provide large amounts of energy to applications that are not presently electricity based (heat and transport); (3) Provide the energy needed to produce replacement RE infrastructure.

Taken together, this is a lot more difficult than generating modest amounts of electricity for a large grid. It will be very difficult and expensive to do these things, because RE lacks the power density that fossil fuel based systems provide. It means building an energy system that requires one to two orders of magnitude greater investment of materials and energy to work. And those processed material and embodied energy inputs must be provided by a renewable energy source which is more expensive and has lower power density. In doing this we are trying to fight the second law of thermodynamics. Using a low EROI energy source to build more low EROI energy sources is a losing proposition. We will be living in a very different world if we have to do that. A much poorer world.
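To put a rough shape on the EROI point, here is a minimal sketch of the usual net energy relationship: the fraction of gross output left over after paying back the energy invested is 1 - 1/EROI. The EROI values in the loop are hypothetical round numbers chosen only to show the trend. They are not figures from this thread and not measurements of any real energy source.

```python
def net_energy_fraction(eroi):
    """Fraction of gross energy output left after repaying the energy invested."""
    if eroi <= 0:
        raise ValueError("EROI must be positive")
    return 1 - 1 / eroi


# HYPOTHETICAL EROI values, for illustration only.
for eroi in (30, 10, 5, 2):
    share = net_energy_fraction(eroi)
    print(f"EROI {eroi:>2}: {share:.0%} of gross output is left for everything else")
```

The loss is small at high EROI and grows quickly as EROI approaches 1, which is roughly the concern about using a low EROI source to build its own replacements.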

Last edited by Calliban (2024-03-29 19:45:37)

"Plan and prepare for every possibility, and you will never act. It is nobler to have courage as we stumble into half the things we fear than to analyse every possible obstacle and begin nothing. Great things are achieved by embracing great dangers."

#148 2024-03-29 23:43:29

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

Calliban,

In doing this we are trying to fight the second law of thermodynamics. Using a low EROI energy source to build more low EROI energy sources is a losing proposition. We will be living in a very different world if we have to do that. A much poorer world.

Finally, an engineer comes straight out and says what we're really trying to do here. At a macro scale, all we're actually doing is dumping money, energy, materials, and labor into energy generating systems that ultimately had to be abandoned in order to provide most of humanity with their present quality of life. The technology for harvesting energy from these sources has improved by leaps and bounds, but the overall quality of the energy source has not, which means we'd be resigning ourselves to a world that all of humanity previously swore off because it was such a miserable existence compared to what came after industrialization.

#149 2024-07-07 16:21:10

- kbd512

- Administrator

- Registered: 2015-01-02

- Posts: 8,350

Re: Lithium used for batteries

I've been searching for ideal applications for Lithium-ion batteries where their service life is not questionable. I've noted how poor electro-chemical batteries are for wheeled passenger vehicles, or nearly any other type of wheeled vehicle for that matter. However, there appears to be an ideal vehicle application, wherein an appropriately-sized battery will not be stressed at all during normal operation.

We often refer to our submarines, both diesel-electric and nuclear electric, as "electric boats". In point of fact, one of our major submarine manufacturers is named "The Electric Boat Company". It turns out that Lithium-ion batteries are an almost ideal power source for stealthy fast attack submarines expected to operate in the littorals.

There are various reasons why Lithium-ion batteries work so well for this application, but suffice to say that the best reasons are noise reduction due to very limited cooling requirements for traveling at typical cruising speeds, and an ability to travel a useful distance using the battery alone, without the need to carry diesel engines or fuel.

The last class of diesel-electric submarines built for the US Navy, the Barbel class, was powered by a trio of 1,050hp Fairbanks-Morse diesel engines to recharge the batteries, combined with two banks of batteries and a pair of powerful 2,350hp General Electric motors to drive these vessels underwater. Each diesel engine has a 25,000kg dry weight, according to the manufacturer, and these engines remain in use today aboard nuclear submarines for backup power. The Lead-acid batteries of the Barbel class delivered a varying amount of energy, dependent upon discharge rate. The faster the discharge rate, the lower the total energy delivered. Each cell weighed in at a very hefty 460kg. There were 504 cells in total in two banks, one forward and one aft, and each bank of batteries was known as a "well" or "battery well". Thus, 231,840kg of total battery weight, plus 75,000kg for the diesel engines, plus 112,000 gallons of diesel fuel weighing in at 360,697kg. This puts the weight of the major components of the primary power and propulsion system, less electric motors and engine lube oil, at 667,537kg.

10 hour rating: 2 banks * 6,850Ah * 414V = 5,671,800Wh

5 hour rating: 2 banks * 6,100Ah * 414V = 5,050,800Wh

1 hour rating: 2 banks * 4,000Ah * 414V = 3,312,000Wh

In terms of Lithium-ion battery capacity, 667,537kg is equivalent to about 133.5MWh, which works out to roughly 200Wh/kg.
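The weight and capacity figures above can be re-derived with a few lines of Python. This is only a sketch of the arithmetic. The 200Wh/kg Lithium-ion figure is an assumption back-calculated from 133.5MWh / 667,537kg, and the variable names are made up for illustration.

```python
# Barbel class power plant weight budget, using the figures quoted above.
CELL_WEIGHT_KG = 460
CELL_COUNT = 504
DIESEL_ENGINE_KG = 25_000
DIESEL_ENGINE_COUNT = 3
FUEL_KG = 360_697            # 112,000 gallons of diesel fuel
BANKS = 2
BANK_VOLTAGE = 414

# ASSUMPTION: specific energy implied by 133.5MWh / 667,537kg.
LI_ION_WH_PER_KG = 200

battery_kg = CELL_WEIGHT_KG * CELL_COUNT
engines_kg = DIESEL_ENGINE_KG * DIESEL_ENGINE_COUNT
total_kg = battery_kg + engines_kg + FUEL_KG


def lead_acid_wh(amp_hours_per_bank):
    """Energy delivered by both Lead-acid banks at a given amp-hour rating."""
    return BANKS * amp_hours_per_bank * BANK_VOLTAGE


# Discharge time in hours -> rated amp-hours per bank.
ratings_wh = {hours: lead_acid_wh(ah)
              for hours, ah in {10: 6_850, 5: 6_100, 1: 4_000}.items()}

# Same total weight spent entirely on Lithium-ion cells.
li_ion_mwh = total_kg * LI_ION_WH_PER_KG / 1e6

print(f"Lead-acid battery weight: {battery_kg:,} kg")
print(f"Batteries + engines + fuel: {total_kg:,} kg")
for hours, wh in ratings_wh.items():
    print(f"{hours} hour rating: {wh:,} Wh")
print(f"Lithium-ion equivalent: {li_ion_mwh:.1f} MWh")
```

Changing LI_ION_WH_PER_KG is the quickest way to see how sensitive the 133.5MWh figure is to the assumed battery technology.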

The surface running-optimized diesel-electric submarines of the 1950s would require about 287,920W (roughly 288kW) of continuous power to maintain about 5.9 knots of speed. The teardrop shaped Barbel class would attain a greater submerged speed at the same discharge rate. Given 133.5MWh of stored electrical energy to work with, 463 hours (19 days 7 hours) of submerged operation at about 6 knots, possibly slightly more, should be expected. That equates to 2,778 nautical miles of range on the battery alone, at a speed comparable to what nuclear powered fast attack submarines operate at in the littorals, in order to reduce their operating noise to within acceptable limits. For a direct-drive electric submarine operating batteries that don't require much cooling at the discharge rates involved, boats so-powered would be almost silent while operating in some of the most dangerous conditions for any type of submarine. This would provide a crystal clear advantage for us to capitalize on to maintain naval dominance in the face of rival powers who wish to control or subvert their neighbors.
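The endurance and range numbers follow directly from dividing stored energy by the cruising load. A minimal sketch, assuming the load stays constant at the quoted figure and that propulsion is the only drain on the battery (crew and electronics loads are ignored here, which is an assumption of this sketch):

```python
STORED_WH = 133.5e6          # 133.5MWh of Lithium-ion capacity
CRUISE_LOAD_W = 287_920      # continuous power at about 6 knots
CRUISE_SPEED_KNOTS = 6

# Energy divided by power gives time in hours.
endurance_hours = STORED_WH / CRUISE_LOAD_W

# Work in whole hours, as the post does.
whole_hours = int(endurance_hours)
days, hours = divmod(whole_hours, 24)

# Knots are nautical miles per hour, so range is speed times time.
range_nmi = whole_hours * CRUISE_SPEED_KNOTS

print(f"Endurance: {whole_hours} hours ({days} days {hours} hours)")
print(f"Range: {range_nmi:,} nautical miles")
```

Any additional load on the battery would be added to CRUISE_LOAD_W and would shorten both numbers proportionally.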

Prior to the use of exorbitantly expensive nuclear powered submarines which are most useful for blue water operations far from any land masses, but decidedly inferior in the littoral regions around islands and coastal areas where virtually all modern resource-based conflicts now occur, the US operated submarine tenders to replenish our submarines' fuel tanks and to repair them at sea. If we had a small fleet of modern submarine tenders, the batteries of all-electric Lithium-ion powered boats could be recharged using small modular reactors or conventional diesel engines.

What is most notable about operating this way is how long the batteries would last. If each boat conducted a 15 day patrol per month, with 15 days of down time between patrols for repair / crew rest / recharging of the batteries, then the battery life would greatly exceed the operational service life of the submarine, to the point that it would make more sense to have half as many batteries as total submarine hulls.

The most striking advantage is the purchase cost and operating cost. Each 133.5MWh battery would cost $13.35M at today's prices. If we purchased 100 batteries, the total cost for the battery assets is $1.335B. That is less than 1/3rd the cost of a single Virginia class nuclear powered fast attack submarine, which we cannot build in the quantities required, at the rate required. The electric motors would cost about $2M to $4M per boat. The hull cost for each boat could be reduced to perhaps $10M. Total cost for each boat would range somewhere between $40M and $50M per copy, assuming that the electronics cost about as much as the entire boat (hull + propulsion system + battery).

The bottom line is that $6B, the cost of a single Virginia class boat, would pay for 120 all-electric boats. Quantity has a quality of its own. Numbers win wars, forever and always. The purpose of these boats would be to hunt and kill enemy missile boats, corvettes, frigates, and of course, other submarines. They would either operate independently in wolf packs or provide a submarine screen for our valuable nuclear powered aircraft carriers.
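The cost figures can be checked the same way. A rough sketch: the $100/kWh battery price is an assumption back-calculated from $13.35M / 133.5MWh, and the per-boat total takes "electronics cost about as much as the entire boat" to mean a simple doubling of hull + motors + battery.

```python
BATTERY_KWH = 133_500
USD_PER_KWH = 100            # ASSUMPTION: implied by $13.35M for 133.5MWh
HULL_USD = 10e6
MOTOR_USD_LOW, MOTOR_USD_HIGH = 2e6, 4e6
VIRGINIA_USD = 6e9           # cost of a single Virginia class boat, as quoted
BOAT_USD_ROUND = 50e6        # upper end of the quoted per-boat estimate

battery_usd = BATTERY_KWH * USD_PER_KWH
fleet_batteries_usd = 100 * battery_usd


def boat_cost(motor_usd):
    """Hull + motors + battery, doubled to cover the electronics."""
    platform_usd = HULL_USD + motor_usd + battery_usd
    return 2 * platform_usd


low_usd = boat_cost(MOTOR_USD_LOW)
high_usd = boat_cost(MOTOR_USD_HIGH)
boats_per_virginia = VIRGINIA_USD / BOAT_USD_ROUND

print(f"One battery: ${battery_usd / 1e6:.2f}M")
print(f"100 batteries: ${fleet_batteries_usd / 1e9:.3f}B")
print(f"Per boat: ${low_usd / 1e6:.1f}M to ${high_usd / 1e6:.1f}M")
print(f"Boats per Virginia class budget: {boats_per_virginia:.0f}")
```

Summed this way, the components come out slightly above the $40M to $50M range, so the per-boat figure is best read as a round estimate rather than an exact total.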

The balance of power won't shift due to the presence or absence of a singular Virginia class boat. If you have 60 boats roaming around in the enemy's backyard, hunting for targets, they will rapidly become a force unto themselves, one capable of influencing a war by picking off all the supporting ships and submarines an opposing naval force might array against us or our allies. Wherever the enemy has a small task group sailing through, we can have at least one electric boat trailing them, either waiting for them to make a mistake or simply lying in wait for whatever ships pass through the area. When you have numbers on your side, you can afford to operate that way. During WWII, allied submarines and miniature aircraft carriers had an outsized impact on sinking enemy shipping and influencing the land wars near coastal regions. Sheer numbers made the difference, rather than including every conceivable capability into a single vessel or task group. Modern technology allows us to use economy of scale and economy of force to achieve the same end goal today. When deterrence fails, then total available combat power will still prevail.

#150 2024-07-08 14:34:54

- GW Johnson

- Member

- From: McGregor, Texas USA

- Registered: 2011-12-04

- Posts: 6,109

- Website

Re: Lithium used for batteries

That's an interesting idea. Never thought about it in those terms.

You would be somewhat restricted with a 15-day mission length. The old WW2 fleet boats were min 90-day missions, and some went past 120 days.

GW

GW Johnson

McGregor, Texas

"There is nothing as expensive as a dead crew, especially one dead from a bad management decision"
